AI regulations and world governments: balancing innovation, safety, and sovereignty

Artificial intelligence (AI) is transforming economies, reshaping industries, and altering the way societies function. Its potential is enormous—from diagnosing diseases and optimizing supply chains to enabling autonomous vehicles and personalizing education. But with its rapid evolution comes a pressing challenge: how can governments regulate AI in a way that fosters innovation while protecting citizens from potential harm? Around the world, governments are wrestling with this question, often taking different paths based on political systems, economic priorities, and cultural values.

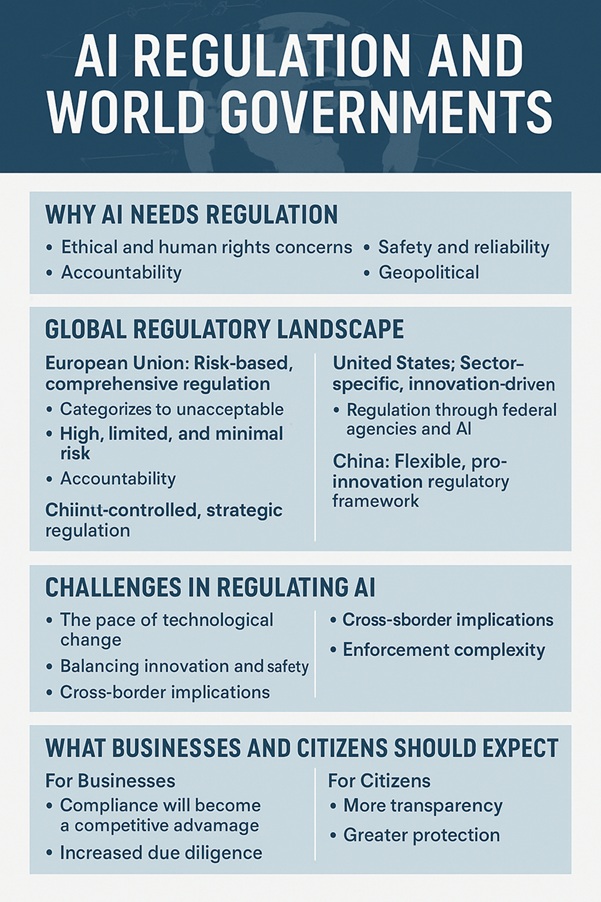

- Why AI needs regulation

AI systems are not neutral tools. Their design, training data, and applications can introduce risks—ranging from biased decision-making and job displacement to privacy breaches and even national security threats. Without regulation, AI development may prioritize commercial or strategic advantage over societal wellbeing. Key reasons for regulation include:

- Ethical and human rights concerns: AI can inadvertently reinforce discrimination, as seen in hiring algorithms or facial recognition systems that underperform for certain demographic groups.

- Safety and reliability: Autonomous systems, such as self-driving cars or AI-assisted surgery, require high safety standards to prevent harm.

- Accountability: When an AI system makes an error, it can be unclear who is responsible—the developer, the deployer, or the AI itself.

- Geopolitical competition: Countries view AI leadership as a strategic advantage, raising concerns about military applications and cyber capabilities.

- The global regulatory landscape

No single, unified global AI regulation exists. Instead, nations and regions are creating frameworks tailored to their political and economic environments. The diversity in approaches reflects differing philosophies about technology governance.

European Union: risk-based, comprehensive regulation

The EU is spearheading one of the most ambitious AI regulatory efforts with the AI Act. The Act entered into force in August 2024 and applies in stages, with most of its obligations applying from August 2026. It categorizes AI systems into four risk levels:

- Unacceptable risk: Prohibited (e.g., social scoring of the kind associated with China's social credit system, manipulative subliminal techniques).

- High risk: Subject to strict compliance, testing, and oversight (e.g., AI in recruitment, education, healthcare, law enforcement).

- Limited risk: Transparency requirements (e.g., chatbots must disclose they are not human).

- Minimal risk: No mandatory requirements, though voluntary ethical practices are encouraged.

The EU’s approach emphasizes human oversight, safety, and transparency, often influencing other countries’ policies—similar to the GDPR’s global impact on data privacy.

United States: sector-specific, innovation-driven

The U.S. lacks a single AI law, instead opting for a sectoral approach. Federal agencies regulate AI within their jurisdictions—for example:

- FDA for medical AI devices.

- FTC for consumer protection against deceptive AI marketing.

- NHTSA for autonomous vehicles.

In 2023, the White House secured voluntary safety commitments from leading tech companies and issued an AI Executive Order focused on safety, equity, and transparency. That order was revoked in January 2025. The American model prioritizes innovation and market competitiveness, but critics say it risks leaving regulatory gaps.

China: state-controlled, strategic regulation

China sees AI as central to its national strategy and regulates it through strong, state-led rules. The Algorithm Regulation (2022) requires companies to file details of their recommendation algorithms with the government. The Generative AI Measures (2023) require AI outputs to reflect socialist values and prohibit content that threatens state security. This centralized model fosters rapid AI growth while also ensuring political oversight of information.

United Kingdom: flexible, pro-innovation

Post-Brexit, the UK has opted for a pro-innovation regulatory framework rather than a new AI-specific law. It delegates oversight to existing regulators such as the ICO (for privacy) and the CMA (for competition). The government publishes guidance but avoids overly prescriptive rules, aiming to encourage AI startups.

Other notable approaches

- Canada: The proposed Artificial Intelligence and Data Act (AIDA), part of Bill C-27, focused on responsible AI in trade and commerce. The bill lapsed when Parliament was prorogued in January 2025.

- Japan: Promotes “Society 5.0,” balancing innovation with human-centric values.

- India: There is currently no AI-specific law, but the government is developing a national AI policy that emphasizes ethics and inclusive growth.

- Challenges in regulating AI

The pace of technological change

AI evolves faster than legislative processes. Laws can become outdated before they’re implemented. This creates a “chasing the technology” problem for policymakers.

Balancing innovation and safety

Over-regulation can stifle innovation and deter investment, while under-regulation can allow harmful applications to proliferate.

Cross-border implications

AI systems operate across national borders, making purely domestic regulation insufficient. For example, a chatbot developed in one country can serve users worldwide, raising jurisdictional challenges.

Enforcement complexity

Even with laws in place, monitoring AI compliance is resource-intensive, especially for high-risk applications in sensitive sectors.

- Emerging trends in AI governance

International cooperation

Organizations like the OECD, UNESCO, and the G7 are creating AI principles to promote interoperability of regulations. The Global Partnership on AI (GPAI) fosters collaboration on ethics, governance, and innovation.

AI auditing and certification

Much like safety certifications for electronics, governments are exploring independent audits for AI systems – testing for bias, robustness, and explainability before deployment.

Public involvement

Some governments and institutions, such as Canada and the EU, are consulting citizens in order to give AI governance greater democratic legitimacy.

Regulatory sandboxes

The UK, Singapore, and others are testing AI sandboxes – controlled environments where companies can trial AI systems under regulator supervision before public release.

- The future: toward global AI norms?

Given AI’s global nature, some experts argue for a “Geneva Convention for AI” – an international treaty setting baseline rules, especially for high-risk and military applications. However, geopolitical rivalries make consensus difficult.

In the short term, we’re likely to see regulatory blocs emerge:

- EU-led bloc: High safety and ethics standards.

- US-led bloc: Innovation-friendly, market-driven.

- China-led bloc: State-controlled, strategically aligned.

These blocs may compete for influence over global AI norms, similar to how data privacy standards evolved.

- What businesses and citizens should expect

For businesses:

- Compliance will become a competitive advantage: Firms that build transparency, fairness, and safety into AI systems from the start will adapt faster to new regulations.

- Increased due diligence: Cross-border operations in particular will need careful compliance mapping, because requirements differ from one jurisdiction to the next.

For citizens:

- More transparency: Expect more disclosures when interacting with AI.

- Greater protection: Stronger safeguards against harmful AI uses, though protection levels will vary by region.

Conclusion

AI regulation is not merely a legal or technical challenge. It is a societal choice about how much control we place over a transformative technology. Governments around the world are taking different paths, but the shared goal is to ensure AI develops in a way that benefits humanity while minimizing harm. The next decade will be critical in determining whether AI becomes a tool of empowerment or division.
