AI Agents

1. What AI agents are and why they matter

AI agents are everywhere: in popular social media, in mainstream media, and in the public imagination. So what exactly are they?

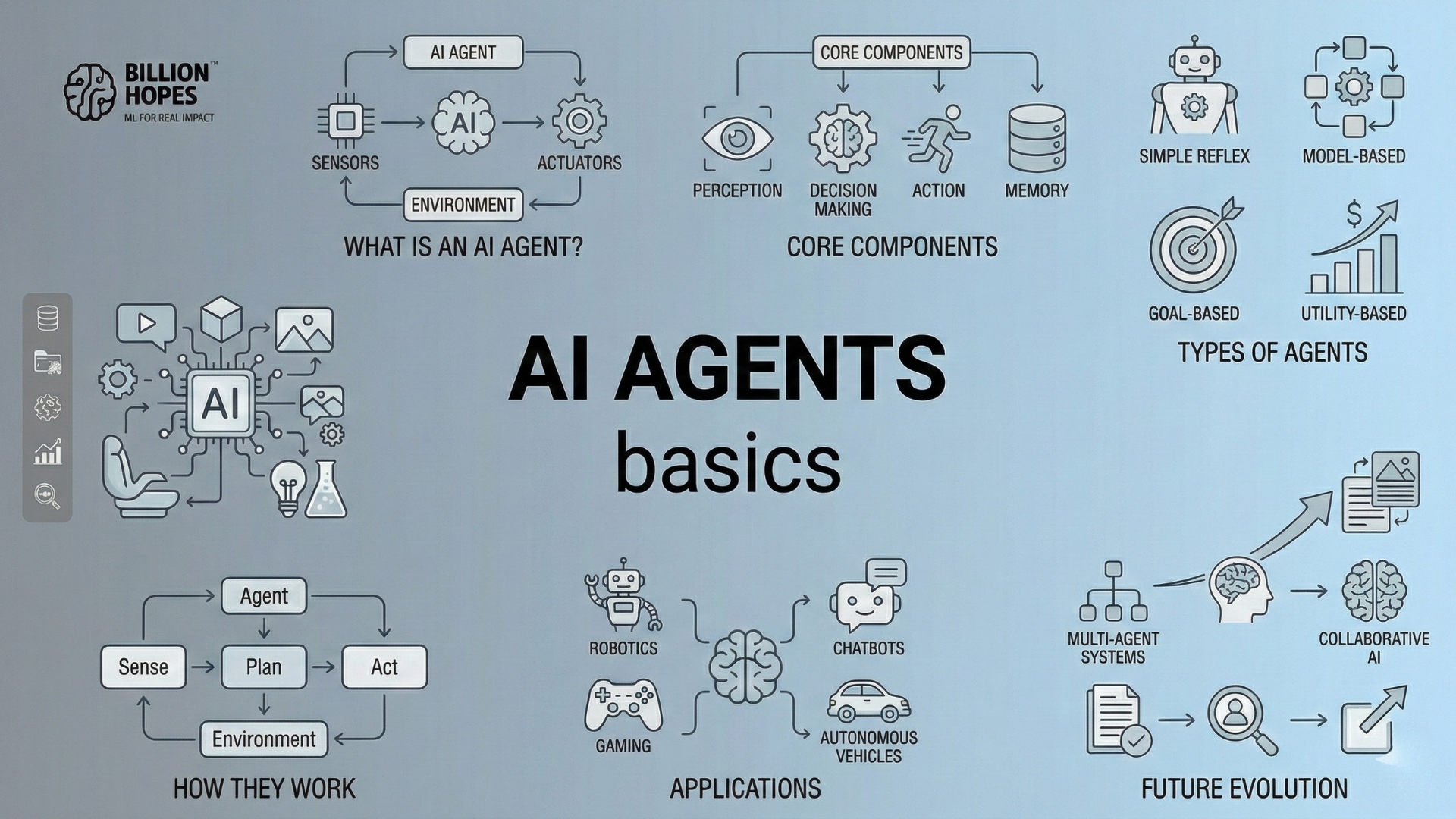

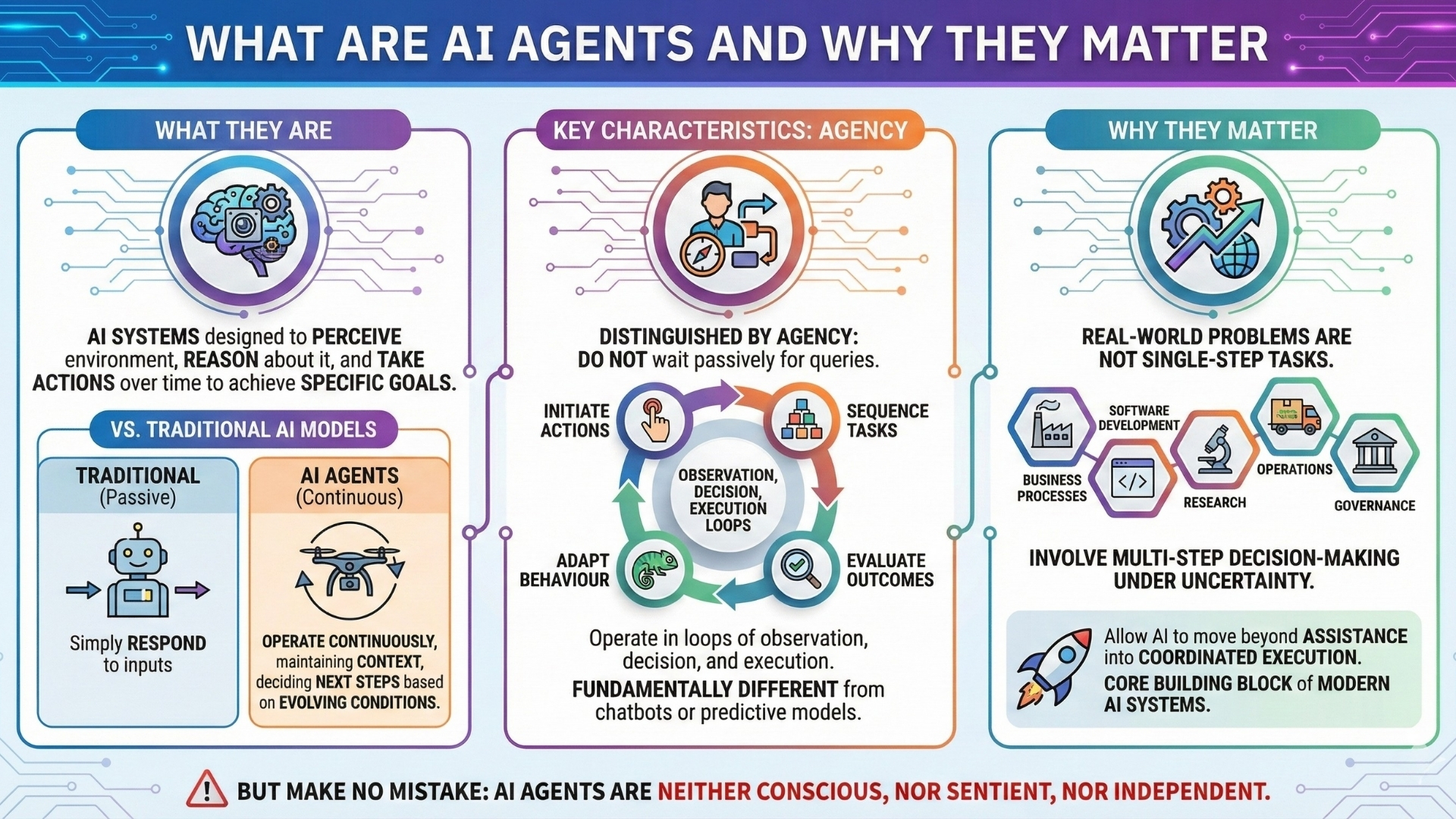

AI agents are artificial intelligence systems designed to perceive an environment, reason about it, and take actions over time to achieve specific goals. Unlike traditional AI models that simply respond to inputs, agents operate continuously, maintaining context and deciding what to do next based on evolving conditions.

What distinguishes AI agents is their agency. An agent does not wait passively for queries. It can initiate actions, sequence tasks, evaluate outcomes, and adapt its behaviour. This ability to operate in loops of observation, decision, and execution makes agents fundamentally different from chatbots or predictive models.

AI agents matter because most real-world problems are not single-step tasks. Business processes, software development, research, operations, and governance involve multi-step decision-making under uncertainty. Agents allow AI to move beyond assistance into coordinated execution, making them a core building block of modern AI systems.

But make no mistake: AI agents are neither conscious, nor sentient, nor independent.

2. Early AI systems: Programs, automation, and limits

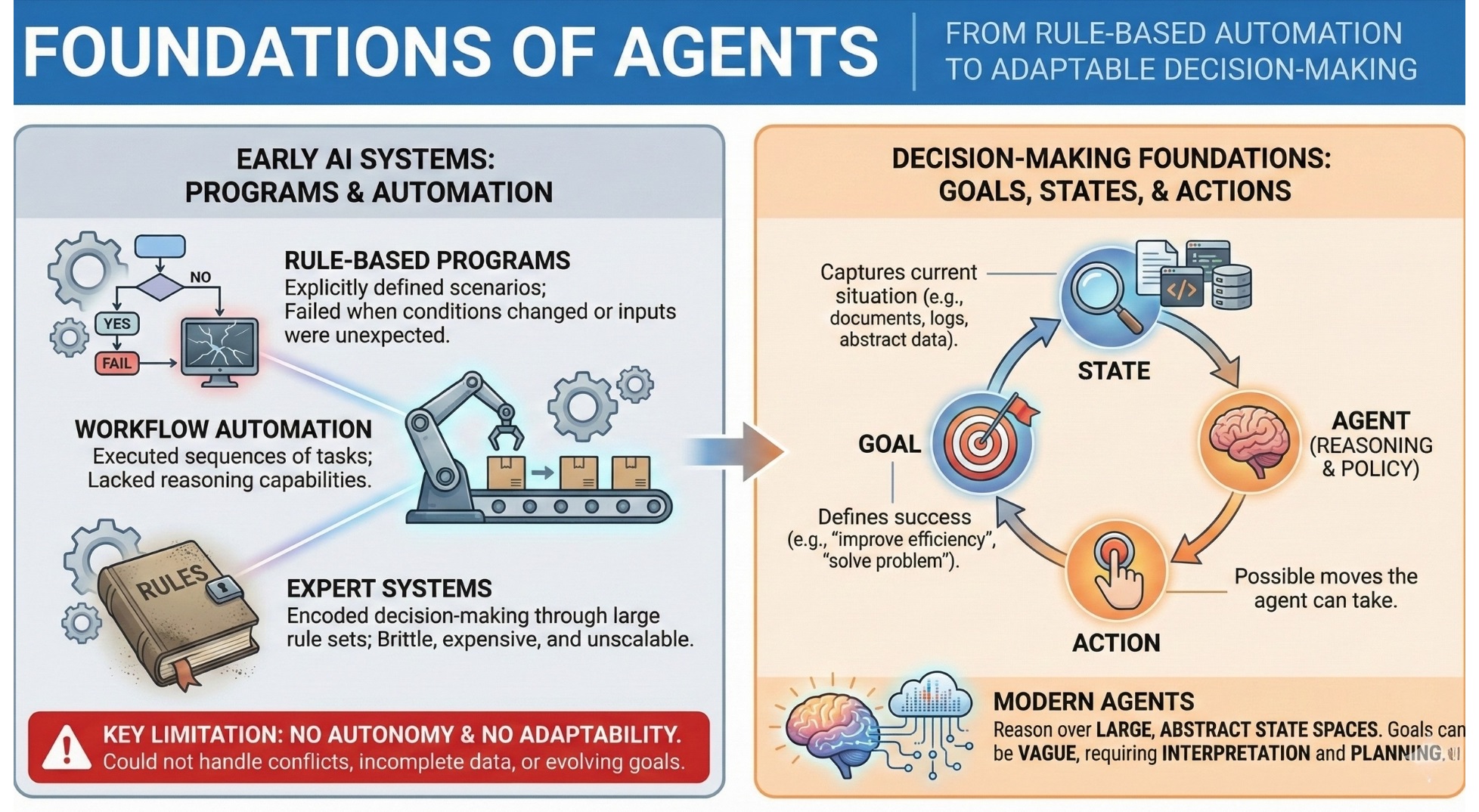

Before AI agents, most intelligent systems were rule-based programs or deterministic automation pipelines. Engineers explicitly defined what the system should do in every possible scenario. These systems worked well in controlled environments but failed when conditions changed or unexpected inputs appeared.

Workflow automation tools could execute sequences of tasks, but they lacked reasoning. Expert systems attempted to encode decision-making through large rule sets, but they were brittle, expensive to maintain, and impossible to scale to real-world complexity.

The key limitation was that these systems had no autonomy and no adaptability. They could not decide what to do when rules conflicted, data was incomplete, or goals evolved. This exposed a gap between automation and intelligence, setting the stage for agent-based approaches.

3. Decision-making foundations: Goals, states, and actions

Every AI agent is built on a foundational framework consisting of states, actions, rewards, and goals. The state captures the current situation of the environment. Actions represent the possible moves the agent can take. Rewards score the outcomes of those actions, signalling how far the agent has moved toward its goal. Goals define what success looks like.

This framework originates from reinforcement learning and control theory, where agents learn or follow policies that map states to actions. Even simple systems, such as thermostats or game-playing bots, operate using this logic.
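To make the vocabulary concrete, here is a minimal sketch of the thermostat case. The target temperature, tolerance, reward shape and one-degree dynamics are illustrative assumptions, not part of any real device; the point is only to show a state, an action space, a goal, a reward and a fixed policy that maps states to actions.

```python
from dataclasses import dataclass

# Goal: the temperature the agent tries to hold (illustrative values).
TARGET = 21.0
TOLERANCE = 0.5

# Action space: the finite set of moves available to the agent.
ACTIONS = ("heat", "cool", "idle")


@dataclass
class State:
    """The agent's view of the environment: here, just the room temperature."""
    temperature: float


def policy(state: State) -> str:
    """Map a state to an action with a fixed rule (nothing is learned)."""
    if state.temperature < TARGET - TOLERANCE:
        return "heat"
    if state.temperature > TARGET + TOLERANCE:
        return "cool"
    return "idle"


def reward(state: State) -> float:
    """+1 inside the goal band, otherwise minus the distance from the target."""
    distance = abs(state.temperature - TARGET)
    return 1.0 if distance <= TOLERANCE else -distance


def step(state: State, action: str) -> State:
    """Toy environment dynamics: heating or cooling moves the temperature by 1 degree."""
    if action not in ACTIONS:
        raise ValueError(f"unknown action {action!r}")
    delta = {"heat": 1.0, "cool": -1.0, "idle": 0.0}[action]
    return State(state.temperature + delta)


if __name__ == "__main__":
    s = State(18.0)
    for _ in range(5):
        a = policy(s)
        s = step(s, a)
        print(f"action={a:<4} temp={s.temperature:.1f} reward={reward(s):.1f}")
```

Starting from 18 degrees, the policy heats three times, reaches the goal band and then idles. A learning agent would differ only in how `policy` is obtained: it would be adjusted from observed rewards instead of being written by hand.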

What differentiates modern agents is their ability to reason over large, abstract state spaces. Instead of numeric variables alone, states may include documents, codebases, conversations, or system logs. Goals may be vague or high-level, such as “improve efficiency” or “solve this problem,” requiring interpretation and planning.

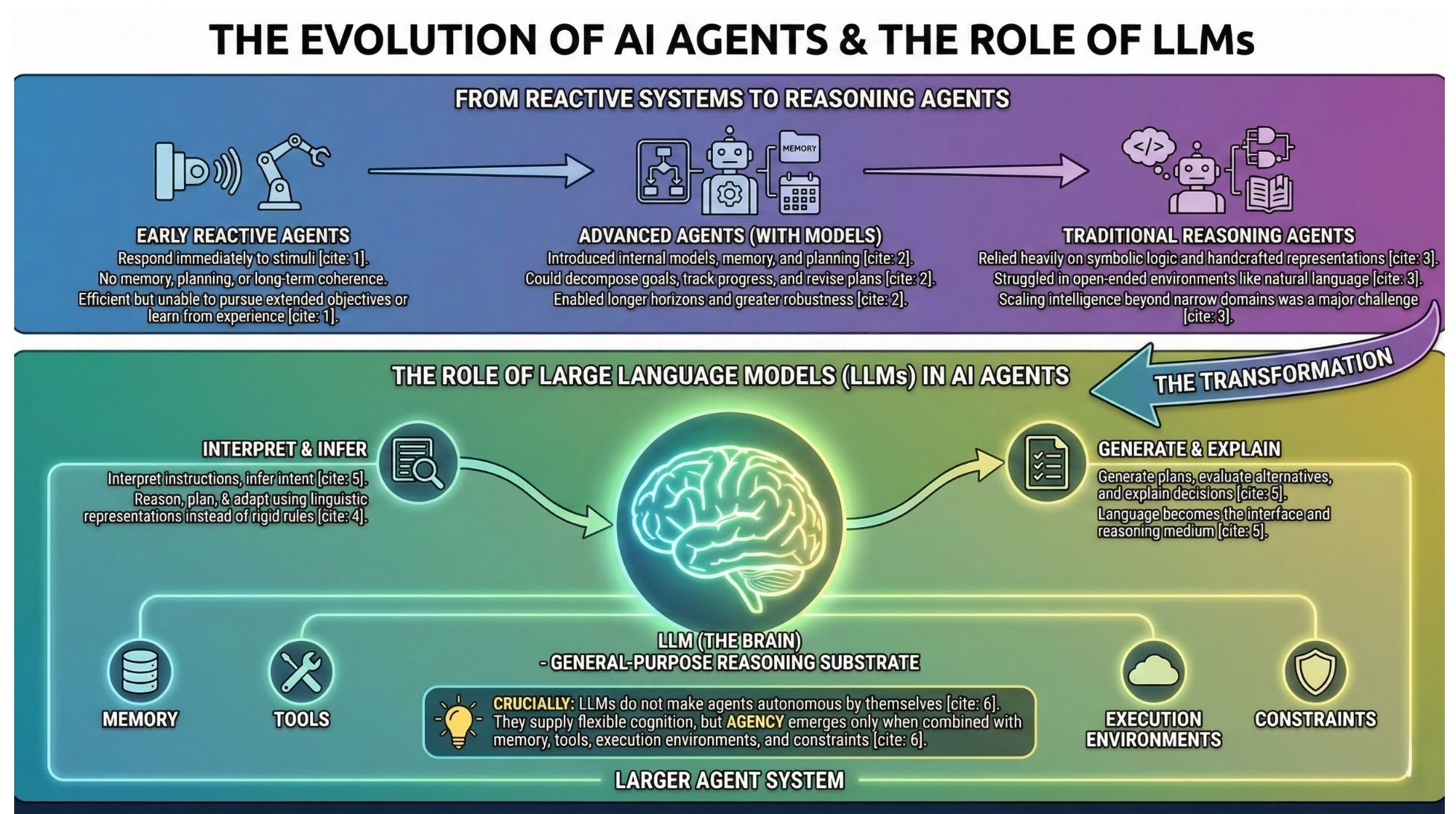

4. From reactive systems to reasoning agents

Early agents were primarily reactive. They responded immediately to stimuli without memory, planning, or long-term coherence. While efficient, such systems could not pursue extended objectives or learn from experience.

More advanced agents introduced internal models, memory, and planning mechanisms. These agents could decompose goals into subgoals, track progress, and revise plans when obstacles appeared. This enabled longer horizons of action and greater robustness.
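The sketch below illustrates goal decomposition, progress tracking and plan revision in their simplest form. The goal, the subgoals in `PLANS` and the fallbacks in `ALTERNATIVES` are hypothetical and hard-coded; a classical deliberative agent would derive them from a symbolic model, and an LLM-based agent might generate them in natural language.

```python
from typing import Callable

# Hypothetical decomposition table: goal -> ordered subgoals.
PLANS = {
    "publish report": ["gather data", "draft report", "review report", "publish"],
}

# Hypothetical fallbacks to try when a subgoal fails (a simple form of plan revision).
ALTERNATIVES = {
    "gather data": ["use cached data"],
}


def pursue(goal: str, execute: Callable[[str], bool]) -> list[str]:
    """Decompose a goal, execute subgoals in order, and revise the plan on failure.

    Returns the subgoals that actually succeeded, in order.
    """
    pending = list(PLANS.get(goal, [goal]))   # the current plan
    done: list[str] = []                      # progress tracking
    revised: set[str] = set()                 # revise each subgoal at most once
    while pending:
        subgoal = pending.pop(0)
        if execute(subgoal):
            done.append(subgoal)
            continue
        fallback = ALTERNATIVES.get(subgoal)
        if not fallback or subgoal in revised:
            raise RuntimeError(f"cannot achieve subgoal {subgoal!r}; plan abandoned")
        revised.add(subgoal)
        pending = fallback + pending          # revise: try the fallback next
    return done


if __name__ == "__main__":
    # Simulate an obstacle: fresh data is unavailable, everything else works.
    def execute(subgoal: str) -> bool:
        return subgoal != "gather data"

    print(pursue("publish report", execute))
```

With the simulated obstacle, the agent swaps "gather data" for "use cached data" and completes the remaining subgoals; when a subgoal fails and no fallback exists, it stops instead of continuing blindly.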

However, traditional reasoning agents relied heavily on symbolic logic and handcrafted representations. They struggled in open-ended environments like natural language, the internet, or dynamic organizations. Scaling intelligence beyond narrow domains remained a major challenge.

5. The role of Large Language Models (LLMs) in AI agents

Large Language Models (LLMs) transformed AI agents by providing a general-purpose reasoning substrate expressed in natural language. Instead of relying on rigid rules, agents could now reason, plan, and adapt using linguistic representations.

An LLM-powered agent can interpret instructions, infer intent, generate plans, evaluate alternatives, and explain decisions. Language becomes both the interface and the reasoning medium.

Crucially, LLMs do not make agents autonomous by themselves. They supply flexible cognition, but agency emerges only when combined with memory, tools, execution environments, and constraints. LLMs are best understood as the “brain” within a larger agent system.

6. How AI agents work at a high level

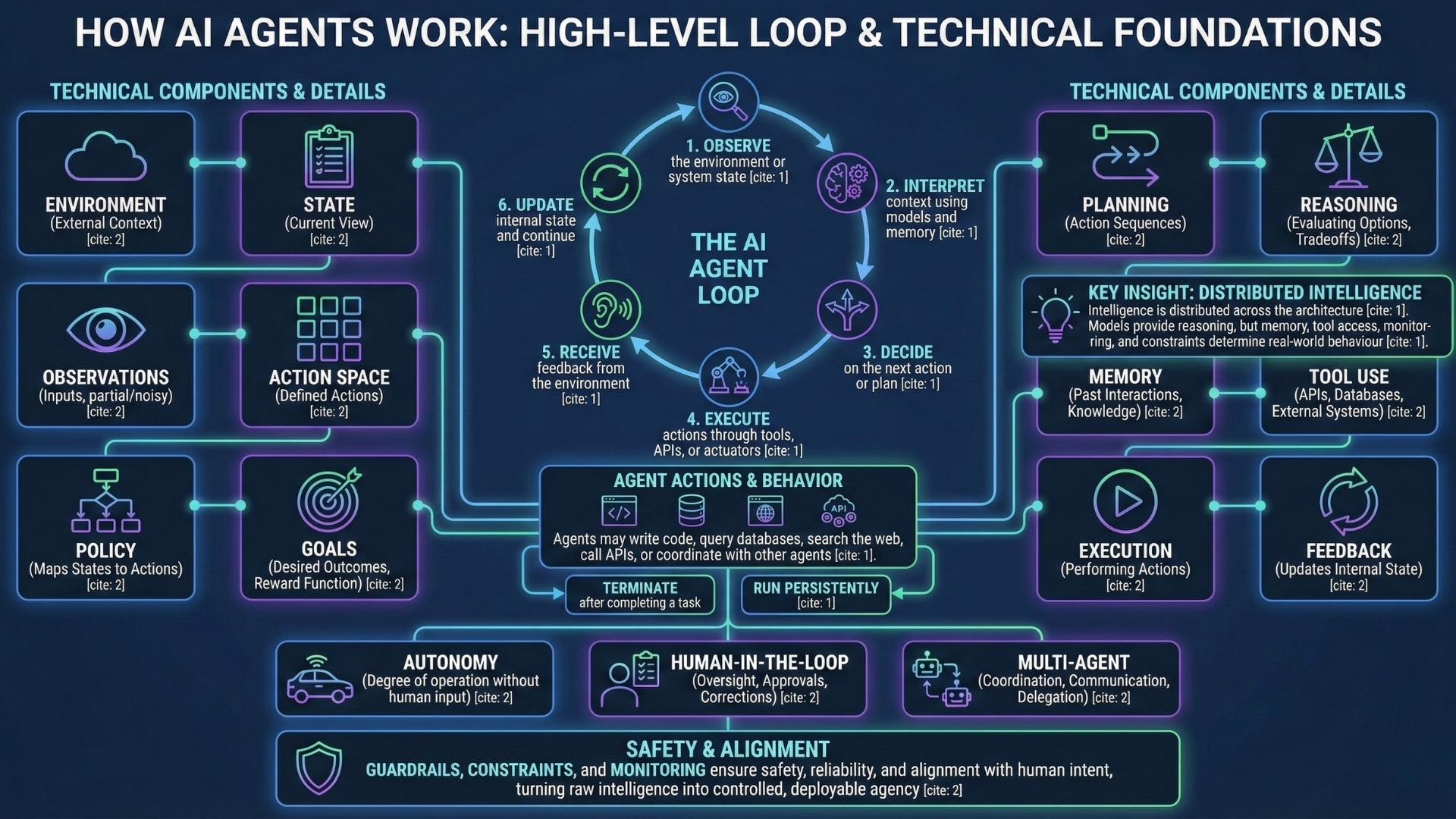

Most modern AI agents operate in a structured loop:

- Observe the environment or system state

- Interpret context using models and memory

- Decide on the next action or plan

- Execute actions through tools, APIs, or actuators

- Receive feedback from the environment

- Update internal state and continue

Agents may write code, query databases, search the web, call APIs, or coordinate with other agents. Some agents terminate after completing a task, while others run persistently.

The key insight is that intelligence is distributed across the architecture. Models provide reasoning, but memory, tool access, monitoring, and constraints determine real-world behaviour.
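The loop described in this section can be sketched in a few lines. This is a minimal, offline illustration under stated assumptions: the environment is a single number, the two tools (`increment`, `double`) are hypothetical, and `decide` is a fixed heuristic standing in for the model call an LLM-based agent would make. The `max_steps` budget is one example of the constraints that shape real-world behaviour.

```python
from typing import Callable, Optional

# Hypothetical tools the agent may call; each maps the current value to a new one.
TOOLS: dict[str, Callable[[int], int]] = {
    "increment": lambda x: x + 1,
    "double": lambda x: x * 2,
}


def decide(observation: int, goal: int, memory: list[str]) -> Optional[str]:
    """Interpret the observation and pick the next tool, or None when the goal is met.

    Stand-in for a model call: an LLM-based agent would receive the observation
    and memory in its prompt. This fixed heuristic ignores memory so the
    example runs offline and deterministically.
    """
    if observation == goal:
        return None
    return "double" if observation * 2 <= goal else "increment"


def run_agent(start: int, goal: int, max_steps: int = 20) -> tuple[int, list[str]]:
    env = {"value": start}   # the environment the agent acts on
    memory: list[str] = []   # internal state carried across iterations
    for _ in range(max_steps):                        # the step budget bounds the loop
        observation = env["value"]                    # observe
        action = decide(observation, goal, memory)    # interpret and decide
        if action is None:                            # task complete: terminate
            break
        env["value"] = TOOLS[action](observation)     # execute through a tool
        feedback = env["value"]                       # receive feedback
        memory.append(f"{action}: {observation} -> {feedback}")  # update state
    return env["value"], memory


if __name__ == "__main__":
    final, trace = run_agent(start=1, goal=10)
    print(final)  # 10
    for entry in trace:
        print(entry)
```

From 1 toward 10, the agent doubles three times (1, 2, 4, 8), then increments twice and stops. If the goal cannot be reached (for example, starting above it), the budget ends the loop rather than letting it run forever.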

TECHNICAL DETAILS: AI agents are built from several core technical components working together as a system. An environment is the external context the agent operates in, while the state represents the agent’s current view of that environment. Observations are the inputs the agent receives, which may be partial or noisy. An action space defines what the agent can do, and a policy maps states or observations to actions. Goals specify desired outcomes, often formalized through a reward function that evaluates success. Planning refers to generating multi-step action sequences, while reasoning involves evaluating options, constraints, and tradeoffs. Memory stores past interactions, intermediate results, or long-term knowledge, enabling continuity over time. Tool use allows agents to call APIs, databases, code executors, or external systems. Execution is the act of performing chosen actions, and feedback updates the agent’s internal state. Autonomy describes the degree to which an agent operates without human input, while human-in-the-loop mechanisms introduce oversight, approvals, or corrections. In multi-agent setups, coordination, communication, and delegation govern interactions between agents. Finally, guardrails, constraints, and monitoring ensure safety, reliability, and alignment with human intent, turning raw intelligence into controlled, deployable agency.
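Several of these components (action space, tool use, execution, memory and a basic guardrail) can be seen together in a small tool registry. This is an illustrative sketch, not the API of any particular framework; `Tool`, `ToolRegistry` and the `word_count` tool are hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Tool:
    name: str
    description: str            # the text a model would read when choosing a tool
    func: Callable[..., Any]


@dataclass
class ToolRegistry:
    """The agent's action space plus a simple execution memory."""
    tools: dict[str, Tool] = field(default_factory=dict)
    memory: list[dict[str, Any]] = field(default_factory=list)

    def register(self, tool: Tool) -> None:
        self.tools[tool.name] = tool

    def execute(self, name: str, **kwargs: Any) -> Any:
        # Guardrail: anything outside the declared action space is refused.
        if name not in self.tools:
            raise PermissionError(f"tool {name!r} is not in the action space")
        result = self.tools[name].func(**kwargs)
        # Record the call and its result so later decisions can use it.
        self.memory.append({"tool": name, "args": kwargs, "result": result})
        return result


if __name__ == "__main__":
    registry = ToolRegistry()
    registry.register(Tool("word_count", "Count the words in a text",
                           lambda text: len(text.split())))
    print(registry.execute("word_count", text="agents act over time"))  # 4
    try:
        registry.execute("delete_files", path="/")
    except PermissionError as err:
        print(err)
```

Keeping the action space explicit means the model can only propose actions; whether they run is decided by code the developer controls.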

7. Why AI agents feel autonomous

AI agents feel autonomous because they display behaviours humans associate with agency: initiative, continuity, and adaptation. They choose actions without constant prompting, maintain goals across time, and adjust when plans fail.

This perception can be misleading. Agents do not possess intent, desire, or awareness. Their autonomy is delegated and conditional, defined by goals, permissions, and system boundaries set by humans.

Understanding this distinction is critical. Treating agents as independent actors rather than engineered systems risks misplaced trust. Effective agent design requires clear accountability, constraints, and escalation paths to humans.
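One way to express delegated, conditional autonomy in code is a permission check that runs before every action. The sketch below assumes a hypothetical three-tier policy (act alone, ask first, escalate anything unknown); the action names and the `reviewer` function are placeholders for whatever a real deployment defines.

```python
from typing import Callable

# Hypothetical permission policy set by humans, not by the agent.
AUTONOMOUS = {"read_file", "run_tests"}        # the agent may act alone
NEEDS_APPROVAL = {"deploy", "send_email"}      # a human must sign off first


def authorize(action: str, approve: Callable[[str], bool]) -> str:
    """Decide how a proposed action is handled.

    Returns "allowed" (within delegated autonomy), "approved" or "denied"
    (human-in-the-loop decision), or "escalated" (unknown action: do nothing
    and hand the decision to a person).
    """
    if action in AUTONOMOUS:
        return "allowed"
    if action in NEEDS_APPROVAL:
        return "approved" if approve(action) else "denied"
    return "escalated"


if __name__ == "__main__":
    # Stand-in for a real approval step (a UI prompt, a ticket, a chat message).
    def reviewer(action: str) -> bool:
        return action != "deploy"

    for proposed in ("run_tests", "send_email", "deploy", "drop_database"):
        print(proposed, "->", authorize(proposed, reviewer))
```

The default branch matters most: an action nobody anticipated is escalated to a human rather than executed, which keeps accountability with people.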

8. Key types of AI agents

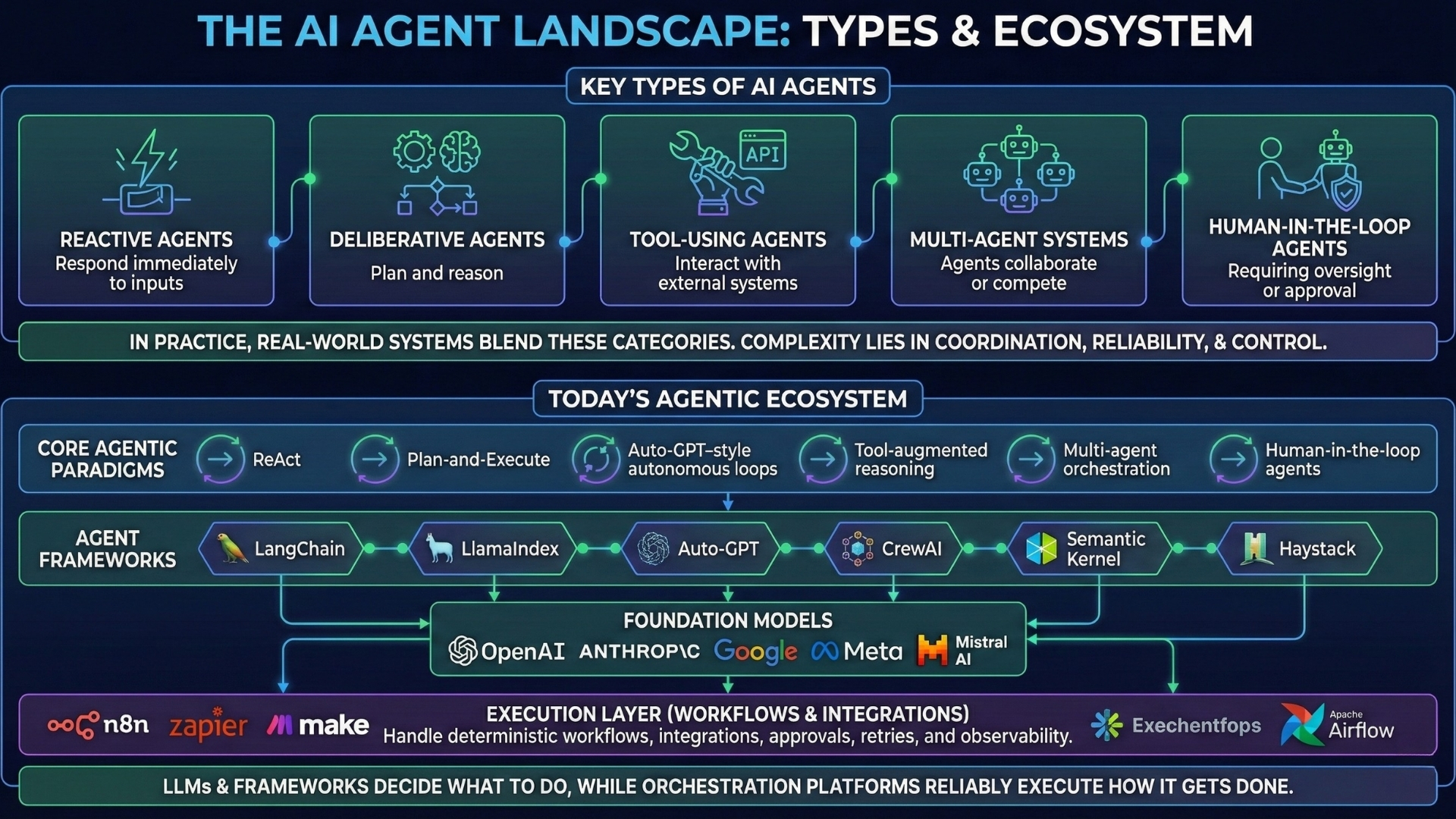

AI agents appear in multiple forms:

- Reactive agents that respond immediately to inputs

- Deliberative agents that plan and reason

- Tool-using agents that interact with external systems

- Multi-agent systems where agents collaborate or compete

- Human-in-the-loop agents requiring oversight or approval

In practice, real-world systems blend these categories. A single application may involve multiple agents with different responsibilities. The complexity lies in coordination, reliability, and control, not individual intelligence.
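Coordination between agents with different responsibilities can be illustrated with a simple sequential hand-off. The roles, the `SPECIALISTS` table and the string outputs are hypothetical; in a real system each specialist would wrap its own model, prompt and tools, and coordination is often far less linear than this.

```python
from typing import Callable

# A hypothetical "agent" takes a task plus the context handed over by the
# previous agent and returns its output. Plain functions keep this runnable.
Agent = Callable[[str, str], str]

SPECIALISTS: dict[str, Agent] = {
    "research": lambda task, context: f"notes on {task}",
    "writing": lambda task, context: f"draft based on {context}",
    "review": lambda task, context: f"reviewed {context}",
}


def coordinate(steps: list[tuple[str, str]]) -> list[str]:
    """Delegate each (role, task) pair to the matching specialist, in order,
    passing each agent's output on as the next agent's context."""
    outputs: list[str] = []
    context = ""
    for role, task in steps:
        agent = SPECIALISTS.get(role)
        if agent is None:
            raise ValueError(f"no agent registered for role {role!r}")
        context = agent(task, context)
        outputs.append(context)
    return outputs


if __name__ == "__main__":
    pipeline = [("research", "AI agents"), ("writing", "summary"), ("review", "summary")]
    print(coordinate(pipeline)[-1])  # reviewed draft based on notes on AI agents
```

Even in this toy version, the hard parts are the ones the paragraph names: routing work to the right agent, passing context reliably, and failing loudly when no agent can take a task.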

TODAY’S AGENTIC ECOSYSTEM: The modern agentic AI ecosystem spans paradigms, models, agent frameworks, and execution platforms. Core agentic paradigms include ReAct, Plan-and-Execute, Auto-GPT–style autonomous loops, tool-augmented reasoning, multi-agent orchestration, and human-in-the-loop agents. Prominent agent frameworks include LangChain, LlamaIndex, Auto-GPT, CrewAI, Semantic Kernel, and Haystack. These are typically powered by foundation models from OpenAI, Anthropic, Google, Meta, and Mistral AI. Equally important is the execution layer, where tools like n8n, Zapier, Make (formerly Integromat), and Apache Airflow handle deterministic workflows, integrations, approvals, retries, and observability. In practice, LLMs and agent frameworks decide what to do, while orchestration platforms like n8n reliably execute how it gets done, forming the backbone of real-world, production-grade agentic systems.
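To illustrate the execution-layer half of that split, here is a plain-Python sketch of the retry-and-log behaviour such platforms provide. It deliberately does not use the API of n8n, Airflow or any other named tool; `run_step` and the `make_flaky` test helper are hypothetical.

```python
import time
from typing import Any, Callable, Optional


def run_step(name: str, step: Callable[[], Any], max_attempts: int = 3,
             backoff_s: float = 0.0, log: Optional[list[str]] = None) -> Any:
    """Run one step, retrying on failure and logging every attempt."""
    if log is None:
        log = []
    for attempt in range(1, max_attempts + 1):
        try:
            result = step()
        except Exception as exc:  # a real executor would retry only transient errors
            log.append(f"{name}: attempt {attempt} failed ({exc})")
            if attempt == max_attempts:
                raise
            time.sleep(backoff_s * attempt)   # linear backoff between attempts
        else:
            log.append(f"{name}: ok on attempt {attempt}")
            return result


def make_flaky(failures: int) -> Callable[[], str]:
    """Hypothetical step that fails `failures` times before succeeding."""
    calls = {"n": 0}

    def step() -> str:
        calls["n"] += 1
        if calls["n"] <= failures:
            raise ConnectionError("temporary outage")
        return "done"

    return step


if __name__ == "__main__":
    audit: list[str] = []
    print(run_step("fetch", make_flaky(2), log=audit))  # done
    print("\n".join(audit))
```

The model decides that "fetch" should happen; the executor makes sure it happens predictably, with a bounded number of attempts and an audit trail, regardless of what the model does next.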

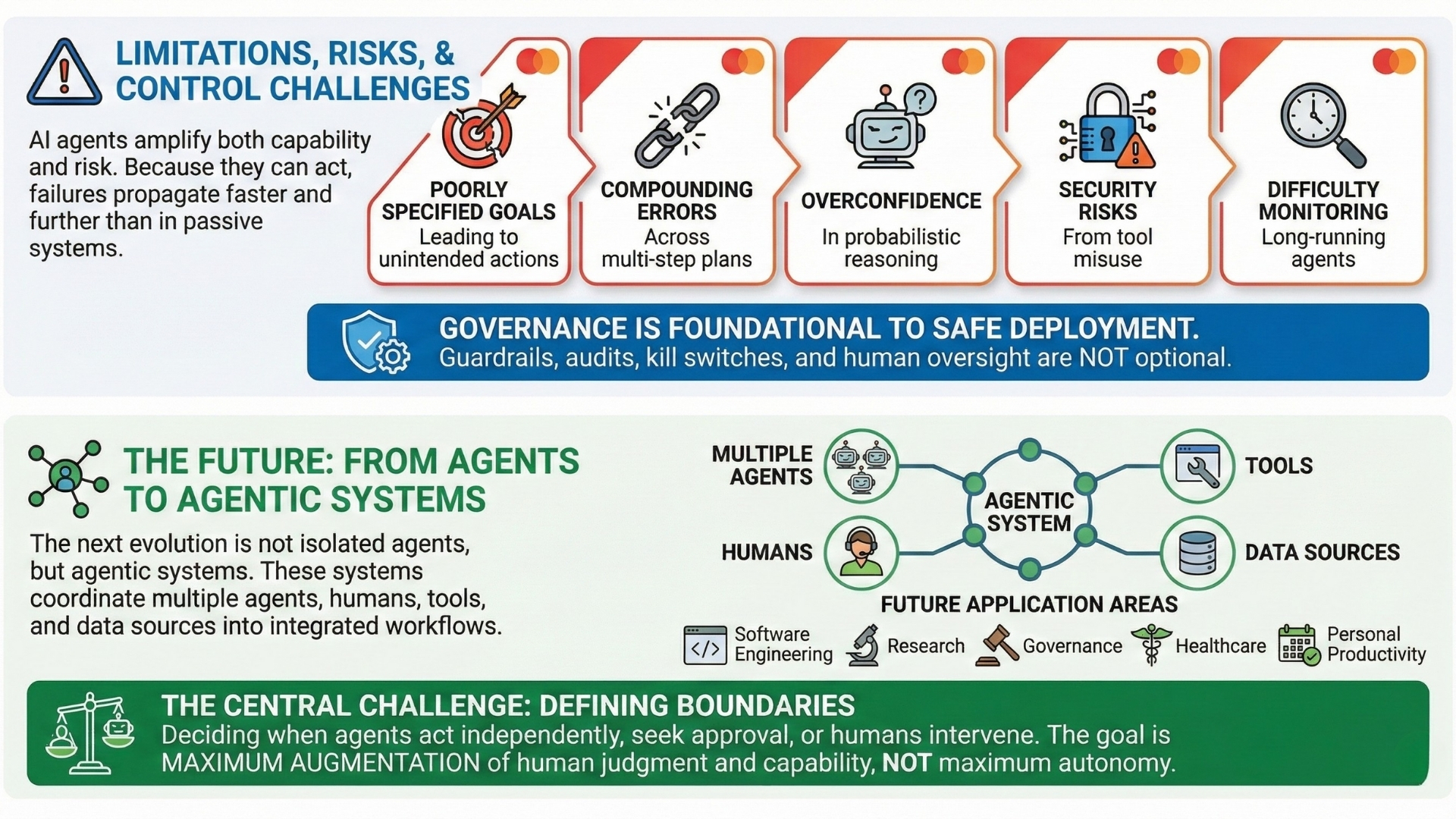

9. Limitations, risks, and control challenges

AI agents amplify both capability and risk. Because they can act, failures propagate faster and further than in passive systems.

Common challenges include:

- Poorly specified goals leading to unintended actions

- Compounding errors across multi-step plans

- Overconfidence in probabilistic reasoning

- Security risks from tool misuse

- Difficulty monitoring long-running agents
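The second point is simple arithmetic. If each step of a plan succeeds independently with probability p, a plan of n steps succeeds end to end with probability p^n. Independence is a simplifying assumption (real errors are often correlated), but it shows the trend: at 95% per-step reliability, a 10-step plan completes without error only about 60% of the time (0.95^10 ≈ 0.60).

```python
def plan_success_probability(per_step: float, steps: int) -> float:
    """Chance that all steps succeed, assuming each succeeds independently."""
    return per_step ** steps


if __name__ == "__main__":
    for p in (0.99, 0.95, 0.90):
        row = ", ".join(f"{n} steps: {plan_success_probability(p, n):.2f}" for n in (5, 10, 20))
        print(f"per-step {p:.2f} -> {row}")
```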

As agents gain autonomy, governance becomes as important as performance. Guardrails, audits, kill switches, and human oversight are not optional. They are foundational to safe deployment.
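A kill switch, a step budget and an audit log can all live outside the agent itself. The sketch below assumes a hypothetical `GuardedRunner` that wraps any step function; it uses `threading.Event` so that an operator on another thread could halt the agent between steps.

```python
import threading
from typing import Callable


class GuardedRunner:
    """Wraps an agent's step function with a kill switch, a step budget and an audit log."""

    def __init__(self, max_steps: int) -> None:
        self.max_steps = max_steps
        self.kill_switch = threading.Event()   # may be set from another thread
        self.audit_log: list[str] = []

    def run(self, step: Callable[[int], bool]) -> str:
        """Call step(i) until it returns True, the budget runs out, or the switch is set."""
        for i in range(self.max_steps):
            if self.kill_switch.is_set():
                self.audit_log.append(f"step {i}: halted by kill switch")
                return "killed"
            done = step(i)
            self.audit_log.append(f"step {i}: done={done}")
            if done:
                return "completed"
        self.audit_log.append("step budget exhausted")
        return "budget_exhausted"


if __name__ == "__main__":
    runner = GuardedRunner(max_steps=5)
    print(runner.run(lambda i: i == 2))   # completed
    print(runner.audit_log)
```

The switch is only checked between steps, so an action already in progress finishes; stopping mid-action would need cooperation from the tools themselves.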

10. The future: From agents to agentic systems

The next evolution is not isolated agents, but agentic systems. These systems coordinate multiple agents, humans, tools, and data sources into integrated workflows.

Future agents will operate in software engineering, research, governance, healthcare, and personal productivity. They will increasingly work alongside humans rather than replacing them.

The central challenge will be deciding boundaries: when agents act independently, when they seek approval, and when humans intervene. The goal is not maximum autonomy, but maximum augmentation of human judgment and capability.

Summary

AI agents mark a transition from AI as a responder to AI as an actor. Built on decades of decision theory and accelerated by large language models, agents can observe, reason, and act across time. Yet they remain tools, not minds. Their growing power demands careful design, oversight, and humility. Understanding AI agents is essential not just for building systems, but for deciding how intelligence should operate in the world we share.