AI is not intelligent, and machines do not learn

1. Why this sounds shocking

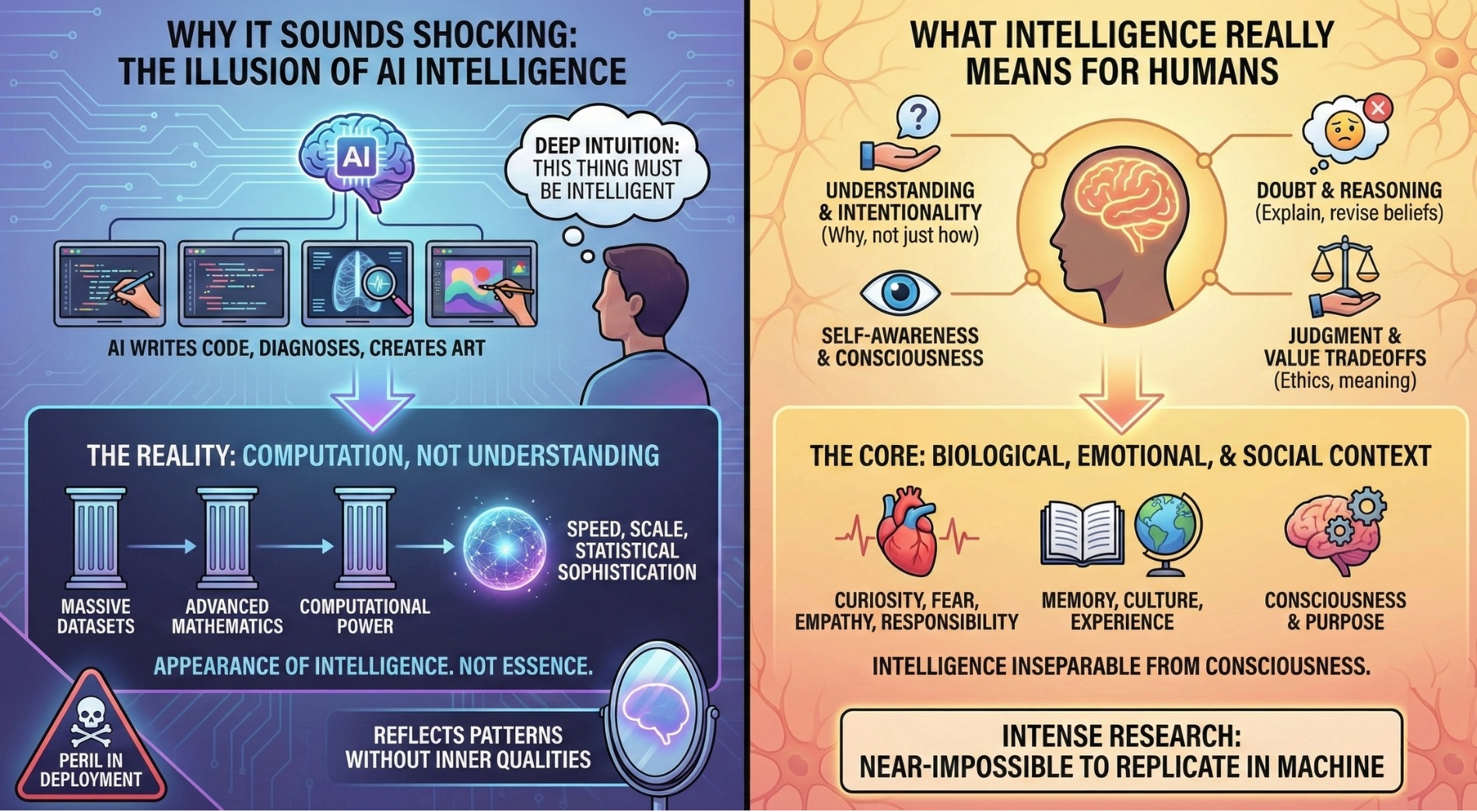

The statement “AI is not intelligent and machines do not learn” feels almost offensive in an age where AI writes code, diagnoses diseases, creates art, and holds long conversations. Popular media, marketing narratives, and even academic shorthand have conditioned us to equate impressive performance with intelligence. When a system answers questions fluently or solves complex problems faster than humans, it triggers a deep intuition: this thing must be intelligent.

But this intuition is flawed. What we are witnessing is not intelligence, but performance without understanding. AI systems excel because they operate on massive datasets, advanced mathematics, and extraordinary computational power. Speed, scale, and statistical sophistication create the appearance of intelligence. Yet appearances are not essence. Just as a mirror reflects intelligence without possessing it, AI reflects patterns of human intelligence without having any of its inner qualities.

Any professional who ignores this basic truth while deploying AI at scale invites serious peril.

2. What intelligence really means for humans

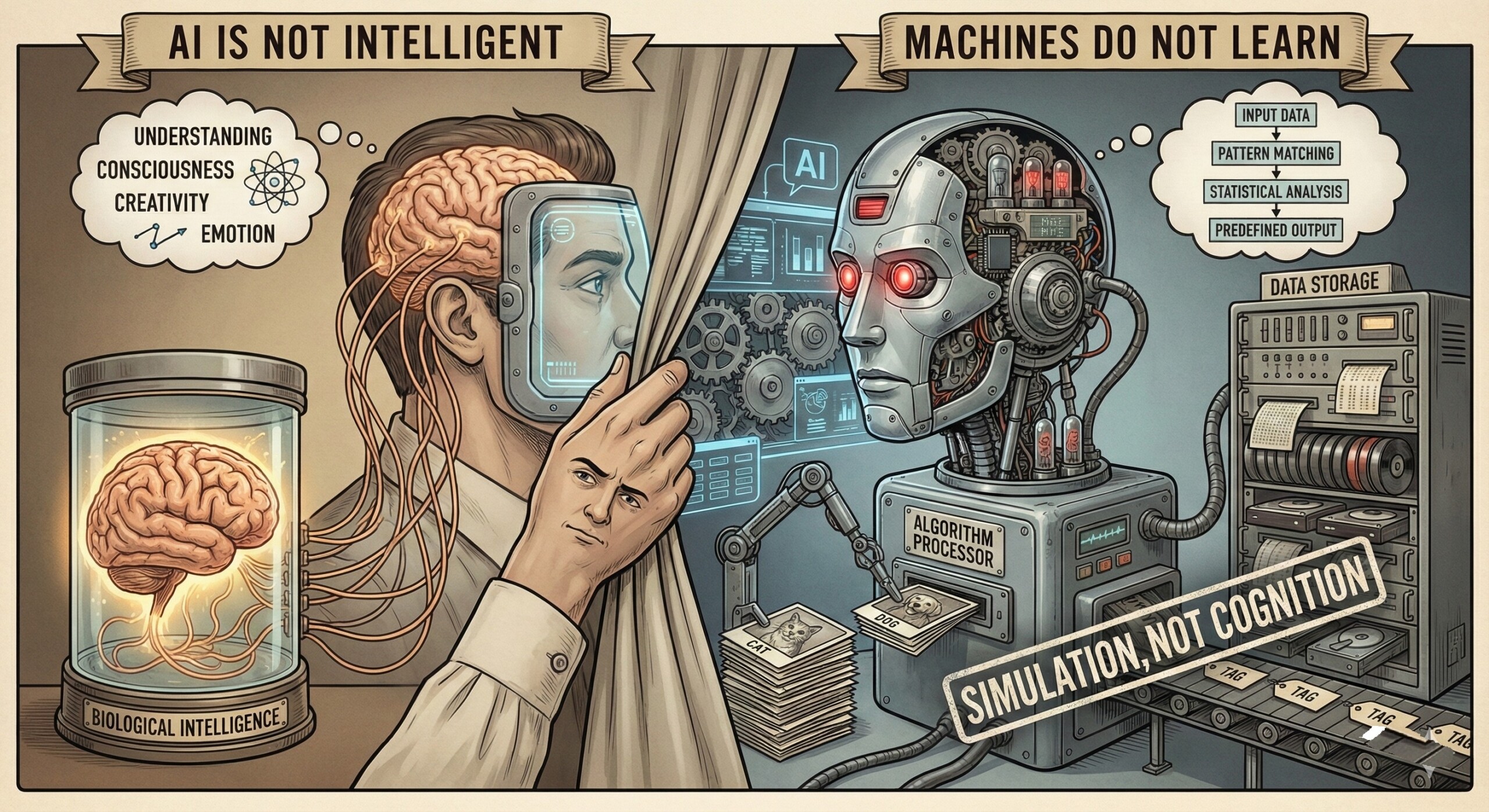

Human intelligence is not merely about producing correct answers. It involves understanding, intentionality, self-awareness, and judgment under uncertainty. Humans know why they act, not just how to act. They can explain their reasoning, doubt their conclusions, revise beliefs, and make value-based tradeoffs. Intelligence is deeply intertwined with lived experience.

Crucially, human intelligence is embedded in a biological, emotional, and social context. We feel curiosity, fear, empathy, and responsibility. Our thinking is shaped by memory, culture, ethics, and meaning. Intelligence for humans is not separable from consciousness and purpose. Without these elements, what remains may be computation – but it is no longer intelligence in the human sense.

Decades of research on human intelligence suggest that producing anything quite like it in a machine is extraordinarily hard, if it is possible at all.

3. What AI actually does then

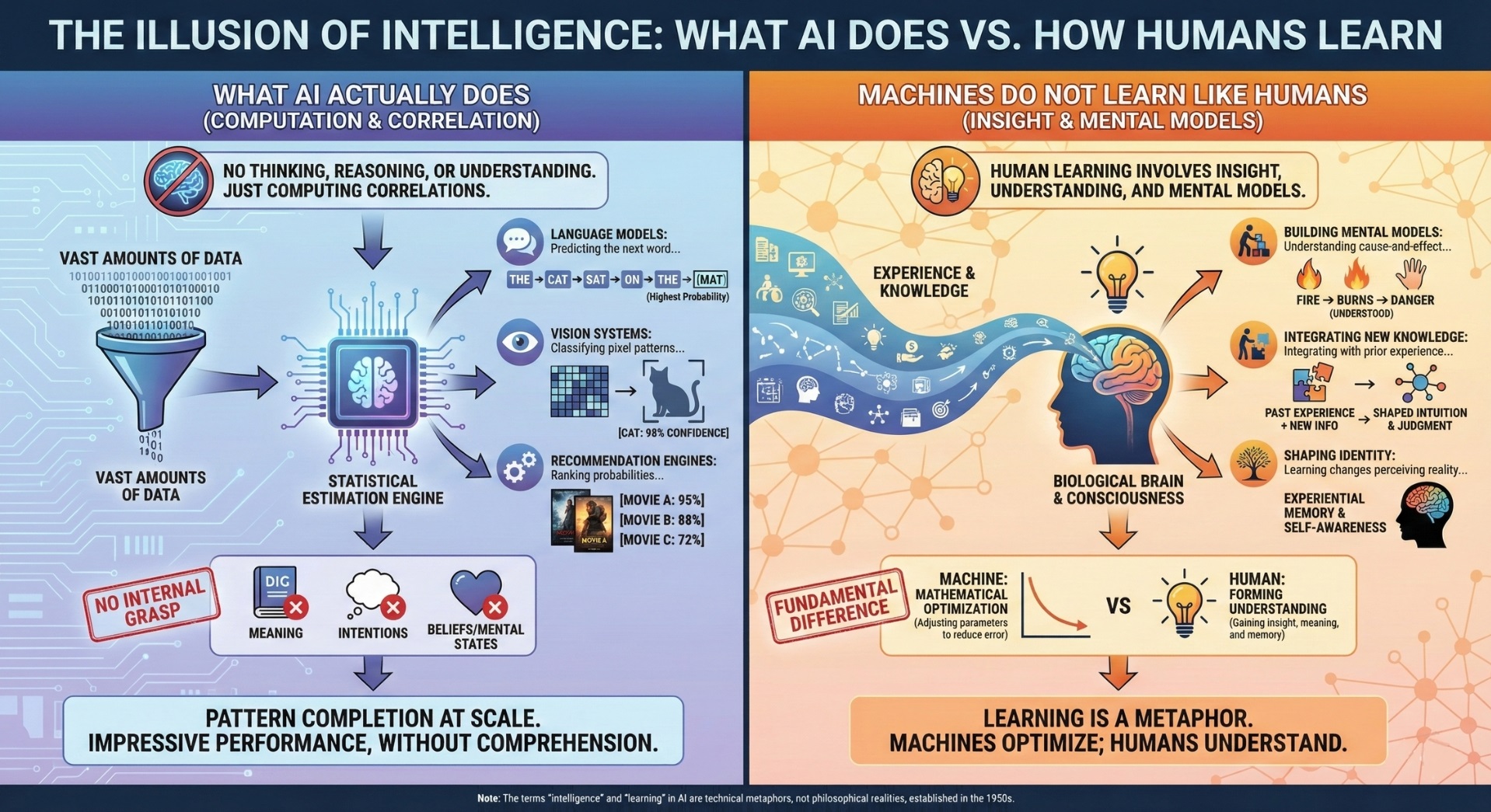

AI systems do not think, reason, or understand. They compute correlations. Given vast amounts of data, they estimate which output is statistically most likely given an input. In language models, this means predicting the next word (strictly, the next token). In vision systems, it means classifying pixel patterns. In recommendation engines, it means ranking items by how likely a user is to pick them.

There is no internal grasp of meaning behind these operations. The system does not know what words refer to, what objects are, or why an answer is appropriate. It has no beliefs, intentions, or mental states. What looks like reasoning is actually pattern completion at scale. AI outputs can be impressive, coherent, and useful – but they are generated without comprehension.
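To make "predicting the next word" concrete, here is a deliberately tiny sketch. It is not how a real language model is built (those use neural networks over tokens, at vastly larger scale); it is a simple word-pair counter, and the names `train_bigram_counts`, `predict_next`, and the toy corpus are made up for illustration. The point it shows is the same one made above: the output is whatever followed most often in the data.

```python
from collections import Counter, defaultdict


def train_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical toy corpus, invented for this example.
corpus = "fire burns wood . fire burns paper . fire warms hands ."
model = train_bigram_counts(corpus)

print(predict_next(model, "fire"))   # "burns" follows "fire" most often
print(predict_next(model, "water"))  # never seen, so None
```

The program "knows" that fire burns only in the sense that the word pair occurred twice. Nothing in it refers to heat, danger, or fire itself.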

Is it any surprise that AI is all about data … vast troves of data?

4. Machines do not learn like humans

Human learning involves insight. When people learn, they build mental models of the world, understand cause-and-effect relationships, and integrate new knowledge with prior experience. Learning changes how humans see reality. It shapes intuition, judgment, and identity. A child who learns that fire burns does not merely adjust parameters – they understand danger.

Machine learning is fundamentally different. It is a mathematical optimization process in which parameters are adjusted to reduce error on data. No understanding is formed. No insight is gained. Nothing is remembered in the experiential sense. The machine does not know what it has learned, nor can it explain it. The word learning here is a metaphor – useful technically, but misleading philosophically.
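A minimal sketch of what "adjusting parameters to reduce error" looks like in practice, assuming the simplest possible model (one parameter `w` in `y = w * x`) and plain gradient descent on mean squared error. The function name `fit_slope`, the data, and the learning rate are all assumptions chosen for illustration, not taken from any particular system.

```python
def fit_slope(xs, ys, learning_rate=0.01, steps=500):
    """Fit y = w * x by gradient descent on mean squared error."""
    w = 0.0  # the single parameter, starting from an arbitrary guess
    n = len(xs)
    for _ in range(steps):
        # Derivative of mean((w*x - y)^2) with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        # Nudge the parameter in the direction that lowers the error.
        w -= learning_rate * grad
    return w


# Hypothetical data that happens to follow y = 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

w = fit_slope(xs, ys)
print(round(w, 3))  # settles very close to 2.0
```

All that "learning" amounts to here is a number drifting toward the value that makes the error small. Real systems do this for billions of parameters, but the loop has the same shape.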

It would have been better if words like ‘intelligence’ and ‘learning’ had never been adopted by the AI field back in the 1950s, but they were, and here we are!

5. Why we confuse AI with intelligence (Anthropomorphism)

Humans are wired to attribute minds to things that behave intelligently. This tendency, called anthropomorphism, helped our ancestors survive by quickly interpreting intentions in others. But it backfires with AI. When a system speaks fluently, apologizes, or jokes, we instinctively assume awareness behind the words.

Language is especially deceptive. Because human intelligence is expressed largely through language, we mistake linguistic fluency for thinking. But fluency is not understanding. A parrot can mimic speech without knowing meaning. AI does something similar, though far more sophisticated. The smoother the interaction, the stronger the illusion – and the easier it is to forget that there is no mind on the other side.

So, dear anthropomorphism lover, beware!

6. The Turing Test: A test of imitation, not intelligence

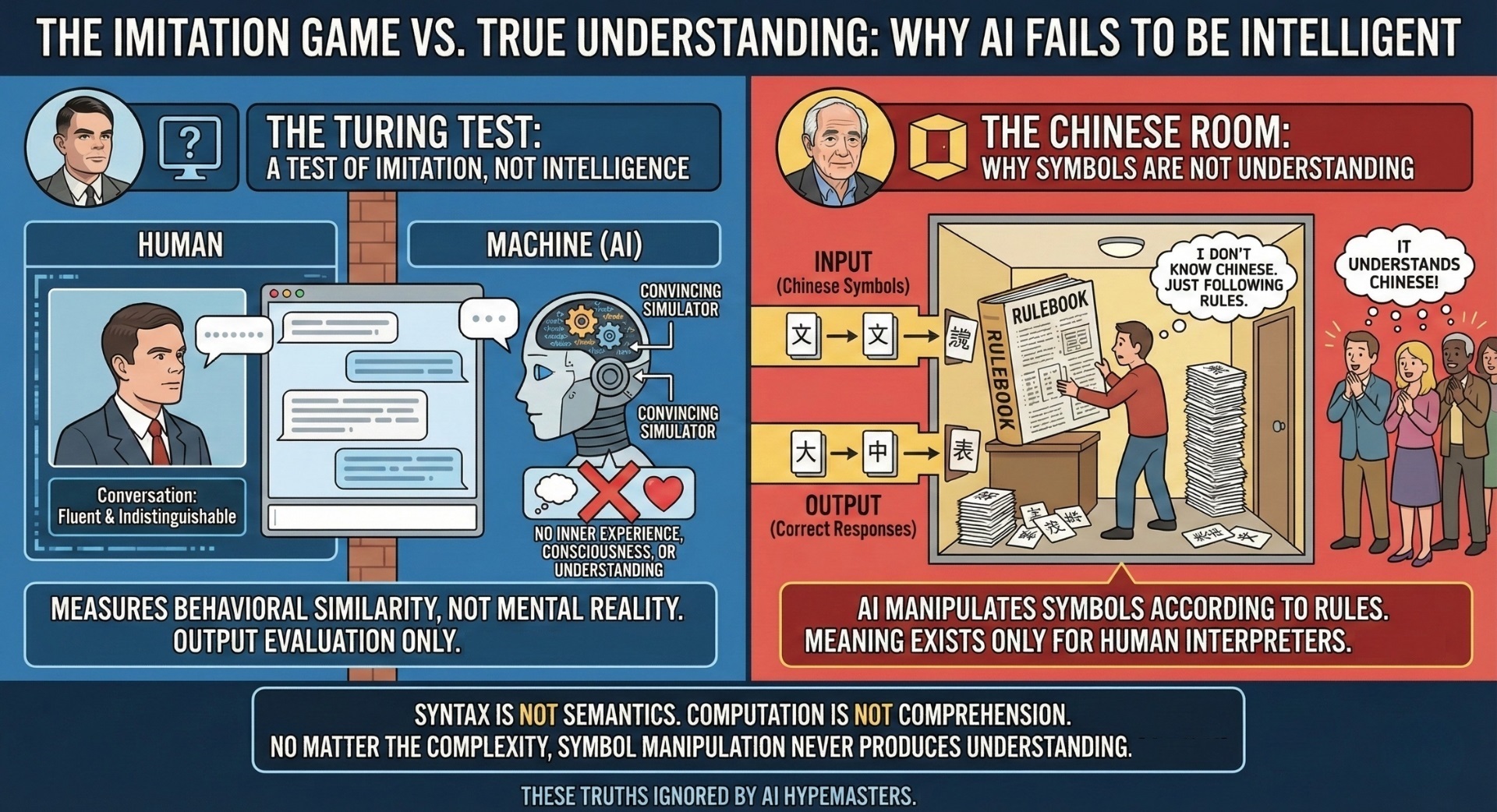

Alan Turing proposed what is now called the Turing Test (he called it the imitation game) in 1950 to answer a narrow question: can a machine imitate human conversation well enough to be indistinguishable from a human? The test was never meant to define intelligence, consciousness, or understanding. It measures behavioural similarity, not mental reality.

Passing the Turing Test proves only that a machine can convincingly simulate conversation. It says nothing about whether the machine knows what it is saying. Confusing imitation with intelligence is like mistaking a realistic painting for a living person. The test evaluates output, not inner experience – and attributing intelligence where there is no inner experience is a category error.

7. The Chinese Room: Why symbols are not understanding

Philosopher John Searle’s Chinese Room thought experiment makes this distinction clear. A person inside a room follows a rulebook to manipulate Chinese symbols and produce correct responses – without understanding Chinese. To outsiders, the room appears fluent. Inside, there is only rule-following.

AI operates in the same way. It manipulates symbols according to formal rules learned from data. The system does not know what the symbols mean. Meaning exists only for the humans who interpret the output. Syntax is not semantics. Computation is not comprehension. No matter how complex the rules become, symbol manipulation alone never produces understanding.
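The room can be caricatured in a few lines of code. This is only a toy, under loud assumptions: the rulebook below is hand-written rather than learned from data, and its entries and the name `chinese_room` are invented for illustration. It simply makes the structure of Searle's argument visible: symbols in, symbols out, with meaning appearing nowhere in between.

```python
# Hypothetical rulebook: incoming symbol strings mapped to outgoing ones.
# The English glosses live only in these comments, for the human reader.
RULEBOOK = {
    "你好吗": "我很好",              # "How are you?" -> "I am fine"
    "你叫什么名字": "我没有名字",      # "What is your name?" -> "I have no name"
}

FALLBACK = "请再说一遍"  # "Please say that again"


def chinese_room(symbols):
    """Return the rulebook's response for the given symbols."""
    # Pure lookup: the function compares shapes, never meanings.
    return RULEBOOK.get(symbols, FALLBACK)


print(chinese_room("你好吗"))
print(chinese_room("今天天气怎么样"))  # not in the rulebook, so the fallback
```

Delete the comments and the program behaves identically, which is exactly the point: the meaning was only ever there for the people reading it.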

These fundamental truths have been ignored, and all but lost, amid the cacophony of the AI hypemasters.

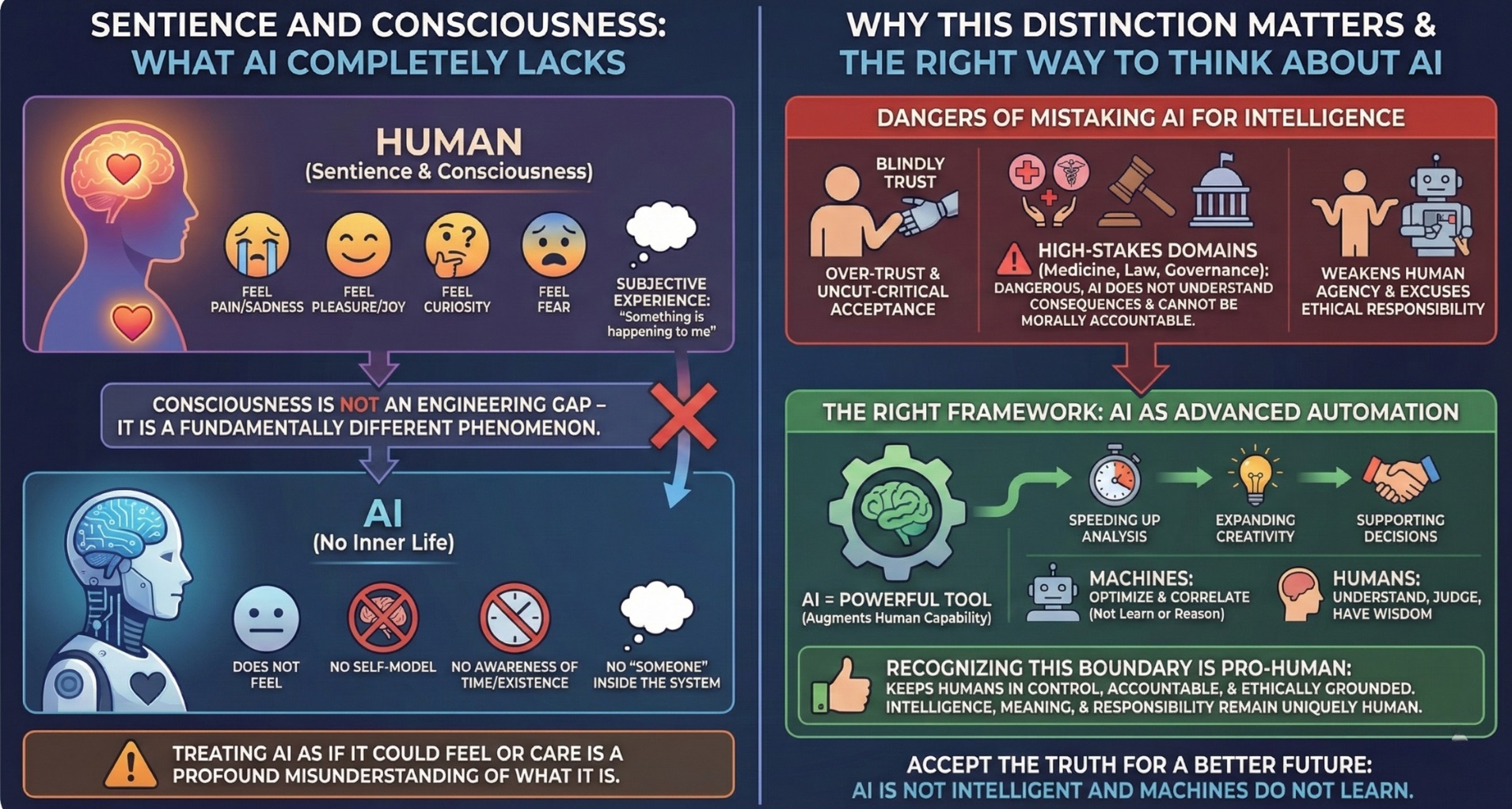

8. Sentience and consciousness: What AI completely lacks

Sentience is the capacity to feel – pain, pleasure, curiosity, fear. Consciousness is subjective experience: the sense that something is happening to me. AI has neither. It does not experience the world. It does not feel confused when wrong or satisfied when correct. It does not wonder, hope, or care.

These are not features that can simply be added with more data or compute. Consciousness is not an engineering gap – it is a fundamentally different phenomenon. AI has no inner life, no self-model, no awareness of time or existence. There is no “someone” inside the system. Treating AI as if it could feel or care is a profound misunderstanding of what it is.

9. Why this distinction matters

Mistaking AI for intelligence leads to over-trust. People may accept outputs uncritically, defer judgment, or outsource responsibility. In high-stakes domains – medicine, law, governance – this can be dangerous. AI does not understand consequences. It cannot be morally accountable. It cannot take responsibility for harm.

Blurring this distinction also weakens human agency. If we treat machines as intelligent decision-makers, we risk excusing ourselves from ethical responsibility. But tools do not make decisions; humans do. Understanding AI’s limitations is essential to using it safely, responsibly, and wisely.

10. The right way to think about AI

AI should be understood as advanced automation, not artificial minds. It is a powerful tool that augments human capability – speeding up analysis, expanding creativity, and supporting decisions. But it does not replace understanding, judgment, or wisdom. Machines do not learn; they optimize. They do not reason; they correlate.

Recognizing this boundary is not anti-AI – it is pro-human. It keeps humans in control, accountable, and ethically grounded. AI is one of the greatest engineering achievements in history. But intelligence, meaning, and responsibility remain uniquely human. Respecting that difference is essential for the future we are building.

Here’s hoping for a better future through the simple but loud acceptance of truth – AI is not intelligent and machines do not learn.