AI Ethics, Governance & Responsible AI Careers – Fairness, bias, risk, and compliance roles

Artificial intelligence is no longer confined to labs or pilot projects. It is embedded in hiring systems, credit decisions, healthcare workflows, policing tools, welfare delivery, and national infrastructure. As AI’s influence expands, so do its social, legal, and ethical consequences. This has given rise to a critical set of roles focused on AI ethics, governance, and responsibility.

These roles are not philosophical add-ons. They exist at the intersection of technology, law, policy, and society, shaping how AI systems are designed, deployed, monitored, and corrected. Understanding these careers is essential for organizations that want AI systems that are not only powerful, but legitimate, trustworthy, and lawful.

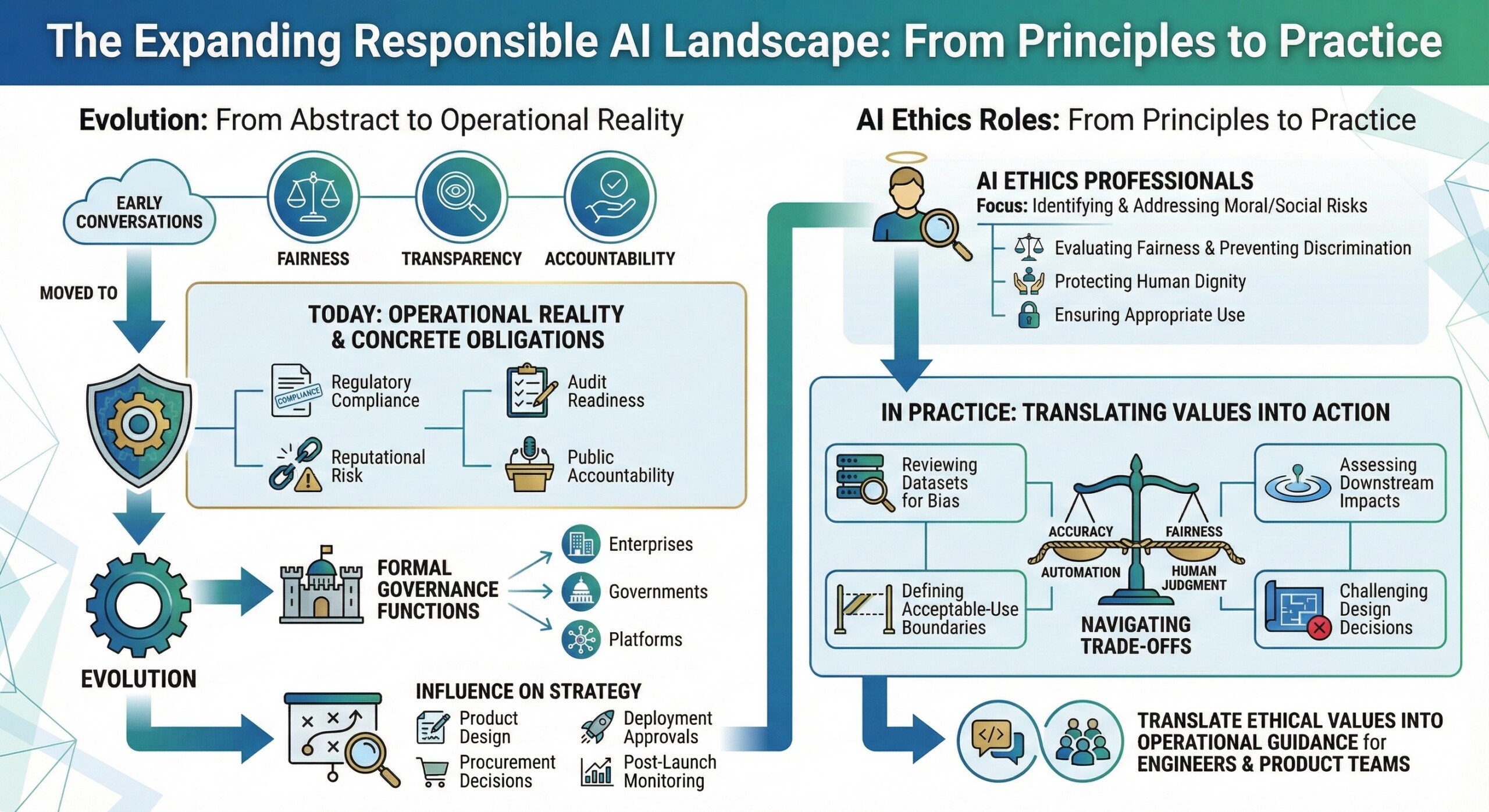

1. The expanding responsible AI landscape

Early conversations about AI ethics focused on abstract principles: fairness, transparency, accountability. Today, these concerns have moved firmly into operational reality.

Organizations now face concrete pressures: regulatory compliance, audit readiness, reputational risk, and public accountability. As a result, responsible AI has evolved from thought leadership into formal governance functions embedded within enterprises, governments, and platforms.

Ethics and governance roles increasingly influence product design, procurement decisions, deployment approvals, and post-launch monitoring. They are becoming central to AI strategy, not peripheral oversight.

2. AI Ethics roles: From principles to practice

AI Ethics professionals focus on identifying and addressing moral and social risks in AI systems. Their work includes evaluating fairness, preventing discrimination, protecting human dignity, and ensuring appropriate use.

In practice, this involves reviewing datasets for bias, assessing downstream impacts, defining acceptable-use boundaries, and challenging design decisions that may cause harm. Ethics roles require navigating trade-offs – accuracy versus fairness, automation versus human judgment.

These professionals translate ethical values into operational guidance that engineers and product teams can implement.

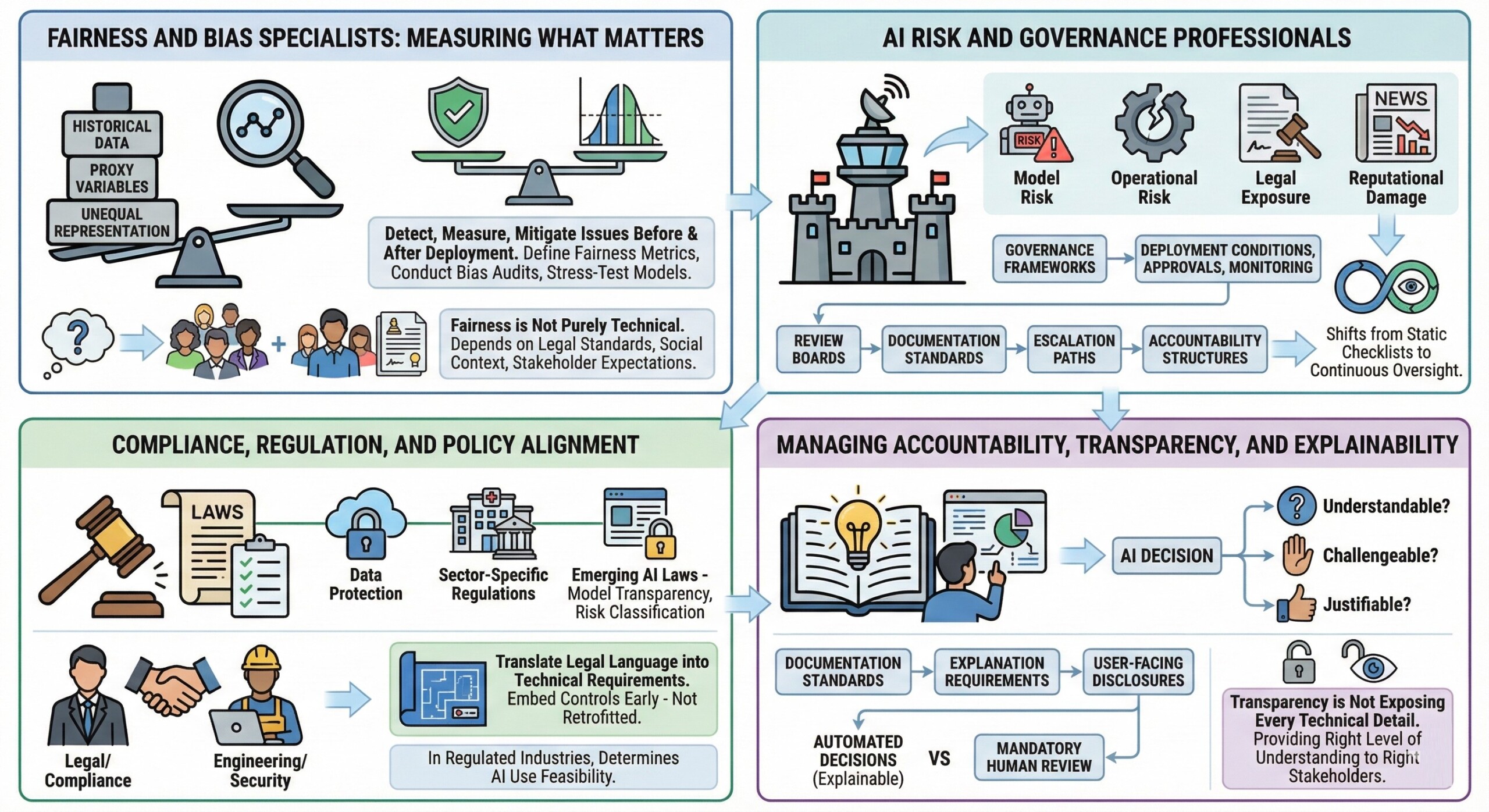

3. Fairness and bias specialists: Measuring what matters

Bias in AI systems often emerges from historical data, proxy variables, or unequal representation. Fairness specialists focus on detecting, measuring, and mitigating these issues before and after deployment.

Their work includes defining fairness metrics, conducting bias audits, stress-testing models across demographic groups, and recommending corrective actions. Importantly, fairness is not purely technical – it depends on legal standards, social context, and stakeholder expectations.

These roles demand both analytical rigor and contextual judgment, as fairness is rarely a one-size-fits-all concept.
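
To make the idea of a fairness metric concrete, here is a minimal sketch of two commonly used group measures: the demographic parity difference (the gap between the highest and lowest selection rates) and the disparate impact ratio (lowest rate divided by highest). The group labels, the toy predictions, and the function names are assumptions made for illustration, not a standard API.

```python
from collections import defaultdict


def selection_rates(groups, predictions):
    """Return the share of positive predictions (1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, pred in zip(groups, predictions):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(rates):
    """Gap between the highest and lowest group selection rates."""
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else float("nan")


# Toy data (hypothetical): 1 = favourable outcome, 0 = unfavourable.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, predictions)
parity_gap = demographic_parity_difference(rates)
impact_ratio = disparate_impact_ratio(rates)

print(rates, parity_gap, round(impact_ratio, 2))
```

In this toy data, group A is selected 75% of the time and group B 25% of the time, giving a gap of 0.5 and a ratio of about 0.33. Whether such a gap is acceptable is not something the code can answer; that depends on the legal standards and social context described above.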

4. AI risk and governance professionals

AI governance roles focus on managing systemic risk. This includes model risk, operational risk, legal exposure, and reputational damage arising from AI use.

Professionals in this space design governance frameworks that define who can deploy AI, under what conditions, with what approvals, and with what monitoring. They establish review boards, documentation standards, escalation paths, and accountability structures.

As AI systems become more autonomous and interconnected, governance shifts from static checklists to continuous oversight.
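
A governance framework of this kind can be expressed as a simple deployment gate. The sketch below is illustrative only: the risk tiers, approver roles, and field names are hypothetical and would in practice come from an organization's own policy.

```python
from dataclasses import dataclass, field

# Hypothetical policy: which sign-offs each risk tier needs before deployment.
REQUIRED_APPROVALS = {
    "low": {"model_owner"},
    "medium": {"model_owner", "risk_review"},
    "high": {"model_owner", "risk_review", "legal", "ethics_board"},
}


@dataclass
class DeploymentRequest:
    model_name: str
    risk_tier: str
    approvals: set = field(default_factory=set)
    has_model_documentation: bool = False
    has_monitoring_plan: bool = False


def deployment_blockers(request):
    """Return the reasons a request cannot be approved (empty list = cleared)."""
    required = REQUIRED_APPROVALS.get(request.risk_tier)
    if required is None:
        return [f"unknown risk tier: {request.risk_tier}"]

    blockers = []
    missing = sorted(required - request.approvals)
    if missing:
        blockers.append("missing approvals: " + ", ".join(missing))
    if not request.has_model_documentation:
        blockers.append("model documentation missing")
    if not request.has_monitoring_plan:
        blockers.append("monitoring plan missing")
    return blockers


# Example: a high-risk model with only part of its sign-offs in place.
request = DeploymentRequest(
    model_name="credit_scoring_v2",
    risk_tier="high",
    approvals={"model_owner", "risk_review"},
    has_model_documentation=True,
)
blockers = deployment_blockers(request)
for reason in blockers:
    print(reason)
```

Returning a list of reasons rather than a yes/no answer gives the escalation path something to act on, and running the same check again after launch is one way to move from a static checklist toward continuous oversight.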

5. Compliance, regulation, and policy alignment

AI compliance roles ensure systems align with laws, regulations, and industry standards. This includes data protection rules, sector-specific regulations, and emerging AI laws that impose requirements such as model transparency and risk classification.

Compliance professionals translate legal language into technical and organizational requirements. They work closely with legal, security, and engineering teams to ensure controls are embedded early – not retrofitted after deployment.

In regulated industries, these roles often determine whether AI can be used at all.

6. Managing accountability, transparency, and explainability

Responsible AI roles also address explainability – ensuring decisions can be understood, challenged, and justified. This is critical in domains like finance, healthcare, and public services.

Professionals define documentation standards, explanation requirements, and user-facing disclosures. They help determine when automated decisions must be explainable and when human review is mandatory.
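
One way to picture such a rule is as a small routing function that decides, per decision, whether an explanation and a human review are needed. This is a sketch under stated assumptions: the list of high-stakes domains, the confidence floor, and the rule itself are hypothetical placeholders for whatever an organization's policy and applicable law actually require.

```python
from dataclasses import dataclass

# Hypothetical policy values, chosen only for illustration.
HIGH_STAKES_DOMAINS = {"finance", "healthcare", "public_services"}
CONFIDENCE_FLOOR = 0.8


@dataclass
class Decision:
    domain: str
    adverse: bool       # outcome is unfavourable to the person affected
    confidence: float   # model confidence score between 0.0 and 1.0


def oversight_requirements(decision):
    """Apply the assumed policy: explain every high-stakes decision, and send
    high-stakes decisions that are adverse or low-confidence to a human."""
    high_stakes = decision.domain in HIGH_STAKES_DOMAINS
    needs_review = high_stakes and (
        decision.adverse or decision.confidence < CONFIDENCE_FLOOR
    )
    return {
        "explanation_required": high_stakes,
        "human_review_required": needs_review,
    }


loan_denial = oversight_requirements(Decision("finance", adverse=True, confidence=0.95))
ad_ranking = oversight_requirements(Decision("marketing", adverse=False, confidence=0.6))
print(loan_denial)
print(ad_ranking)
```

Under this assumed policy, the loan denial requires both an explanation and a human review, while the ad ranking requires neither.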

Transparency is not about exposing every technical detail. It is about providing the right level of understanding to the right stakeholders.

7. Cross-functional collaboration and institutional trust

Ethics and governance roles are inherently cross-functional. They collaborate with engineers, product managers, data scientists, lawyers, compliance officers, and leadership.

They must explain risks to executives, constraints to developers, and safeguards to regulators and the public. When failures occur, these professionals often lead root-cause analysis and remediation.

Their effectiveness depends as much on communication and credibility as on technical or legal expertise.

8. Skills that define responsible AI professionals

Successful professionals in this domain combine multiple skill sets: systems thinking, ethical reasoning, regulatory literacy, and practical understanding of AI technologies.

Key skills include risk assessment, impact analysis, policy interpretation, stakeholder negotiation, and governance design. Increasingly valuable capabilities include model documentation, audit processes, and monitoring frameworks.

Judgment is critical. Knowing when to slow down, restrict deployment, or say no is as important as enabling innovation.

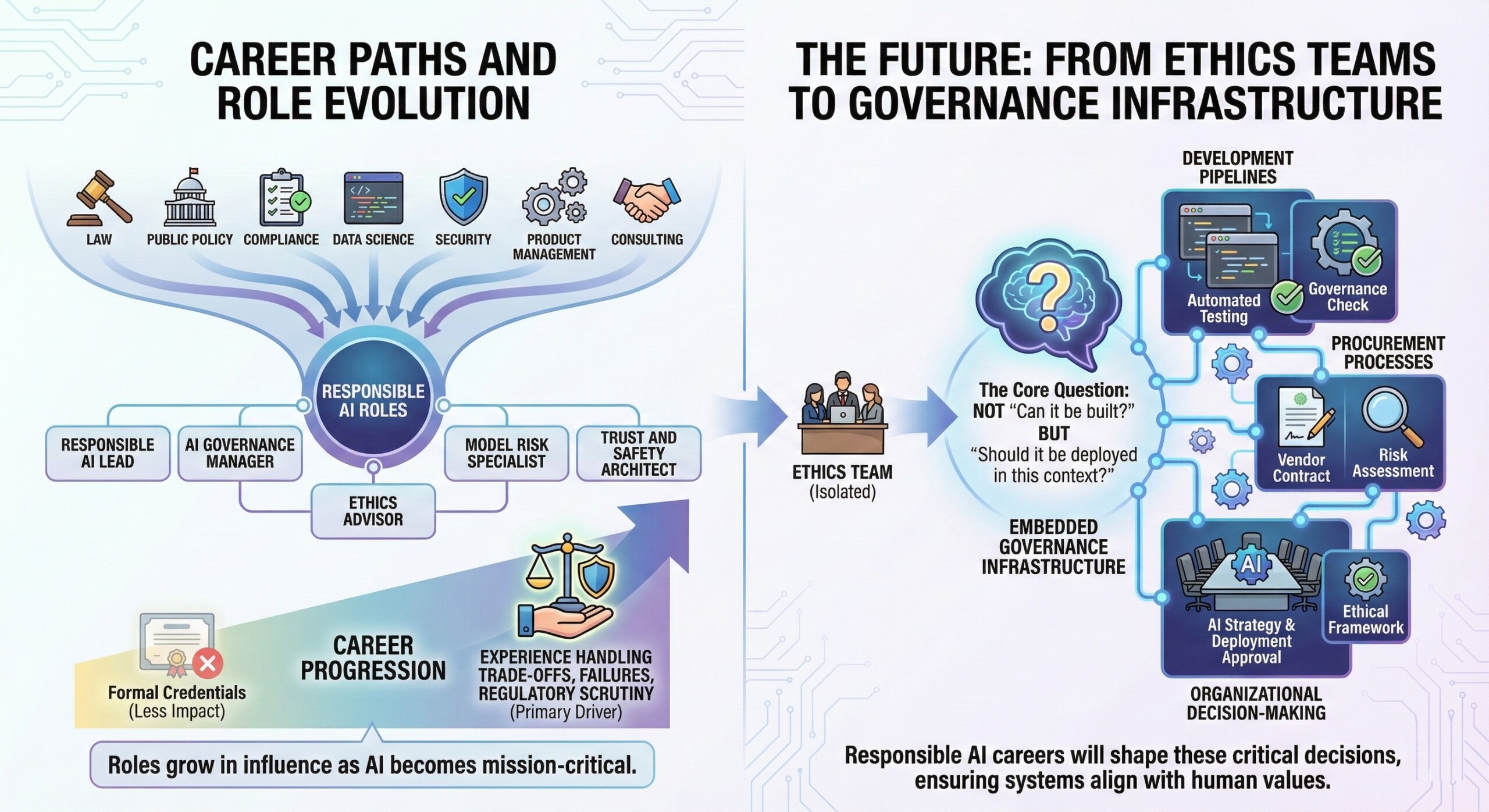

9. Career paths and role evolution

People enter responsible AI roles from law, public policy, compliance, data science, security, product management, and consulting backgrounds. Titles vary widely and include Responsible AI Lead, AI Governance Manager, Ethics Advisor, Model Risk Specialist, and Trust and Safety Architect.

Career progression depends less on formal credentials and more on experience handling real-world trade-offs, failures, and regulatory scrutiny. These roles grow in influence as AI becomes mission-critical.

10. The future: From ethics teams to governance infrastructure

The future of responsible AI lies in institutionalization. Ethics and governance will not remain isolated teams but become embedded infrastructure – built into development pipelines, procurement processes, and organizational decision-making.

As AI systems grow more powerful, the question will not be whether they can be built, but whether they should be deployed in specific contexts. Responsible AI careers will shape those decisions.

Billion Hopes summary

AI Ethics, Governance, and Responsible AI careers are about shaping power responsibly. They ensure that AI systems are fair, lawful, accountable, and worthy of trust. As AI becomes foundational to society, these roles will determine whether technology deepens inequality or supports human progress. The future belongs to professionals who can balance innovation with restraint, capability with care, and automation with accountability.