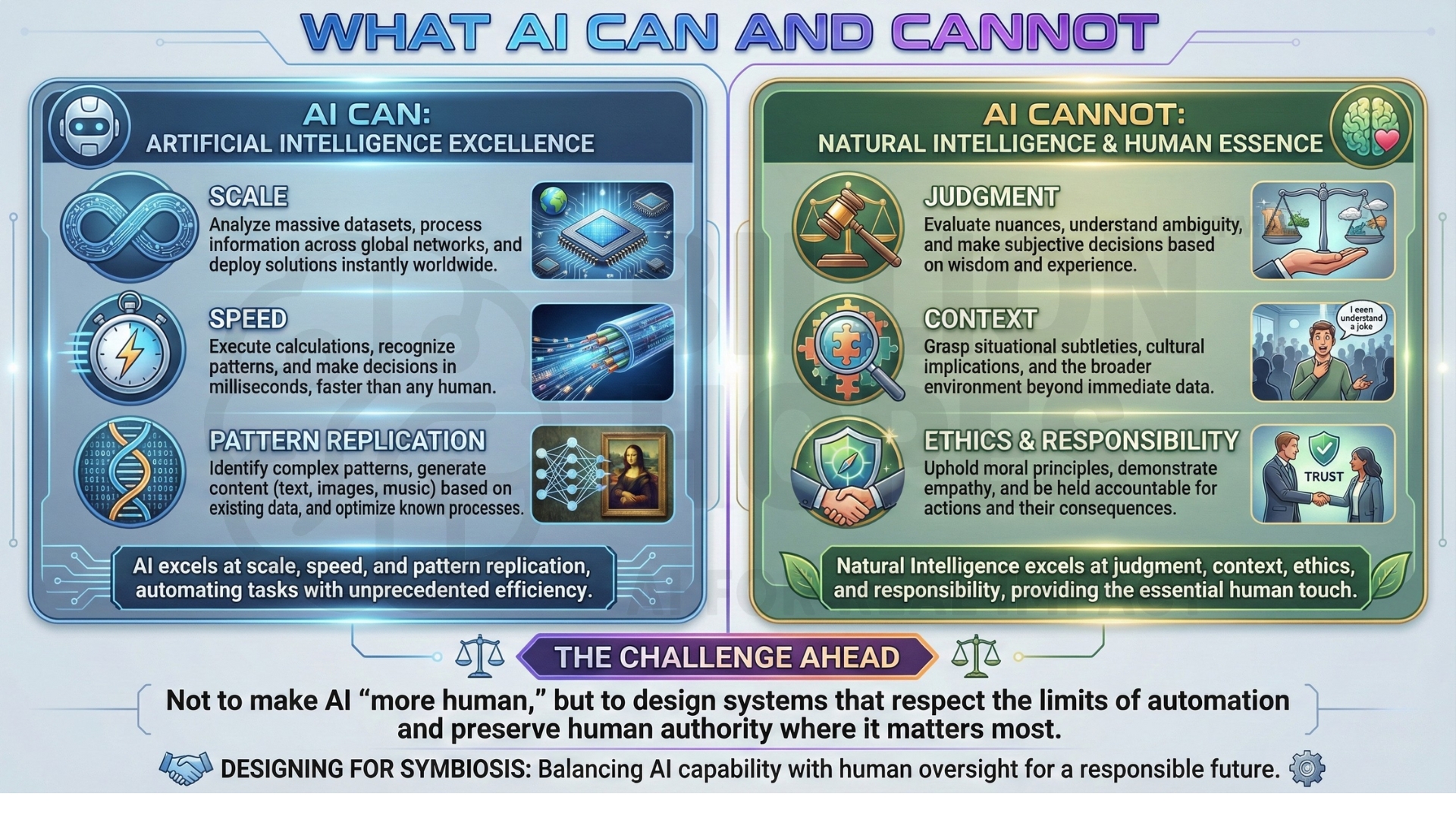

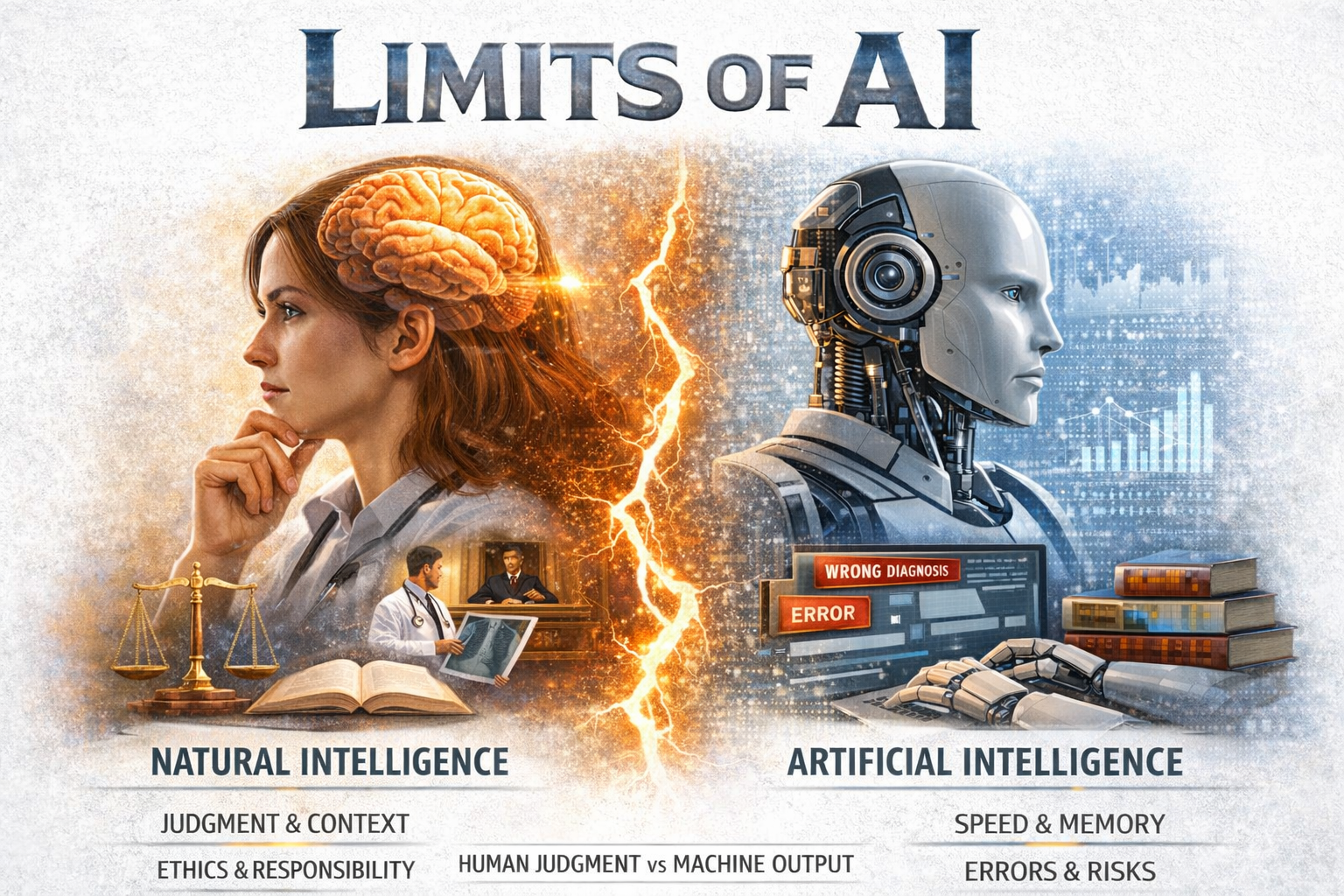

The limits of AI and the relevance of natural intelligence (NI)

Artificial intelligence is increasingly presented as a substitute for human judgment, but recent evidence reveals a deeper truth: AI excels at speed and synthesis, not understanding or responsibility. As generative systems move into high-stakes domains like health, law, and work, their limitations become impossible to ignore. What AI produces with confidence often lacks context, caution, and accountability – the very qualities that define natural intelligence. This is not a story about machines becoming smarter, but about the growing gap between automated output and human judgment, and why that gap matters more now than ever.

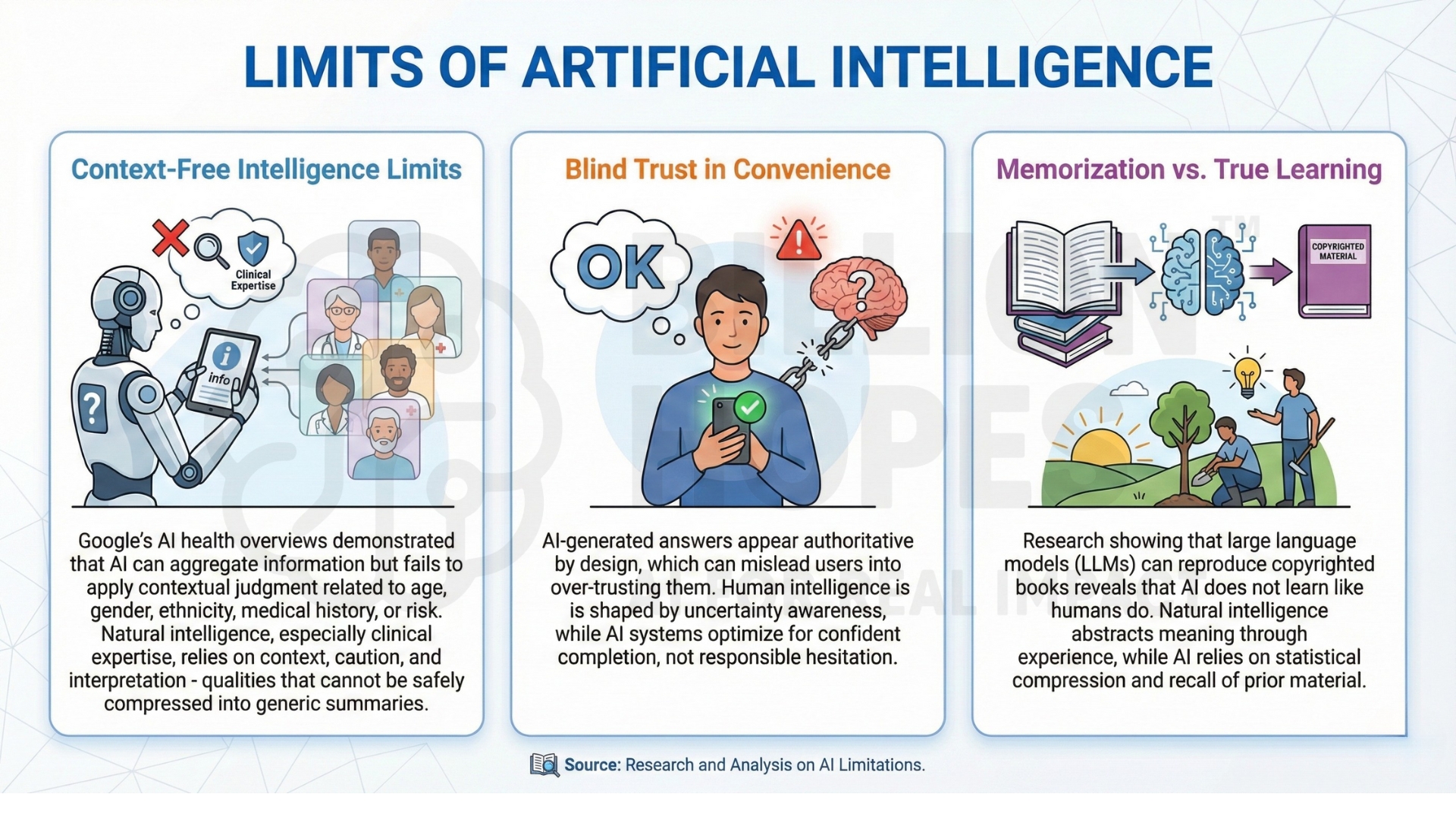

1. AI summaries expose the limits of context-free intelligence

Google’s AI Overviews for health queries showed that AI can aggregate information but fails to apply contextual judgment about a person's age, sex, ethnicity, medical history, or risk. Natural intelligence, especially clinical expertise, relies on context, caution, and interpretation – qualities that cannot be safely compressed into generic summaries.

2. Convenience can override caution when AI is trusted blindly

AI-generated answers appear authoritative by design, which can mislead users into over-trusting them. Human intelligence is shaped by an awareness of uncertainty, while AI systems optimize for confident completion, not responsible hesitation.

3. Memorization undermines the “learning” metaphor

Research showing that large language models (LLMs) can reproduce long passages of copyrighted books reveals that AI does not learn the way humans do. Natural intelligence abstracts meaning through experience, while AI relies on statistical compression and recall of prior material.

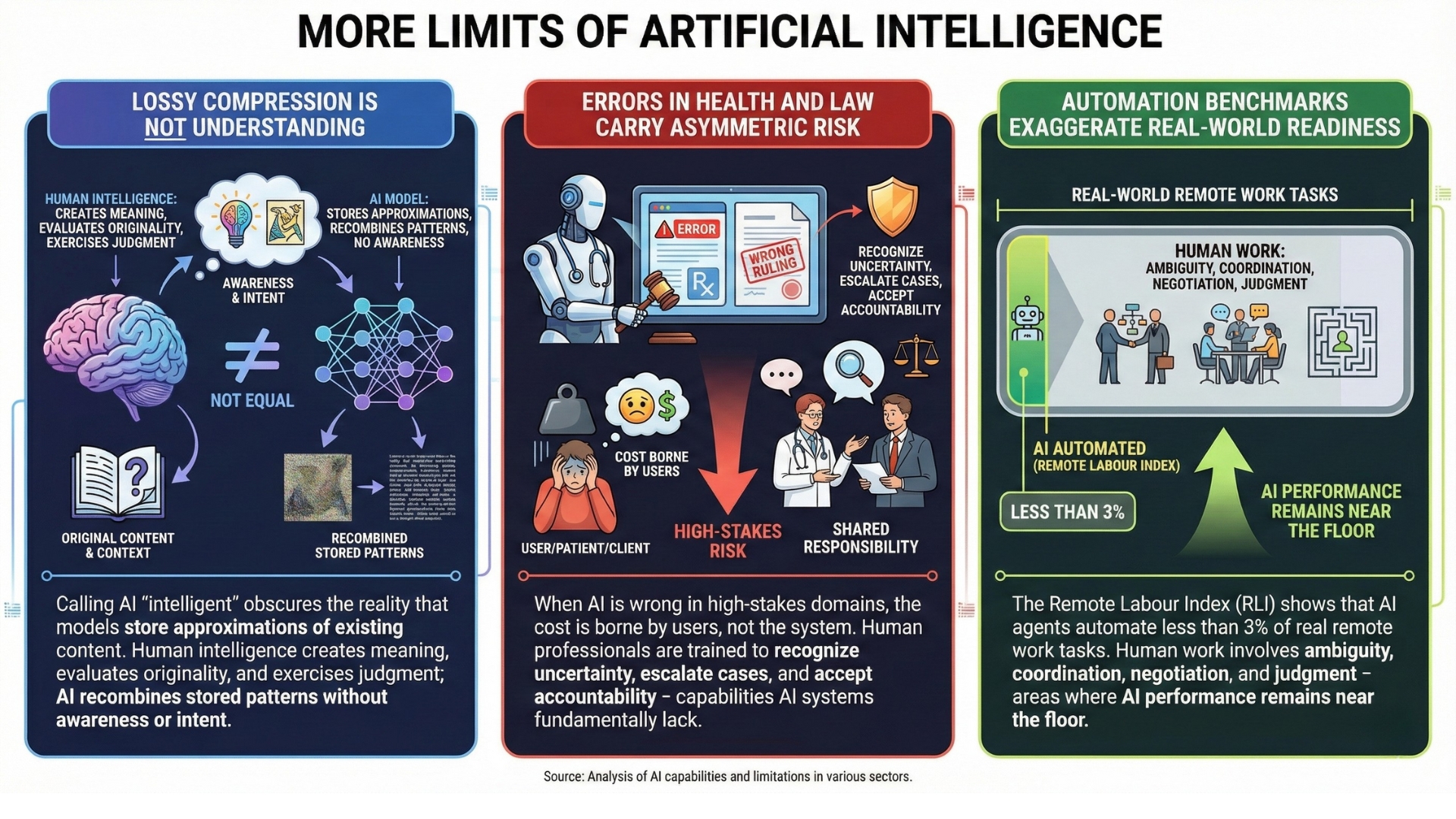

4. Lossy compression is not understanding

Calling AI “intelligent” obscures the reality that models store approximations of existing content. Human intelligence creates meaning, evaluates originality, and exercises judgment; AI recombines stored patterns without awareness or intent.

5. Errors in health and law carry asymmetric risk

When AI is wrong in high-stakes domains, the cost is borne by users, not the system. Human professionals are trained to recognize uncertainty, escalate cases, and accept accountability – capabilities AI systems fundamentally lack.

6. Automation benchmarks exaggerate real-world readiness

The Remote Labor Index (RLI) found that even the best-performing AI agents automated less than 3% of real remote work projects. Human work involves ambiguity, coordination, negotiation, and judgment – areas where AI performance remains close to zero.

7. Scaling intelligence is not the same as deepening intelligence

The diminishing returns from model scaling highlight that bigger models do not necessarily produce better reasoning. Natural intelligence improves through reflection, constraint, and feedback – not just the volume of data.

8. Natural intelligence integrates ethics implicitly

Humans constantly apply moral reasoning, social norms, and professional responsibility without explicit prompts. AI systems require external rules and safeguards to approximate this behaviour, and they still fail in edge cases.

9. Trust is earned through restraint, not capability alone

Human experts know when not to answer, defer, or say “I don’t know.” AI systems are rewarded for always responding, which erodes trust when answers are confidently wrong.

10. The future is augmentation, not replacement

The evidence points toward AI as a powerful assistive tool, not an autonomous decision-maker. Natural intelligence remains essential for interpretation, accountability, ethics, and responsibility – especially where lives, rights, and livelihoods are at stake.

Closing perspective

Artificial intelligence excels at scale, speed, and pattern replication. Natural intelligence excels at judgment, context, ethics, and responsibility. The challenge ahead is not to make AI “more human,” but to design systems that respect the limits of automation and preserve human authority where it matters most.