Enterprise AI Operations & MLOps Careers – Deployment, monitoring, and lifecycle management

Artificial intelligence is no longer experimental inside enterprises. Models are being deployed into core business processes – credit decisions, supply chains, customer engagement, healthcare workflows, and national infrastructure. As AI systems move from notebooks to production environments, a new reality has emerged: building models is only a small part of making AI work. The harder challenge is operating AI reliably over time.

Enterprise AI now lives in environments defined by uptime requirements, regulatory scrutiny, data drift, security risks, and business accountability. Models must be deployed, monitored, retrained, governed, and eventually retired. This shift has given rise to a critical but often overlooked domain: AI Operations and MLOps. Careers in this space determine whether AI becomes a durable organizational capability – or an expensive, fragile experiment.

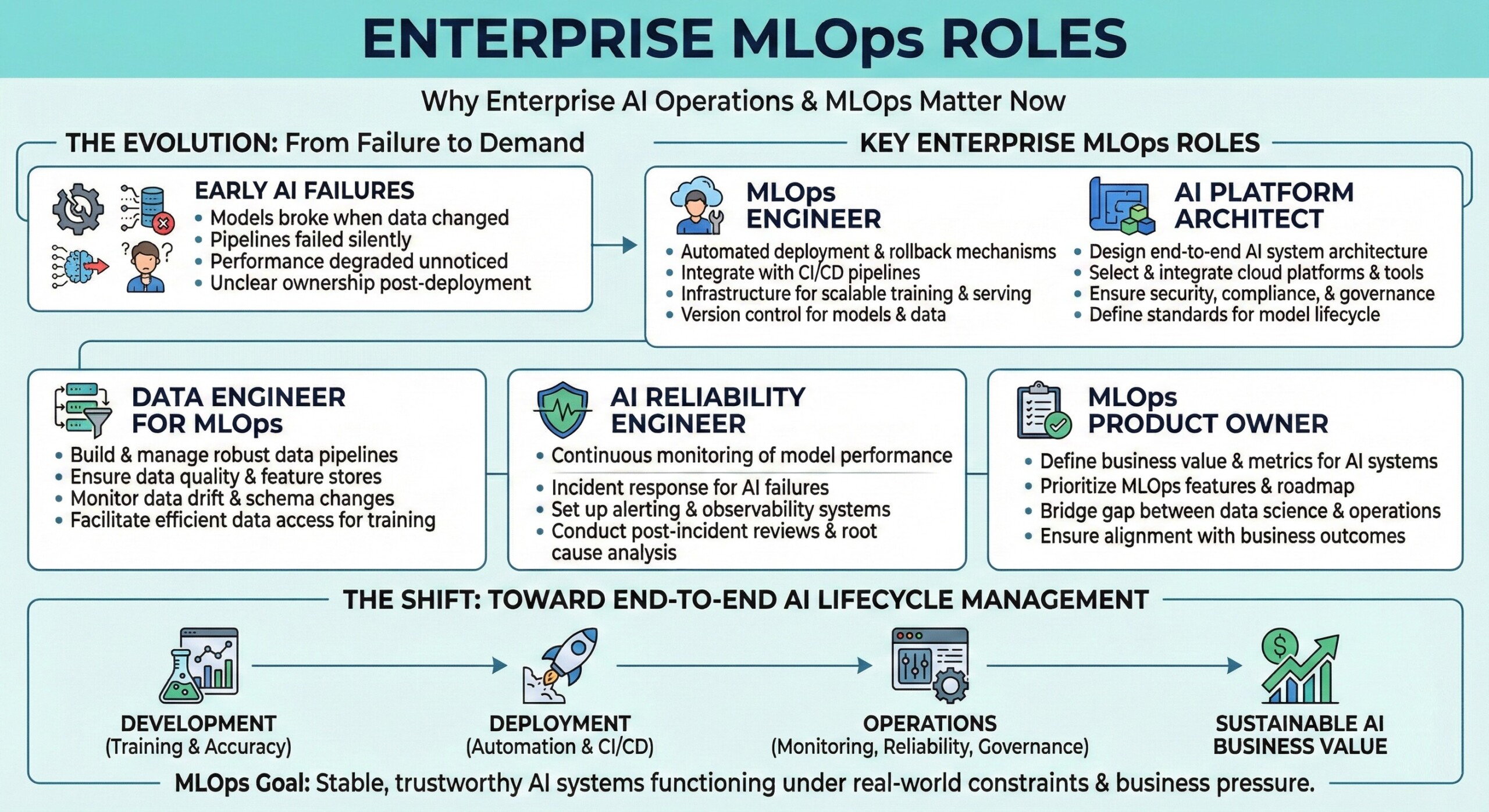

1. Why enterprise AI operations and MLOps roles now matter

Many early AI initiatives failed not because models were inaccurate, but because they could not be sustained. Models broke when data changed, pipelines failed silently, performance degraded unnoticed, and ownership became unclear once systems went live.

Enterprise leaders now ask different questions:

- How do we deploy AI models safely into production?

- How do we detect model drift before business impact occurs?

- Who owns AI systems after deployment?

- How do we audit, update, and retire models responsibly?

- How do we scale AI across teams without chaos?

These questions have created demand for professionals who treat AI as infrastructure, not experimentation. Enterprise AI operations and MLOps careers exist to ensure AI systems remain reliable, observable, compliant, and aligned with business outcomes throughout their lifecycle.

2. From model development to operational AI systems

Traditional AI work focused heavily on training accuracy and benchmarks. Once a model performed well in development, it was often handed off to IT with minimal operational context. This handoff routinely failed.

Modern enterprises are shifting toward end-to-end AI lifecycle management, which includes:

- Automated deployment and rollback mechanisms,

- Continuous monitoring of data quality and model performance,

- Integration with CI/CD pipelines and cloud platforms,

- Controlled retraining and version management,

- Incident response for AI failures.
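
As a rough illustration of the deployment and rollback item above, here is a minimal sketch of a guarded rollout. Everything in it is hypothetical: `ModelRegistry`, `deploy_with_guard`, and the 5% error-rate limit are invented for this example and do not correspond to any particular MLOps product.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ModelRegistry:
    """Toy in-memory registry (hypothetical, for illustration only)."""

    history: List[str] = field(default_factory=list)  # deployed versions, newest last

    def deploy(self, version: str) -> None:
        self.history.append(version)

    @property
    def live(self) -> Optional[str]:
        """Version currently serving traffic, or None if nothing is deployed."""
        return self.history[-1] if self.history else None

    def rollback(self) -> Optional[str]:
        """Retire the newest version, if an older one exists, and return the live one."""
        if len(self.history) > 1:
            self.history.pop()
        return self.live


def deploy_with_guard(registry: ModelRegistry, candidate: str,
                      observed_error_rate: float,
                      max_error_rate: float = 0.05) -> bool:
    """Deploy `candidate`; roll back if its observed error rate exceeds the limit.

    The 0.05 default is an assumed threshold, not a recommendation.
    """
    registry.deploy(candidate)
    if observed_error_rate > max_error_rate:
        registry.rollback()
        return False
    return True
```

In a real system the error rate would come from monitoring a canary or shadow deployment rather than being passed in as an argument, but the control flow is the same: promote, observe, and revert automatically when a limit is breached.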

AI operations professionals sit at the center of this transition. Their goal is not better models, but stable, trustworthy AI systems that function under real-world constraints and business pressure.

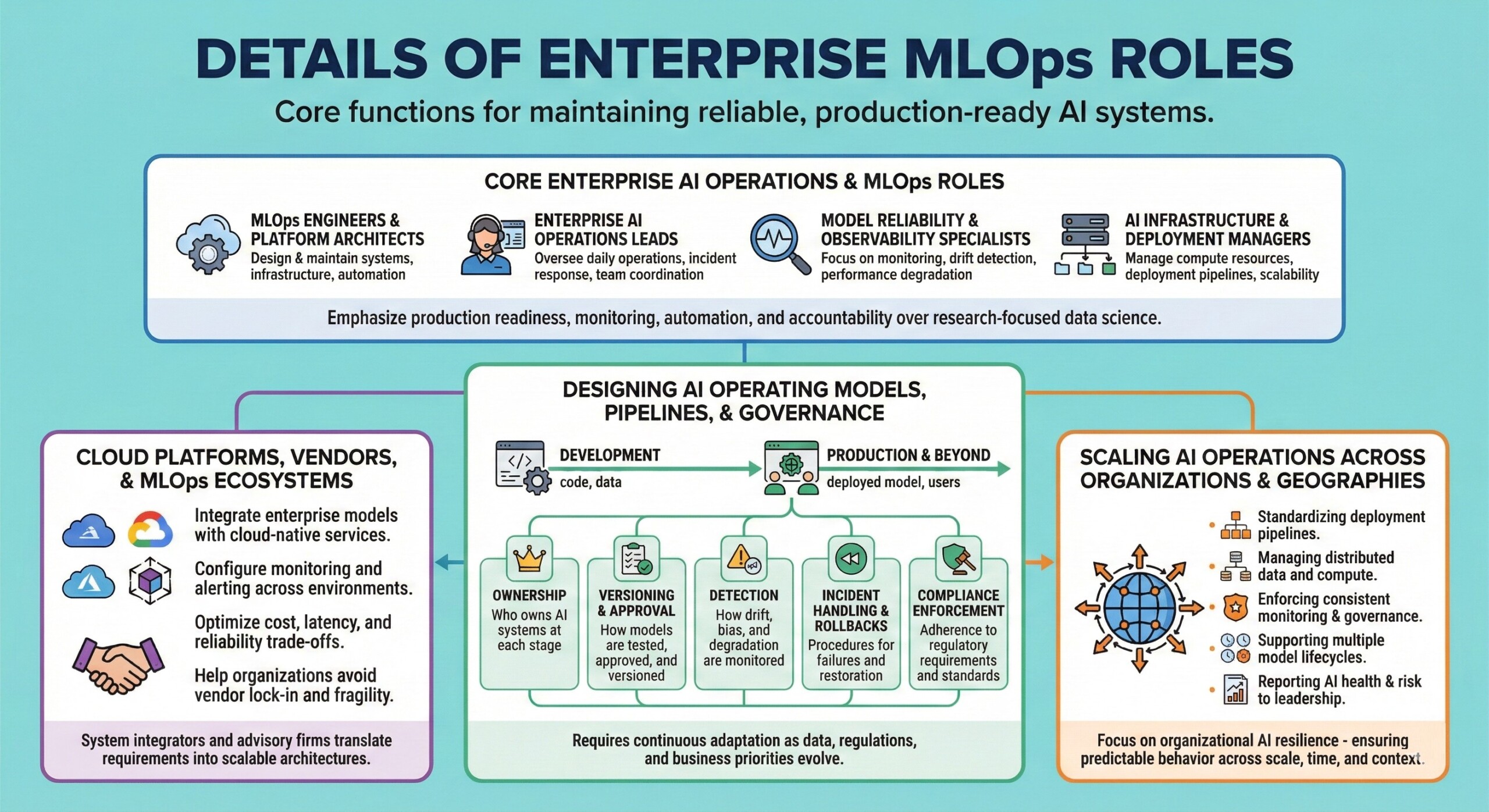

3. Core enterprise AI operations and MLOps roles

Enterprise AI operations roles span engineering, platform teams, data science, governance, and business units. These professionals design and maintain the systems that keep AI running reliably after deployment.

They may work as:

- MLOps engineers and platform architects

- Enterprise AI operations leads

- Model reliability and observability specialists

- AI infrastructure and deployment managers

Unlike research-focused data scientists, these roles emphasize production readiness, monitoring, automation, and accountability. Success requires fluency across software engineering, cloud infrastructure, data pipelines, and AI model behavior – often under uptime and compliance constraints.

4. Designing AI operating models, pipelines, and governance

A central responsibility of AI operations professionals is designing operating models that define how AI systems move from development to production and beyond. This includes decisions about deployment patterns, monitoring thresholds, retraining triggers, and failure handling.
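
One way to make such decisions explicit is to write them down as a small policy object that maps monitored metrics to an action. The sketch below is an assumption-laden example: the `OperatingPolicy` fields, the threshold values, and the `Action` names are all hypothetical and would be set by each organization.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NONE = "none"
    RETRAIN = "retrain"
    ROLLBACK = "rollback"


@dataclass(frozen=True)
class OperatingPolicy:
    """Hypothetical thresholds; real values depend on the use case."""

    min_accuracy: float = 0.90         # below this, schedule retraining
    critical_accuracy: float = 0.80    # below this, roll back immediately
    max_days_since_training: int = 30  # staleness limit that also triggers retraining


def decide(policy: OperatingPolicy, accuracy: float,
           days_since_training: int) -> Action:
    """Map monitored metrics to a failure-handling or retraining action."""
    if accuracy < policy.critical_accuracy:
        return Action.ROLLBACK
    if (accuracy < policy.min_accuracy
            or days_since_training > policy.max_days_since_training):
        return Action.RETRAIN
    return Action.NONE
```

Keeping thresholds in one versioned object, rather than scattered across scripts, makes it easier to review and audit who changed an operating decision and when.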

Governance is equally critical. As AI systems influence business decisions, enterprises must ensure traceability, explainability, auditability, and regulatory alignment.

Professionals in these roles help define:

- Who owns AI systems at each lifecycle stage,

- How models are versioned, tested, and approved,

- How drift, bias, and degradation are detected,

- How incidents and rollbacks are handled,

- How AI compliance requirements are enforced.
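
For the drift-detection item above, one widely used statistic is the Population Stability Index (PSI), which compares how a feature is distributed in a baseline sample versus recent data. The following is a minimal pure-Python sketch, not a production implementation: the function name `psi`, the equal-width binning, and the `eps` floor for empty bins are choices made for this example.

```python
import math
from typing import List, Sequence


def psi(expected: Sequence[float], actual: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline and a recent sample.

    Bins are equal-width over the baseline's range; both inputs must be
    non-empty. Empty bins are floored at `eps` to avoid log(0).
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def fractions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values to edge bins
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = fractions(expected), fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A common rule of thumb treats a PSI below roughly 0.1 as stable and above roughly 0.25 as a significant shift, though the appropriate alert threshold is a judgment call for each model and feature.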

AI operations is not static engineering. These roles require continuous adaptation as data, regulations, infrastructure, and business priorities evolve.

5. Cloud platforms, vendors, and MLOps ecosystems

Much of enterprise AI operations is shaped by cloud providers, MLOps platforms, and AI infrastructure vendors. Tools from hyperscalers and specialized startups now support deployment automation, monitoring dashboards, feature stores, and model registries.

Professionals working in this ecosystem:

- Integrate enterprise models with cloud-native services,

- Configure monitoring and alerting across environments,

- Optimize cost, latency, and reliability trade-offs,

- Help organizations avoid vendor lock-in and architectural fragility.

System integrators and advisory firms also play a major role, translating enterprise requirements into scalable AI operating architectures. In practice, these actors often influence how AI is run at scale more directly than academic research does.

6. Scaling AI operations across organizations and geographies

As enterprises scale AI across departments and regions, operational complexity increases sharply. Different teams deploy different models, data sources vary by geography, and regulations impose local constraints.

Challenges at scale include:

- Standardizing deployment pipelines across teams,

- Managing distributed data and compute environments,

- Enforcing consistent monitoring and governance,

- Supporting multiple model lifecycles simultaneously,

- Reporting AI health and risk to leadership.

AI operations professionals working at this level focus less on individual models and more on organizational AI resilience – ensuring systems behave predictably across scale, time, and context.

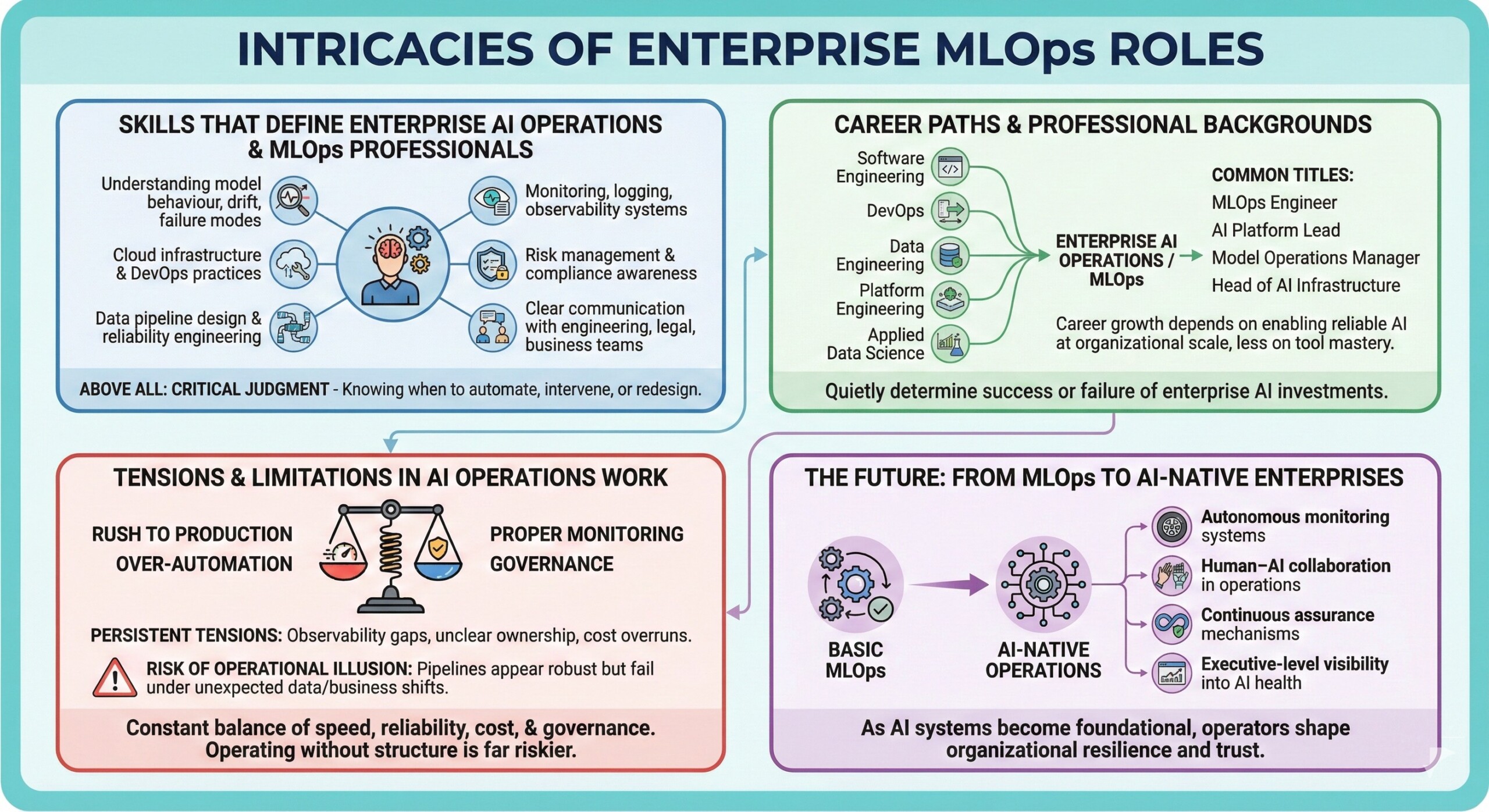

7. Skills that define enterprise AI operations and MLOps professionals

Careers in AI operations demand a hybrid skill set. Deep machine learning knowledge helps, but operational competence matters more.

Key capabilities include:

- Understanding model behavior, drift, and failure modes,

- Cloud infrastructure and DevOps practices,

- Data pipeline design and reliability engineering,

- Monitoring, logging, and observability systems,

- Risk management and compliance awareness,

- Clear communication with engineering, legal, and business teams.

Above all, judgment is critical – knowing when automation is safe, when human intervention is required, and when a system should be paused or redesigned.

8. Career paths and professional backgrounds

AI operations professionals come from diverse backgrounds, including software engineering, DevOps, data engineering, platform engineering, and applied data science. Some transition from research roles after witnessing repeated production failures.

Common titles include MLOps Engineer, AI Platform Lead, Model Operations Manager, and Head of AI Infrastructure. Career growth depends less on mastering specific tools and more on enabling reliable AI at organizational scale.

These roles rarely attract public attention, but they quietly determine whether enterprise AI investments succeed or fail.

9. Tensions and limitations in AI operations work

Enterprise AI operations face persistent tensions. Teams may rush models into production without proper monitoring, underestimate drift, or over-automate lifecycle decisions. Observability gaps, unclear ownership, and cost overruns are common.

There is also the risk of operational illusion – pipelines that appear robust but fail under unexpected data shifts or business changes. AI operations professionals must constantly balance speed, reliability, cost, and governance.

Despite these challenges, operating AI without structure is far riskier. AI operations careers exist to manage the tension between innovation and stability.

10. The future: From MLOps to AI-native enterprises

The future of enterprise AI lies beyond basic MLOps. Organizations are moving toward AI-native operations, where deployment, monitoring, retraining, governance, and risk management are deeply integrated.

This includes autonomous monitoring systems, human–AI collaboration in operations, continuous assurance mechanisms, and executive-level visibility into AI health. As AI systems become foundational to enterprises, the professionals who operate them will increasingly shape organizational resilience and trust.

Billion Hopes summary

Enterprise AI Operations & MLOps careers are about transforming AI from fragile experiments into reliable, governable systems. These roles integrate deployment engineering, monitoring, lifecycle management, and human judgment to ensure AI delivers value safely at scale. As AI becomes core infrastructure for enterprises and societies, professionals who can operationalize AI responsibly will define the future of stability, accountability, and digital confidence.