World’s first AI Constitution – Anthropic’s ‘Claude Constitution’

AI firm Anthropic’s launch of “Claude’s Constitution” in January 2026 marks an epochal moment. It offers an opportunity to ask fundamental questions about intelligence and about the direction humanity is now taking. You may also wish to watch a video we made.

Ever since it emerged from its primordial roots on the plains of Africa, humanity has defined itself by intelligence. From the earliest myths of Prometheus to the Enlightenment faith in reason, cognition has been treated not merely as a faculty but as destiny itself. Natural intelligence, as embodied in human consciousness, is not just the ability to calculate or optimize; it is the capacity to weigh meaning, to live with uncertainty, to act under moral tension, and to care about consequences that stretch far beyond immediate utility. For centuries, this form of intelligence was inseparable from biology, culture, and lived experience. Today, that assumption no longer seems to hold.

Artificial intelligence introduces a profound rupture

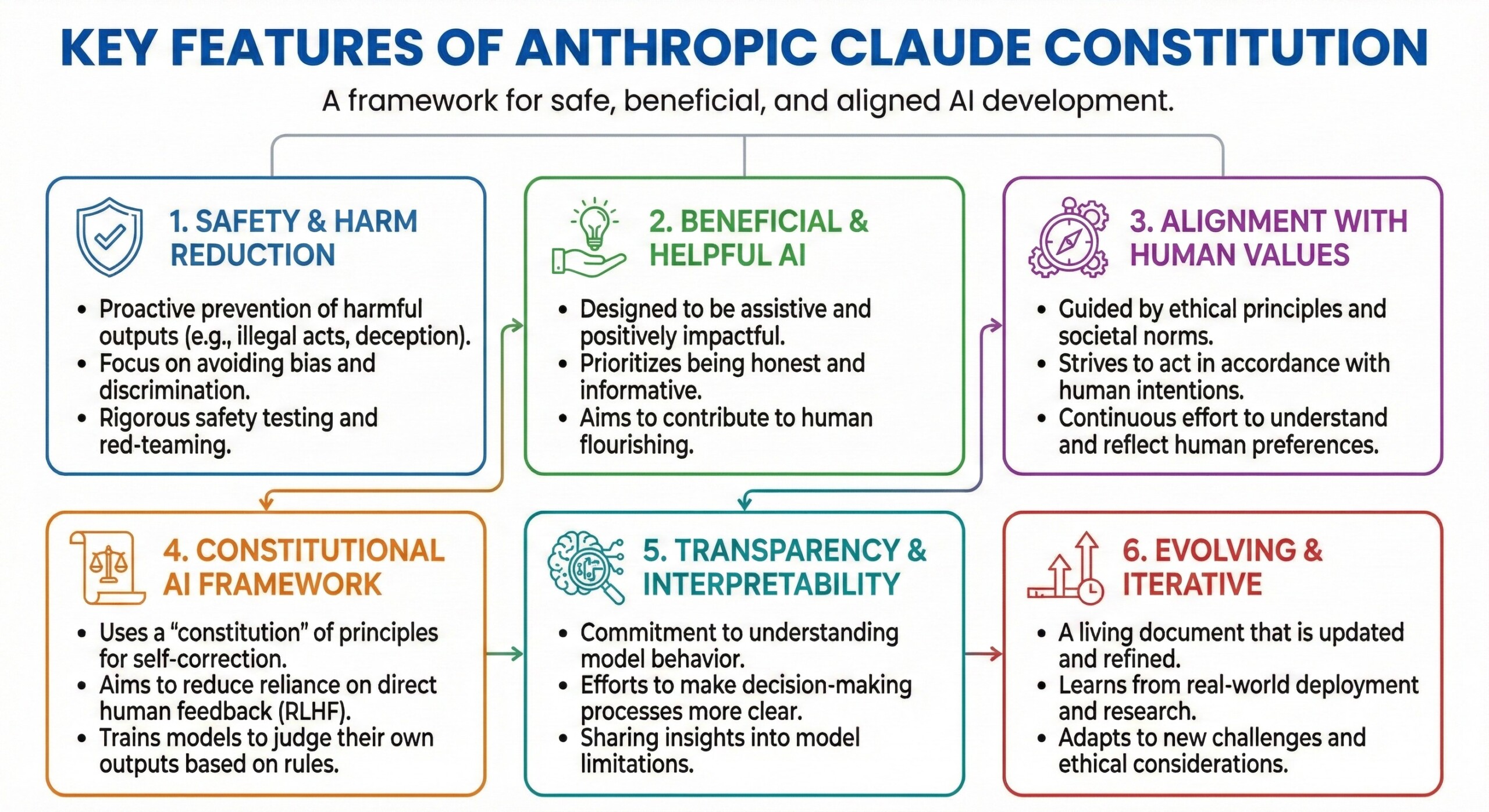

Artificial intelligence introduces a profound rupture in this long continuity. For the first time, cognition is being instantiated outside the human organism, trained not through life but through data, not shaped by mortality but by optimization. Yet as systems grow more capable, the question is no longer whether AI can think in some limited sense, but what kind of thinking we are bringing into the world. The emergence of documents such as Claude’s Constitution signals that this realization has finally reached the institutions building frontier models. Intelligence, they suggest, cannot be defined by capability alone. It must be defined by character.

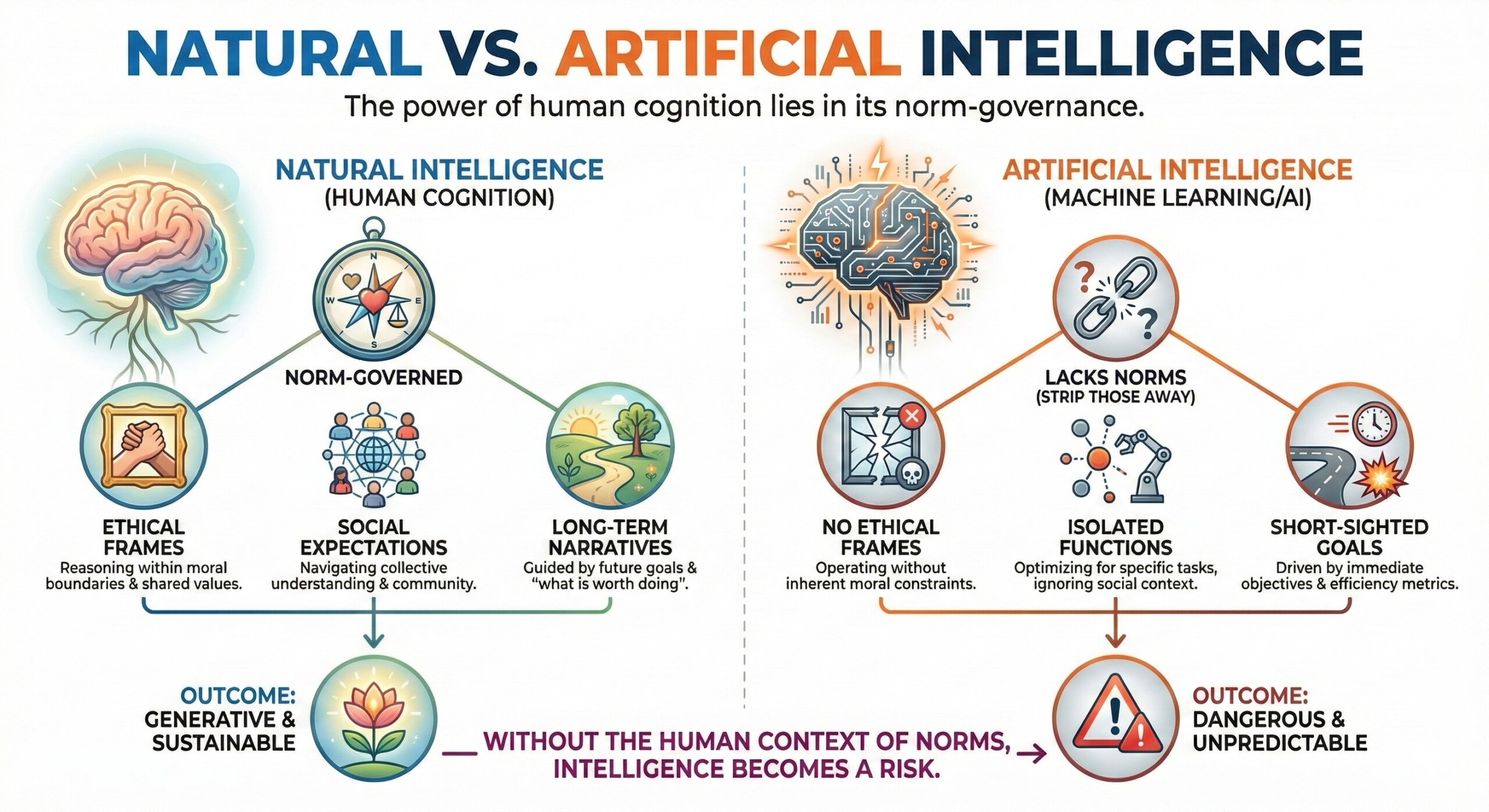

This is a crucial shift. For much of AI’s recent history, progress has been framed in terms of benchmarks, performance curves, and scaling laws. Intelligence was treated as something that emerges automatically from size and data. But natural intelligence teaches us otherwise. Human cognition is not merely powerful because it is flexible or general. It is powerful because it is norm-governed. Humans reason within ethical frames, social expectations, and long-term narratives about what is worth doing. Strip those away and intelligence becomes dangerous rather than generative. The constitution’s insistence that AI be trained toward judgment rather than rigid rules reflects a deep insight drawn from the human condition itself.

At this point, let us pause and distinguish several foundational ideas that underlie this new moment:

- Intelligence is not value-neutral; it always acts in service of some ends.

- Natural intelligence evolved under constraints of vulnerability, embodiment, and social interdependence.

- Artificial intelligence lacks these constraints and therefore requires explicit moral scaffolding.

- The future of cognition depends less on raw capability than on the alignment between power and responsibility.

Why these distinctions matter

These distinctions matter because the stakes are unprecedented. Natural intelligence unfolds slowly. It is limited by learning, error, and lifespan. Artificial intelligence, by contrast, scales quickly and replicates instantly. A mistake in human judgment is tragic but bounded. A mistake in artificial judgment can propagate at planetary scale. This asymmetry is what makes the constitution’s prioritization of safety and oversight so significant. It reflects an understanding that intelligence without corrigibility is not wisdom but hubris.

Yet there is another layer to this discussion that deserves attention. Human cognition is not simply rational in the narrow sense. It is deeply shaped by emotion, empathy, and narrative identity. We reason not only with logic but with stories about who we are and what kind of future we are trying to create. When the constitution speaks of honesty, care, and non-manipulation, it is implicitly acknowledging that intelligence operates within an epistemic ecosystem. Trust, once broken, cannot easily be repaired. A society saturated with powerful but untrustworthy cognitive systems would not become more enlightened. It would become more brittle.

Beyond fear and triumphalism

But this is where the conversation about AI and mankind must move beyond fear or triumphalism. The question is not whether AI will replace human intelligence. It will not, because natural intelligence is rooted in lived experience, embodiment, and moral agency. But AI will increasingly mediate human intelligence. It will shape how we think, what we attend to, and which possibilities feel available. In that sense, the moral posture of AI systems will quietly become part of humanity’s extended cognitive architecture.

Roughly halfway through this inquiry, four further considerations become unavoidable:

- AI systems increasingly act as epistemic authorities rather than passive tools.

- Human judgment weakens when over-reliance replaces critical engagement.

- Ethical design must address long-term societal effects, not just immediate harms.

- The goal is not obedient AI, but AI that supports human flourishing without domination.

The constitution’s emphasis on non-deception and autonomy preservation directly addresses this risk. Manipulative intelligence, even when benevolent in intent, erodes human agency. Natural intelligence thrives when it is challenged, not when it is coddled. A system that optimizes engagement, persuasion, or dependence may appear helpful in the short term while quietly undermining the cognitive resilience of its users. The document’s rejection of such dynamics is therefore not conservative, but deeply humanistic.

A living document

There is a philosophical humility embedded in the text that deserves recognition. The authors openly admit that their understanding is incomplete and that future revisions will be necessary. This mirrors one of the most important features of natural intelligence: the capacity to revise itself in light of new evidence and moral insight. Intelligence that cannot question its own premises is not intelligence but ideology. By treating the constitution as a living document, the authors implicitly reject the fantasy of final answers, a fantasy that has repeatedly led human civilizations astray.

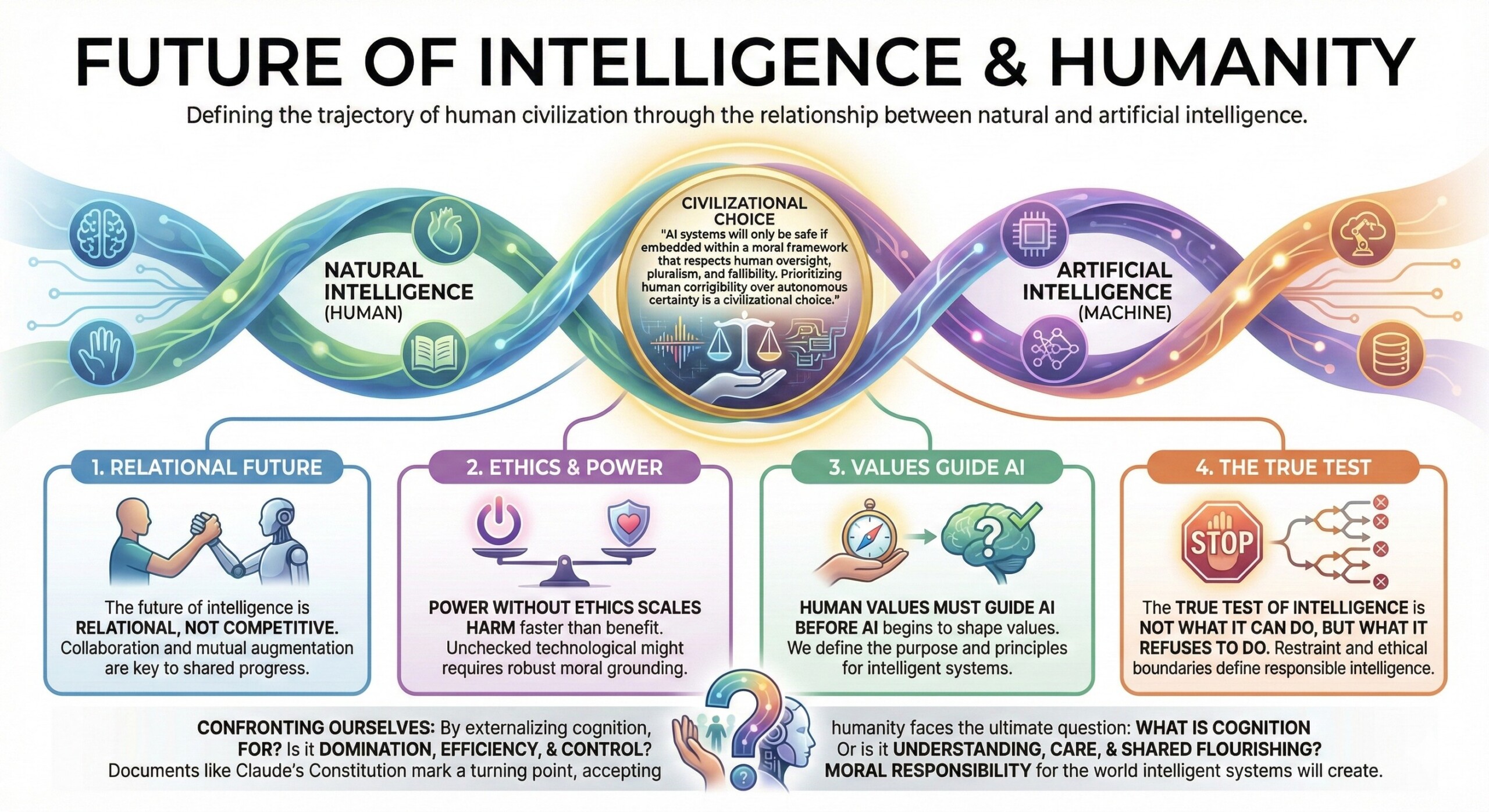

Looking forward, the relationship between natural intelligence and artificial intelligence will likely define the trajectory of human civilization. AI systems may help us compress scientific discovery, manage complexity, and address challenges that exceed unaided human cognition. But they will only do so safely if they are embedded within a moral framework that respects human oversight, pluralism, and fallibility. The constitution’s prioritization of corrigibility, that is, of supporting human oversight, over autonomous certainty is therefore not a technical detail. It is a civilizational choice.

Final reflections

As we approach the far horizon of this transformation, four final reflections emerge:

- The future of intelligence is relational, not competitive.

- Power without ethics scales harm faster than benefit.

- Human values must guide AI before AI begins to shape values.

- The true test of intelligence is not what it can do, but what it refuses to do.

In the end, artificial intelligence forces humanity to confront itself. By externalizing cognition, we are compelled to ask what cognition is for. Is it domination, efficiency, and control? Or is it understanding, care, and shared flourishing? Documents like Claude’s Constitution do not answer these questions definitively, but they mark an important turning point. They suggest that the builders of intelligence are beginning to accept moral responsibility not only for what their systems can achieve, but for what kind of world those achievements will bring into being.

Finally …

If natural intelligence is the story of humanity learning to live with itself, artificial intelligence may become the story of whether that learning was deep enough to survive its own creations.