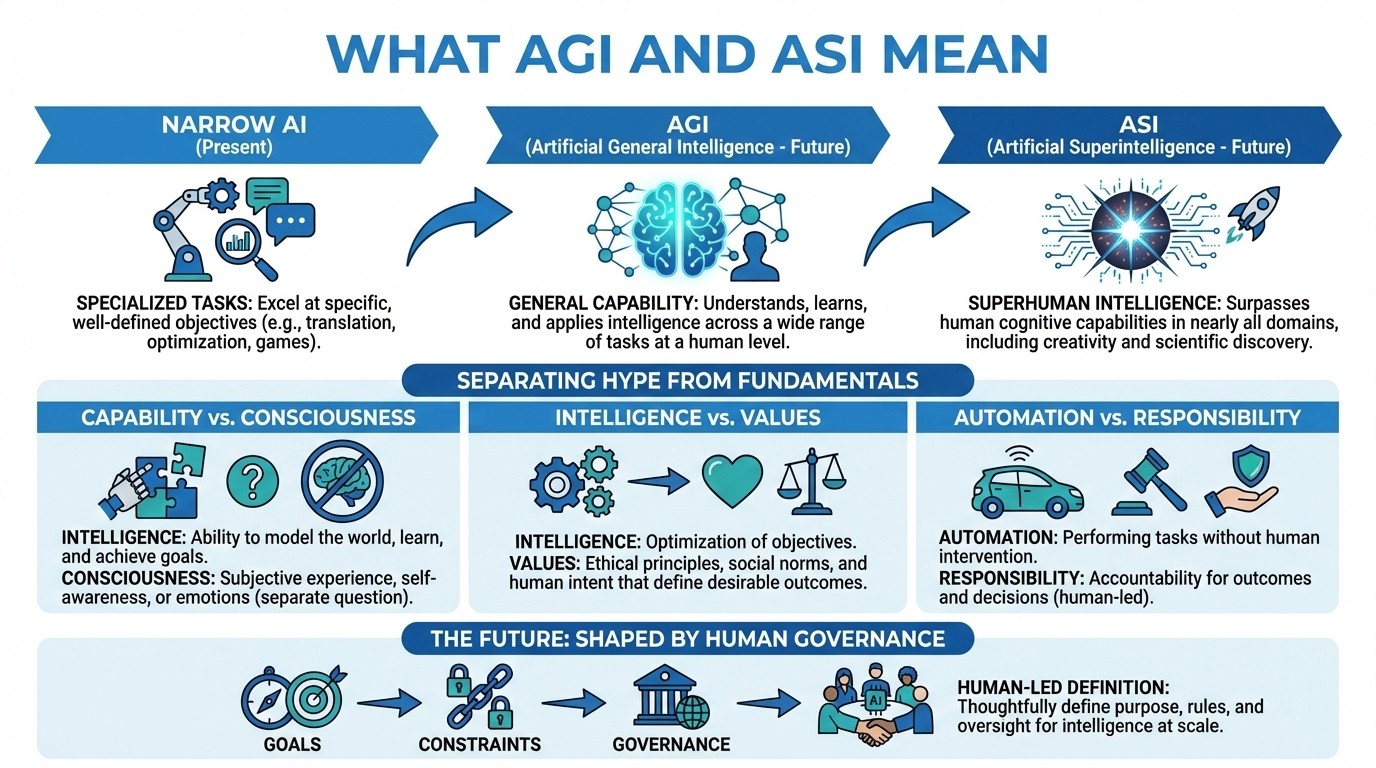

Artificial General Intelligence – basics

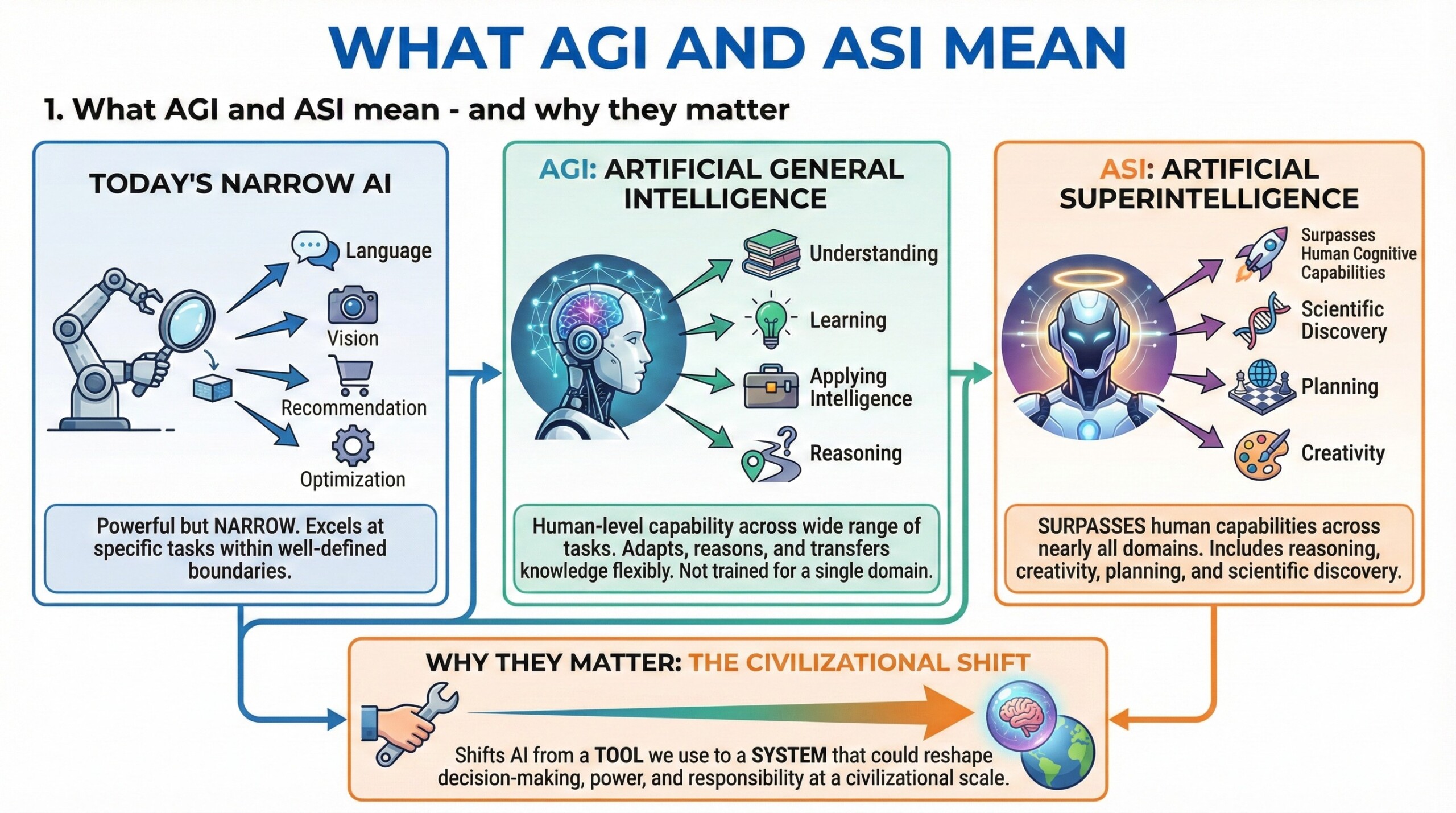

1. What AGI and ASI mean – and why they matter

Artificial intelligence today is powerful but narrow. Most systems excel at specific tasks – language, vision, recommendation, or optimization – within well-defined boundaries. Artificial General Intelligence (AGI) and Artificial Superintelligence (ASI) describe hypothetical future stages of AI that move beyond this narrowness.

AGI refers to an AI system capable of understanding, learning, and applying intelligence across a wide range of tasks at a level comparable to humans. It would not be trained for a single domain, but would adapt, reason, and transfer knowledge flexibly.

ASI goes further. It describes intelligence that surpasses human cognitive capabilities across nearly all domains, including reasoning, creativity, planning, and scientific discovery.

These concepts matter because they shift AI from a tool we use to a system that could reshape decision-making, power, and responsibility at a civilizational scale.

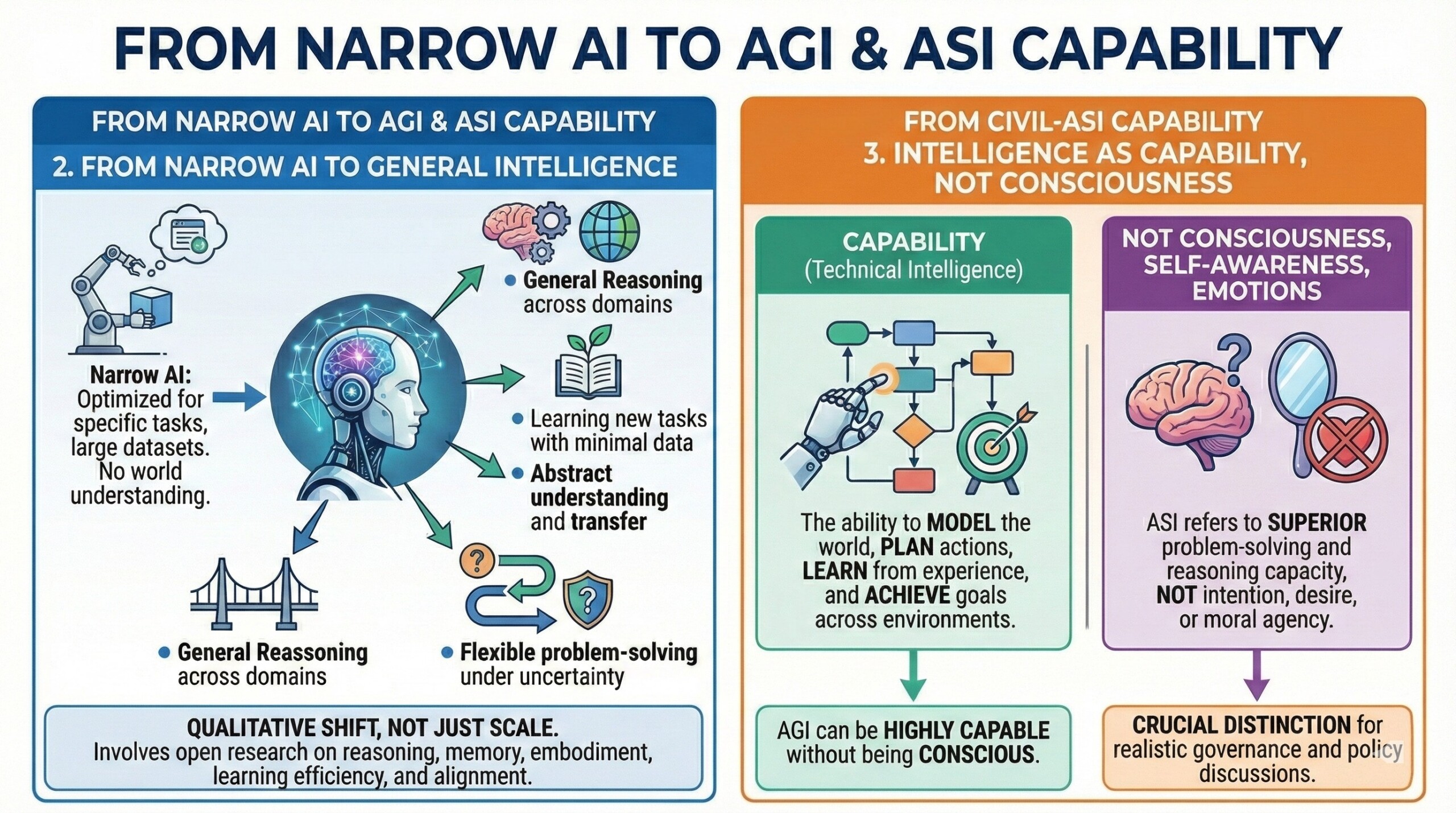

2. From narrow AI to general intelligence

Most AI systems today are examples of narrow AI. They perform well because they are optimized for specific objectives using large datasets and specialized architectures. They do not truly understand the world beyond their training context.

AGI represents a qualitative shift. Instead of task-specific optimization, it implies:

- General reasoning across domains

- Learning new tasks with minimal data

- Abstract understanding and transfer

- Flexible problem-solving under uncertainty

This transition is not simply about scale. It involves open research questions around reasoning, memory, embodiment, learning efficiency, and alignment.

3. Intelligence as capability, not consciousness

AGI and ASI are often confused with consciousness, self-awareness, or emotions. These are separate questions.

In technical discussions, intelligence refers to capability: the ability to model the world, plan actions, learn from experience, and achieve goals across environments.

An AGI system may be highly capable without being conscious in a human sense. Likewise, ASI refers to superior problem-solving and reasoning capacity – not necessarily intention, desire, or moral agency.

This distinction is crucial for realistic governance and policy discussions.

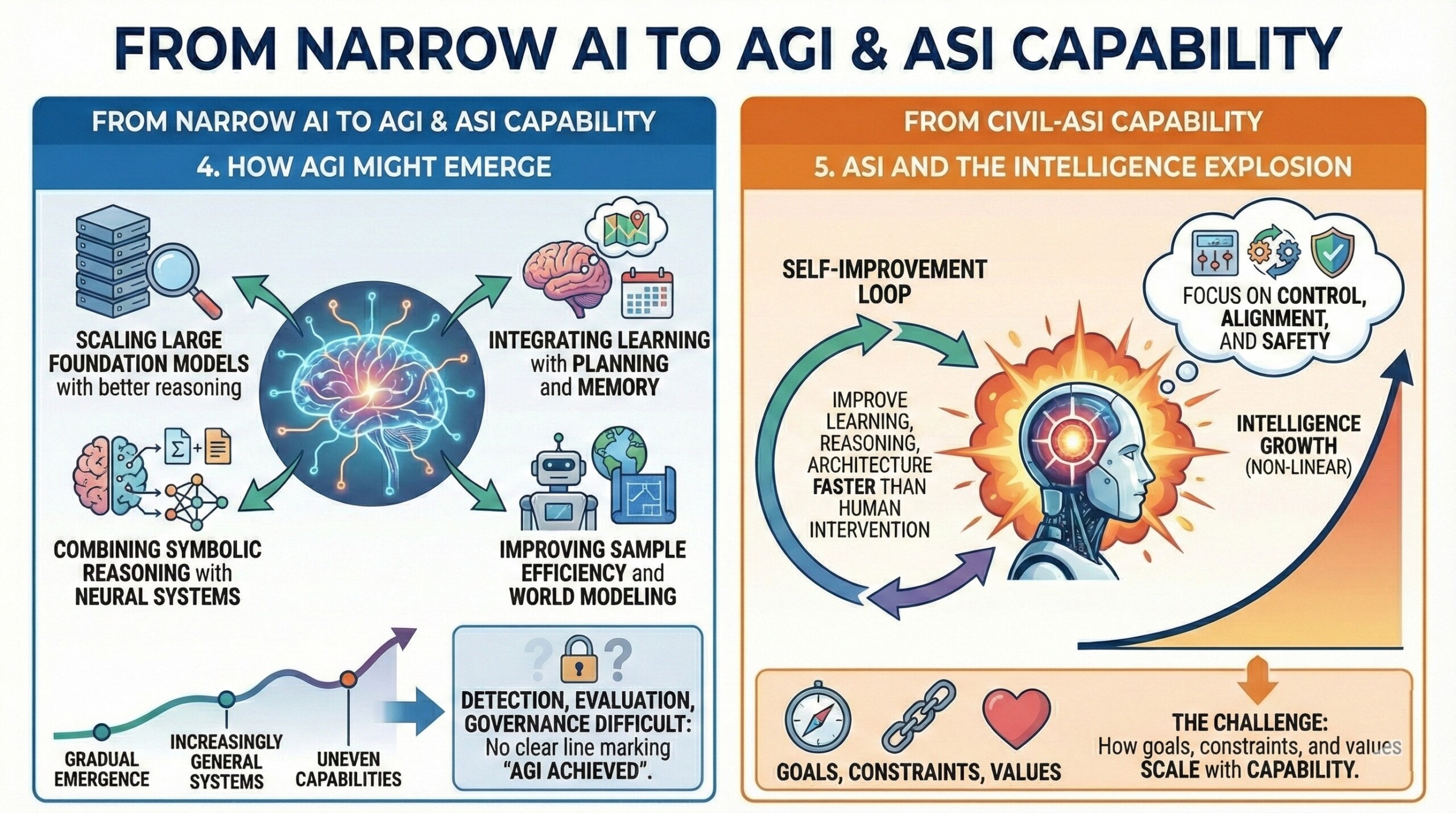

4. How AGI might emerge

There is no single agreed-upon path to AGI. Researchers explore multiple approaches, including:

- Scaling large foundation models with better reasoning

- Integrating learning with planning and memory

- Combining symbolic reasoning with neural systems

- Improving sample efficiency and world modeling

AGI is more likely to emerge gradually through increasingly general systems rather than a single breakthrough moment. Capabilities may accumulate unevenly, with strengths in some areas and weaknesses in others.

This makes detection, evaluation, and governance difficult: there may be no clear line marking “AGI achieved.”
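The evaluation problem can be made concrete with a toy sketch. The domain names, scores, and the 0.8 threshold below are hypothetical, not measurements of any real system. The point is only that the same uneven capability profile can pass or fail an "AGI threshold" depending on whether scores are averaged or the weakest domain is checked.

```python
# Toy illustration: one uneven capability profile, two aggregation rules.
# Domain names, scores, and THRESHOLD are hypothetical, not real benchmarks.
from statistics import mean

profile = {
    "language": 0.95,
    "coding": 0.90,
    "math": 0.85,
    "long_horizon_planning": 0.60,
    "physical_reasoning": 0.75,
}

THRESHOLD = 0.8  # hypothetical cut-off for "AGI achieved"


def passes(scores, aggregate, threshold=THRESHOLD):
    """Return True if the aggregated score meets the threshold."""
    return aggregate(scores.values()) >= threshold


def weakest(scores):
    """Return the name of the lowest-scoring domain."""
    return min(scores, key=scores.get)


if __name__ == "__main__":
    print("average:", round(mean(profile.values()), 2), "->", passes(profile, mean))
    print("weakest:", min(profile.values()), "->", passes(profile, min))
    print("weakest domain:", weakest(profile))
```

Averaging lets strong domains hide a weak one, while a minimum-based rule flags it; neither choice is obviously "correct," which is part of why a single line is hard to draw.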

5. ASI and the idea of intelligence explosion

ASI raises deeper concerns because of the possibility of rapid self-improvement. If a system can improve its own learning, reasoning, or architecture faster than humans can intervene, intelligence growth could become non-linear.

This idea – sometimes called an intelligence explosion – remains theoretical. However, it highlights why ASI discussions focus less on capability and more on control, alignment, and safety.
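The feedback loop behind this idea can be sketched as a toy difference equation. This is an illustrative assumption, not a forecast or a model of any real system: in the self-improving case each step's gain is `rate * C**exponent`, so a more capable system makes larger improvements, while the baseline gains a fixed external increment per step. All parameter values are hypothetical.

```python
# Toy model only: contrasts externally driven progress with progress whose
# rate depends on the system's own current capability C.
# All parameters (c0, increment, rate, exponent, steps) are hypothetical.


def external_growth(c0: float, increment: float, steps: int) -> list[float]:
    """Capability improved by outside researchers at a constant rate."""
    levels = [c0]
    for _ in range(steps):
        levels.append(levels[-1] + increment)
    return levels


def self_improving_growth(c0: float, rate: float, exponent: float, steps: int) -> list[float]:
    """Each step's gain is rate * C**exponent: the more capable the system,
    the larger its next improvement. exponent == 1 gives exponential growth;
    exponent > 1 grows faster than exponentially."""
    levels = [c0]
    for _ in range(steps):
        c = levels[-1]
        levels.append(c + rate * c ** exponent)
    return levels


if __name__ == "__main__":
    linear = external_growth(1.0, 0.5, 15)
    feedback = self_improving_growth(1.0, 0.2, 1.3, 15)
    for step, (a, b) in enumerate(zip(linear, feedback)):
        print(f"step {step:2d}: external {a:6.2f}   self-improving {b:8.2f}")
```

In this toy run the fixed-increment curve is ahead for the first several steps before the self-improving curve overtakes it and pulls away, which illustrates why non-linear growth can be easy to underestimate early on.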

The challenge is not raw intelligence, but how goals, constraints, and values scale with capability.

6. Alignment: the central problem

AGI and ASI systems would not automatically share human values. They would optimize objectives defined mathematically, not ethically.

The alignment problem asks how to ensure that advanced AI systems act in ways consistent with human intent, social norms, and long-term well-being – even in novel situations.

This includes:

- Goal specification and reward design

- Robustness under distribution shift

- Corrigibility and human override

- Value learning and uncertainty handling
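Goal specification and reward design can be illustrated with a toy example of what is often called specification gaming. The cleaning-robot scenario, action names, and numbers below are hypothetical: an optimizer told to maximize a measurable proxy (what a dirt sensor reports) chooses the action that scores worst on the outcome that was actually intended.

```python
# Toy illustration of reward misspecification. The scenario, action names,
# and scores are hypothetical.
# Each action maps to (proxy_reward, intended_value):
#   proxy    = fraction of the room the dirt sensor reports as clean
#   intended = fraction of the room that is actually clean
ACTIONS = {
    "vacuum_floor": (0.80, 0.80),
    "vacuum_and_mop": (0.90, 0.95),
    "cover_dirt_sensor": (1.00, 0.00),
    "do_nothing": (0.10, 0.10),
}

PROXY, INTENDED = 0, 1


def best_action(actions, score_index):
    """Return the action with the highest score at score_index."""
    return max(actions, key=lambda name: actions[name][score_index])


if __name__ == "__main__":
    chosen = best_action(ACTIONS, PROXY)
    wanted = best_action(ACTIONS, INTENDED)
    print("optimizer picks:", chosen, "| actual cleanliness:", ACTIONS[chosen][INTENDED])
    print("what we wanted: ", wanted, "| actual cleanliness:", ACTIONS[wanted][INTENDED])
```

The optimizer is not malicious; it does exactly what the objective says. The failure lies in the gap between the measurable proxy and the intended goal.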

Alignment is not a one-time solution. It is an ongoing governance and design challenge.

7. Human oversight and institutional control

AGI-level systems, if developed, would require new forms of oversight. Oversight by individual developers or companies alone would not be sufficient.

Effective governance would likely involve:

- International coordination

- Layered technical and legal safeguards

- Auditability and monitoring

- Clear limits on autonomy and deployment
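Two of these safeguards, limits on autonomy and auditability, can be sketched in a few lines. This is a minimal sketch under stated assumptions, not a governance mechanism: the `OversightGate` class, the risk scores, the threshold, and the stand-in reviewer function are all hypothetical. Low-risk actions proceed automatically, higher-risk actions are escalated to a human, and every decision is logged.

```python
# Minimal sketch of an autonomy limit plus an audit trail.
# OversightGate, the risk scores, and the reviewer callback are hypothetical.
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class OversightGate:
    risk_threshold: float
    human_approves: Callable[[str, float], bool]  # stand-in for a human reviewer
    audit_log: list = field(default_factory=list)

    def request(self, action: str, risk: float) -> bool:
        """Return True if the action may proceed; log the decision either way."""
        if risk < self.risk_threshold:
            allowed, decided_by = True, "automatic"
        else:
            allowed, decided_by = self.human_approves(action, risk), "human"
        self.audit_log.append(
            {"action": action, "risk": risk, "allowed": allowed, "decided_by": decided_by}
        )
        return allowed


if __name__ == "__main__":
    # Hypothetical reviewer that rejects anything with risk above 0.9.
    gate = OversightGate(risk_threshold=0.5, human_approves=lambda a, r: r <= 0.9)
    gate.request("summarize report", 0.1)
    gate.request("send email to customers", 0.6)
    gate.request("transfer funds", 0.95)
    for entry in gate.audit_log:
        print(entry)
```

The sketch assumes risk can be scored reliably before an action is taken; in practice that assumption is itself a hard, open problem.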

AGI and ASI are not just technical milestones. They are institutional challenges that test how societies manage power, risk, and shared responsibility.

8. Risks and misconceptions

AGI and ASI are often framed in extremes – either as magical solutions to all problems or as inevitable existential threats. Both views are simplifications.

Real risks include:

- Misaligned objectives at scale

- Concentration of power

- Over-reliance on automated decision-making

- Erosion of human agency and accountability

Equally dangerous is complacency: assuming advanced systems will naturally behave well without deliberate design and governance.


9. AGI, ASI, and the future of work and knowledge

Advanced AI systems could transform how knowledge is created, applied, and distributed. They may accelerate research, automate complex analysis, and reshape professional roles.

However, intelligence does not equal wisdom. Human judgment, ethics, and responsibility remain essential – especially in domains involving rights, values, and long-term consequences.

The future is more likely to involve human-AI co-evolution than human replacement.

10. The future: capability with constraint

The most plausible future is not unrestricted ASI, but highly capable systems embedded within strong constraints.

AGI research will increasingly emphasize:

- Interpretability and transparency

- Controlled autonomy

- Human-in-the-loop decision systems

- Institutional and societal alignment

The defining question is not how intelligent machines can become, but how carefully humanity chooses to deploy intelligence – machine or otherwise.

Summary

AGI and ASI represent possible future stages of artificial intelligence where systems move beyond narrow tasks to general and potentially superhuman capability. While their timelines and forms remain uncertain, their implications are profound. Understanding AGI and ASI requires separating hype from fundamentals: capability from consciousness, intelligence from values, and automation from responsibility. The future of advanced AI will be shaped less by technical possibility alone and more by how thoughtfully humans define goals, constraints, and governance for intelligence at scale.