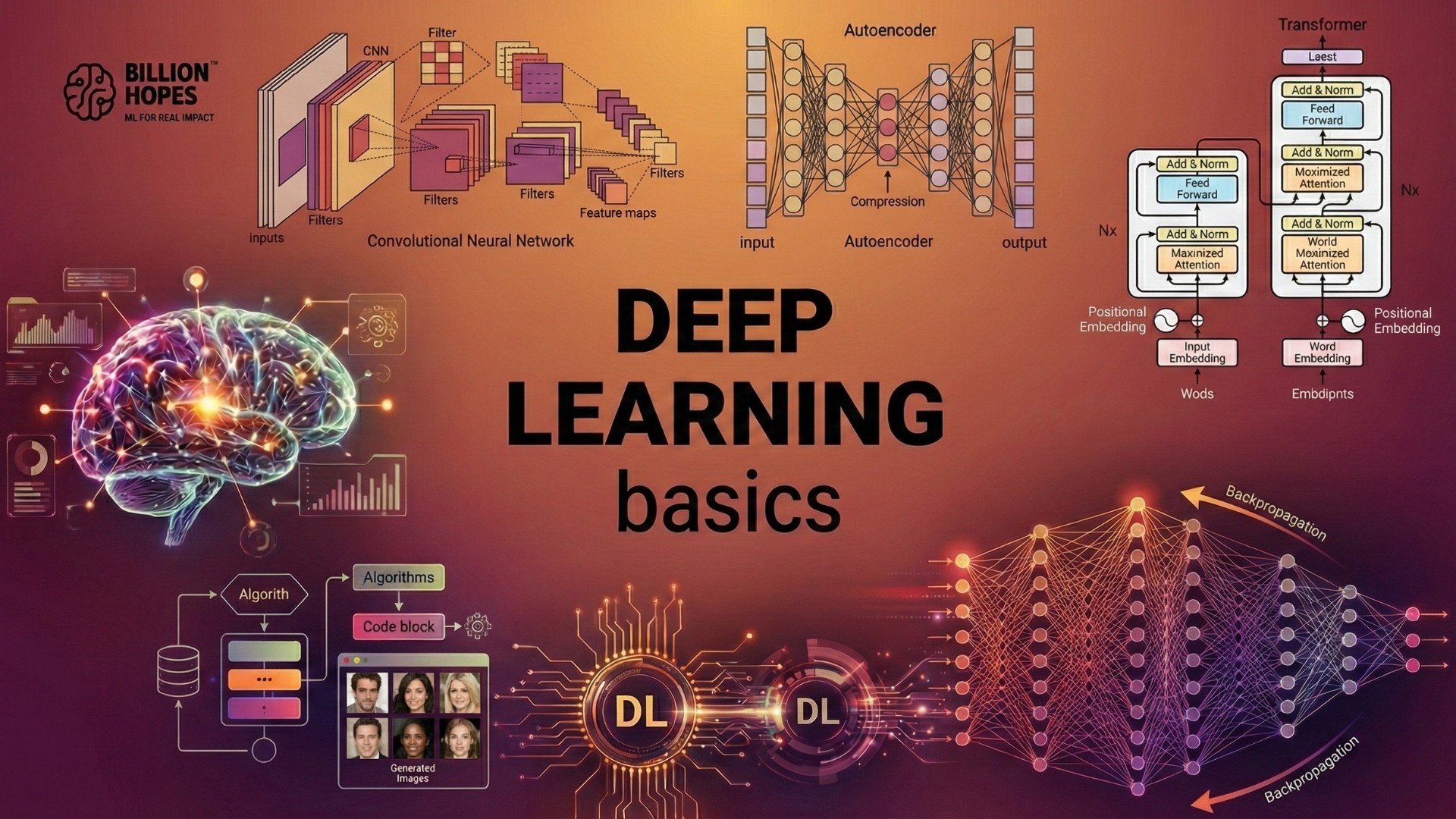

DL basics

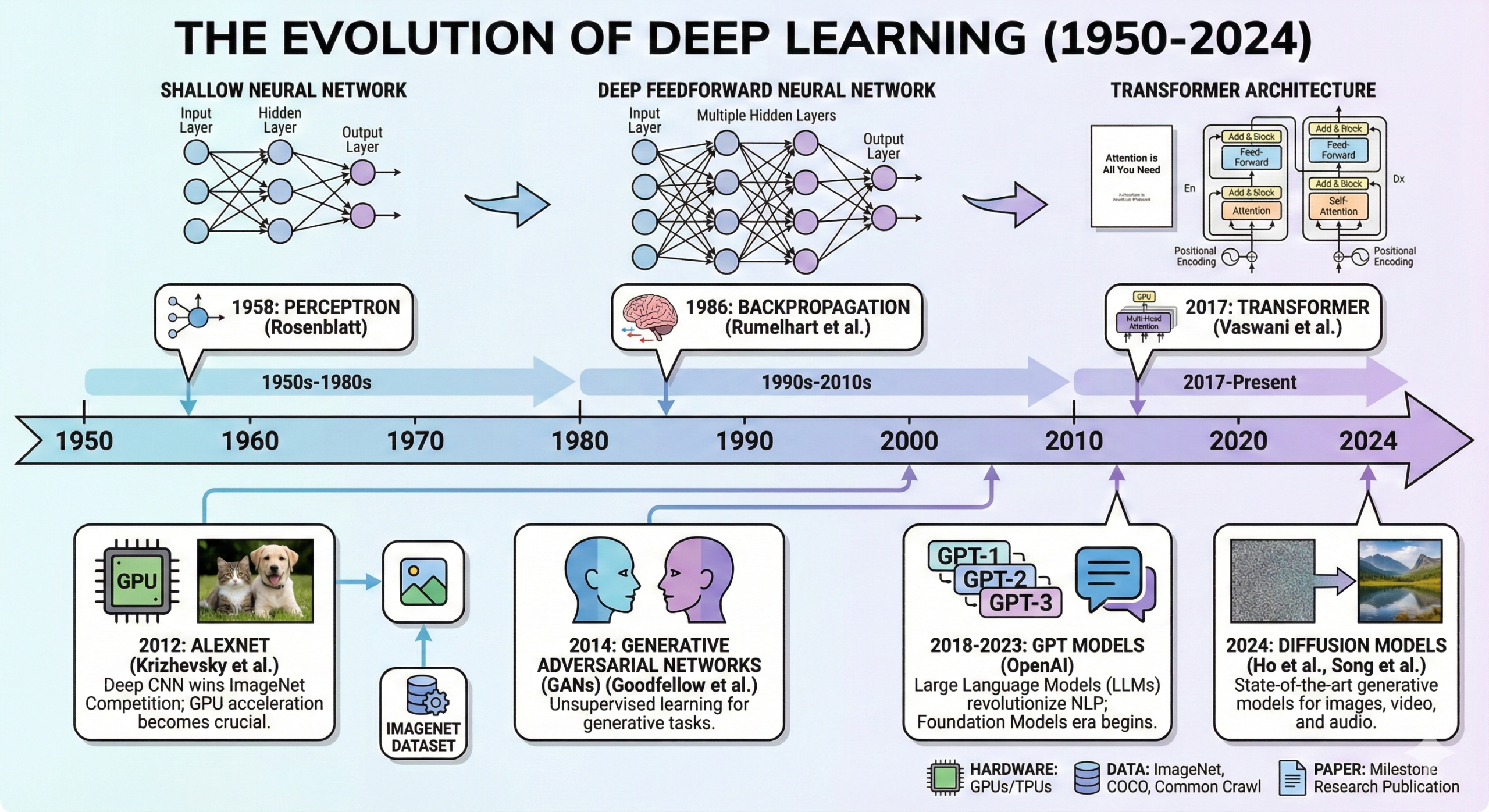

1. Introduction to Deep Learning (DL): What it is and why it matters

Deep Learning (DL) is a specialized branch of machine learning that uses multi-layered artificial neural networks to learn complex patterns from large amounts of data. While early neural networks date back to the 1950s, deep learning became mainstream after 2012, when AlexNet won the ImageNet competition by a wide margin over all competitors. That result is widely seen as the trigger for the modern AI boom. Deep learning also marks a paradigm shift away from Symbolic AI, which depended on human-crafted rules.

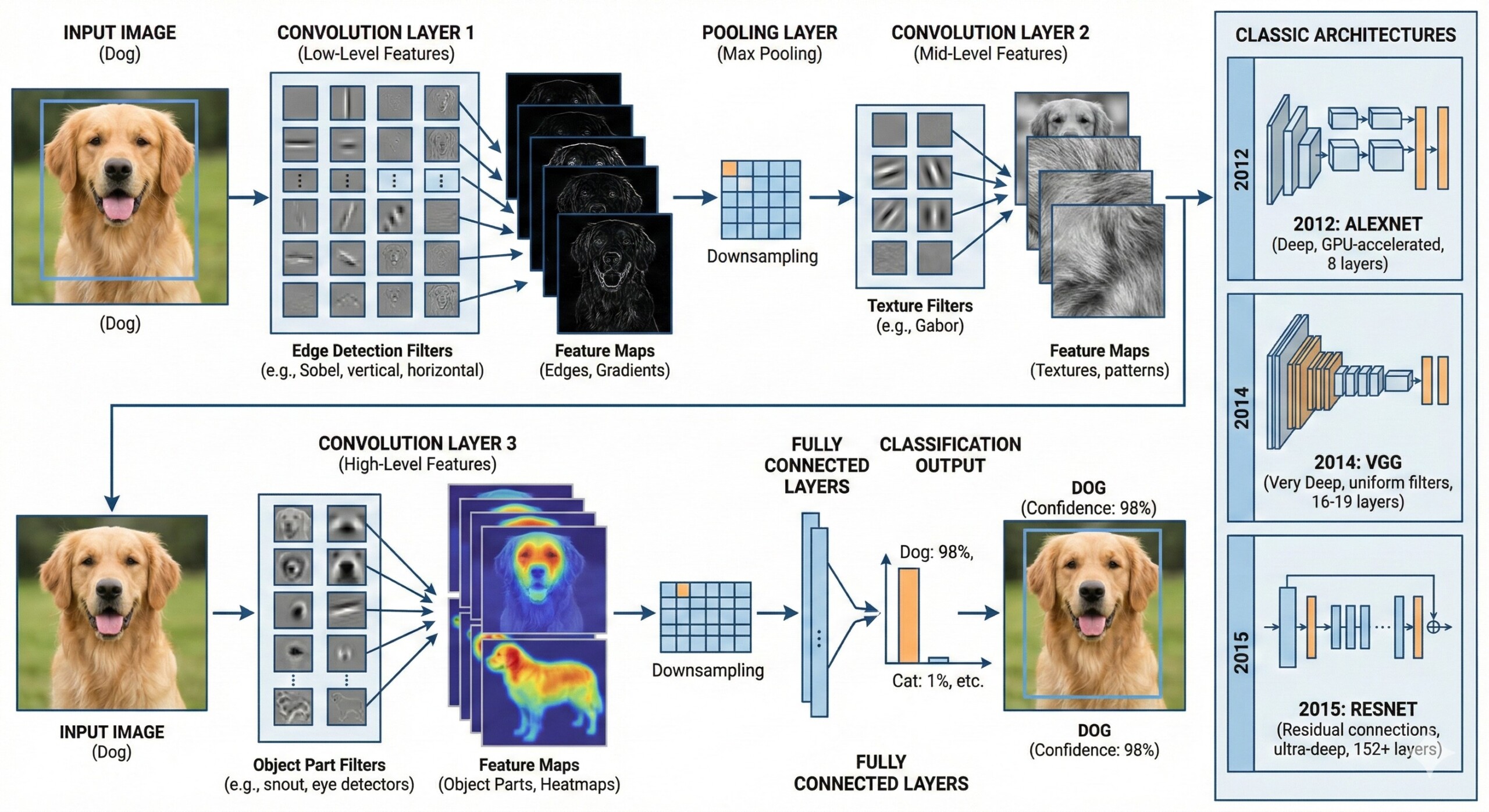

Deep learning excels because it can automatically learn hierarchical representations. For example:

- Early layers detect edges in images

- Middle layers detect textures or shapes

- Deep layers recognize objects like dogs, tumours, or traffic signs

This ability to learn features directly from data – without human-engineered rules – makes DL the dominant method in computer vision, language modeling, medical imaging, robotics, and generative AI. Today’s most advanced systems – GPT-4/5, Claude 3, Gemini, Stable Diffusion, Whisper, AlphaFold – are all deep learning systems.

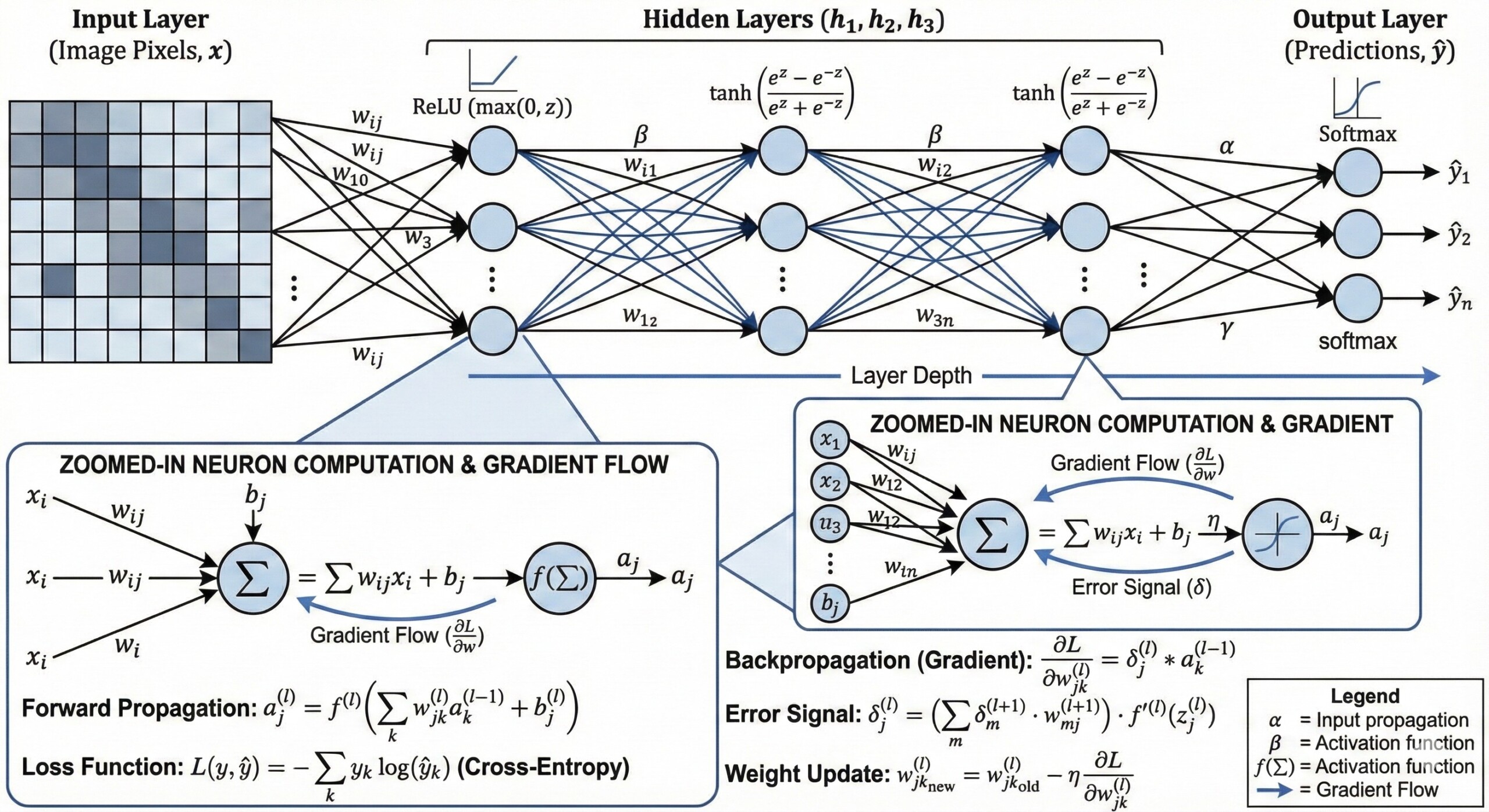

2. Neural Network Architecture: Neurons, Layers, and Gradients

Deep learning models are composed of interconnected building blocks called neurons, organized into layers.

Core Components

- Input Layer: Feeds raw data (pixels, text tokens, audio waveforms).

- Hidden Layers: Multiple layers where computations happen.

- Output Layer: Gives predictions (class scores, generated text, bounding boxes).

Each neuron computes a weighted sum, applies an activation function (ReLU, sigmoid, tanh), and passes information forward.
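
The computation described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a production layer: the function names `relu` and `dense_layer` and all numeric values are made up for the example.

```python
import numpy as np

def relu(z):
    """ReLU activation: max(0, z), applied elementwise."""
    return np.maximum(0.0, z)

def dense_layer(x, W, b, activation=relu):
    """One layer of neurons: weighted sum of inputs plus bias, then activation."""
    return activation(W @ x + b)

# Hypothetical values: 3 input features feeding a layer of 2 neurons.
x = np.array([1.0, 2.0, 3.0])
W = np.array([[0.5, -1.0, 0.75],    # weights of neuron 1
              [-0.5, 0.0, -0.5]])   # weights of neuron 2
b = np.array([0.1, 0.2])

hidden = dense_layer(x, W, b)
print(hidden)  # neuron 1 fires (positive sum); neuron 2 is clipped to 0 by ReLU
```

Stacking several such layers, each feeding its output to the next, gives the input, hidden, and output structure listed above.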

The Power of Depth

A network becomes “deep” when it uses many hidden layers. Depth enables the model to build complex hierarchical abstractions. CNNs may have 100+ layers, and large transformers can exceed 80 layers: GPT-3, for example, stacks 96 transformer blocks, while layer counts for GPT-4-level models have not been officially published.

Gradient Descent

Neural networks learn through gradient-based optimization. Backpropagation computes gradients of the loss function with respect to the weights, and optimizers like SGD, Adam, and RMSProp update the parameters.
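
To make the update rule concrete, here is a minimal sketch of gradient descent on the simplest possible model, a line `y = w*x + b` with mean squared error. The gradients are written out by hand, which is what backpropagation automates for deeper networks. The toy data, the learning rate, and the step count are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, size=100)
y = 3.0 * x + 0.5            # toy targets generated from a known line

w, b = 0.0, 0.0              # parameters to learn
lr = 0.1                     # learning rate (illustrative value)

def mse(w, b):
    """Mean squared error of the current parameters on the toy data."""
    return float(np.mean((w * x + b - y) ** 2))

initial_loss = mse(w, b)
for step in range(500):
    err = w * x + b - y
    grad_w = 2.0 * np.mean(err * x)   # dLoss/dw
    grad_b = 2.0 * np.mean(err)       # dLoss/db
    w -= lr * grad_w                  # plain gradient descent update
    b -= lr * grad_b
final_loss = mse(w, b)

print(f"w={w:.3f}, b={b:.3f}, loss {initial_loss:.3f} -> {final_loss:.6f}")
```

Optimizers such as Adam and RMSProp use the same gradients but adapt the step size for each parameter.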

Example

A tumor-detection model takes a 224×224 MRI image as input, passes it through dozens of convolutional layers, and outputs the probability of malignancy. Such a model has millions of parameters, and training it involves billions of gradient computations.

3. Convolutional Neural Networks (CNNs): Vision Intelligence

Convolutional Neural Networks (CNNs) are the foundation of modern computer vision.

Why CNNs work

- Convolution layers capture spatial patterns such as edges, textures, and shapes.

- Weight sharing keeps the parameter count low, because the same filter is reused at every image position.

- Pooling layers provide a degree of invariance to small translations.
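
The points above can be illustrated with a small NumPy sketch of a single convolution filter followed by max pooling. It is a simplified illustration: real CNN layers handle many channels, padding, and strides, and learn their kernel weights during training. The hand-written edge kernel and the 6×6 toy image are assumptions for the example.

```python
import numpy as np

def conv2d(image, kernel):
    """Valid (no padding), stride-1 2D cross-correlation, as used in CNN layers."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # The same kernel weights are reused at every position (weight sharing).
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling; rows/columns that do not fit are dropped."""
    h = feature_map.shape[0] // size
    w = feature_map.shape[1] // size
    trimmed = feature_map[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))

# Toy 6x6 image: dark left half, bright right half (a vertical edge).
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-written vertical-edge kernel; in a trained CNN these weights are learned.
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

edges = conv2d(image, kernel)   # strong response where the edge is
pooled = max_pool2d(edges)      # downsampled summary of the response
print(edges)
print(pooled)
```

The filter has only 9 weights regardless of image size, which is the efficiency gain from weight sharing.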

Breakthrough Architectures

- LeNet-5 (1998): Handwritten digit recognition.

- AlexNet (2012): First major deep CNN to dominate vision.

- VGG16/19 (2014): Deep but simple stack of convolutions.

- GoogLeNet / Inception (2014): Efficient multi-branch architecture.

- ResNet (2015): Skip connections enabling ultra-deep networks (152 layers).

- EfficientNet (2019): Compound scaling for optimal accuracy/compute balance.
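
The skip connection mentioned for ResNet can be sketched as follows. This is a deliberately simplified version using dense matrices; actual ResNet blocks use convolutions and batch normalization. The function name `residual_block` and the weight shapes are assumptions for illustration.

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def residual_block(x, W1, W2):
    """Compute relu(x + F(x)), where F is a small two-layer transform."""
    fx = W2 @ relu(W1 @ x)   # the learned "residual" F(x)
    return relu(x + fx)      # skip connection adds the input back

x = np.array([1.0, 2.0, 0.5])

# With all-zero weights, F(x) = 0 and the block passes x through unchanged.
W_zero = np.zeros((3, 3))
out = residual_block(x, W_zero, W_zero)
print(out)
```

Because a block can fall back to the identity in this way, adding more blocks does not have to make the signal or its gradients harder to propagate, which is one common explanation for why very deep residual networks are trainable.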

Applications

- Self-driving car perception (Tesla Vision, Waymo).

- Medical imaging diagnostics.

- Industrial defect detection.

- Facial recognition (FaceID, security).

- Satellite and drone imagery analysis.

CNNs remain a strong default for 2D/3D visual processing even as transformers gain ground.

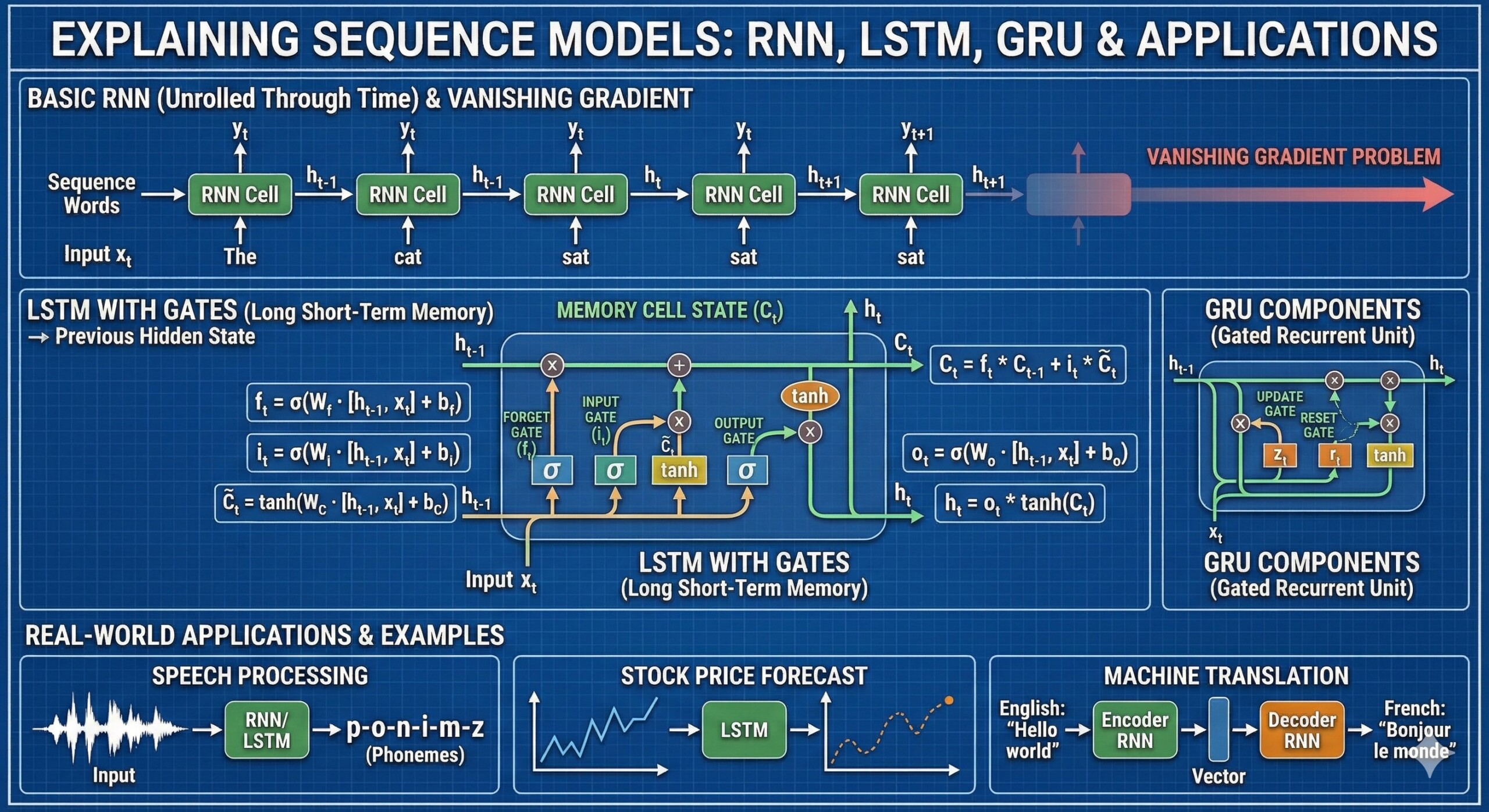

4. Recurrent Networks: Sequence learning and memory

Before transformers took over, Recurrent Neural Networks (RNNs) and their variants were the primary models for sequential data.

Key Architectures

- RNNs: Basic sequence models that suffer from vanishing gradients on long sequences.

- LSTMs (1997): Introduced memory cells and gates, which largely mitigate the long-term dependency problem.

- GRUs (2014): Simplified but powerful RNN variant.
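
As a rough sketch of what "sequence model" means here, the basic (vanilla) RNN keeps a hidden state and updates it one step at a time. The function name `rnn_forward`, the dimensions, and the random weights are assumptions for illustration; LSTMs and GRUs replace the single `tanh` update with gated updates.

```python
import numpy as np

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a vanilla RNN over a sequence; return the hidden state at each step."""
    h = np.zeros(W_hh.shape[0])            # initial hidden state (the "memory")
    states = []
    for x_t in inputs:                     # strictly sequential, one step at a time
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
seq_len, input_dim, hidden_dim = 5, 3, 4   # illustrative sizes
inputs = rng.normal(size=(seq_len, input_dim))
W_xh = rng.normal(scale=0.5, size=(hidden_dim, input_dim))
W_hh = rng.normal(scale=0.5, size=(hidden_dim, hidden_dim))
b_h = np.zeros(hidden_dim)

states = rnn_forward(inputs, W_xh, W_hh, b_h)
print(states.shape)  # one hidden vector per time step
```

During training, gradients flow backward through every `tanh` and every multiplication by `W_hh`, which is why they tend to shrink over long sequences.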

Applications

- Speech recognition (early versions of Siri, Google ASR).

- Language modeling before transformers.

- Time-series forecasting in finance and energy.

- Machine translation (Seq2Seq models).

- Music generation.

Even though transformers replaced them at scale, RNNs remain useful in on-device, low-power applications.

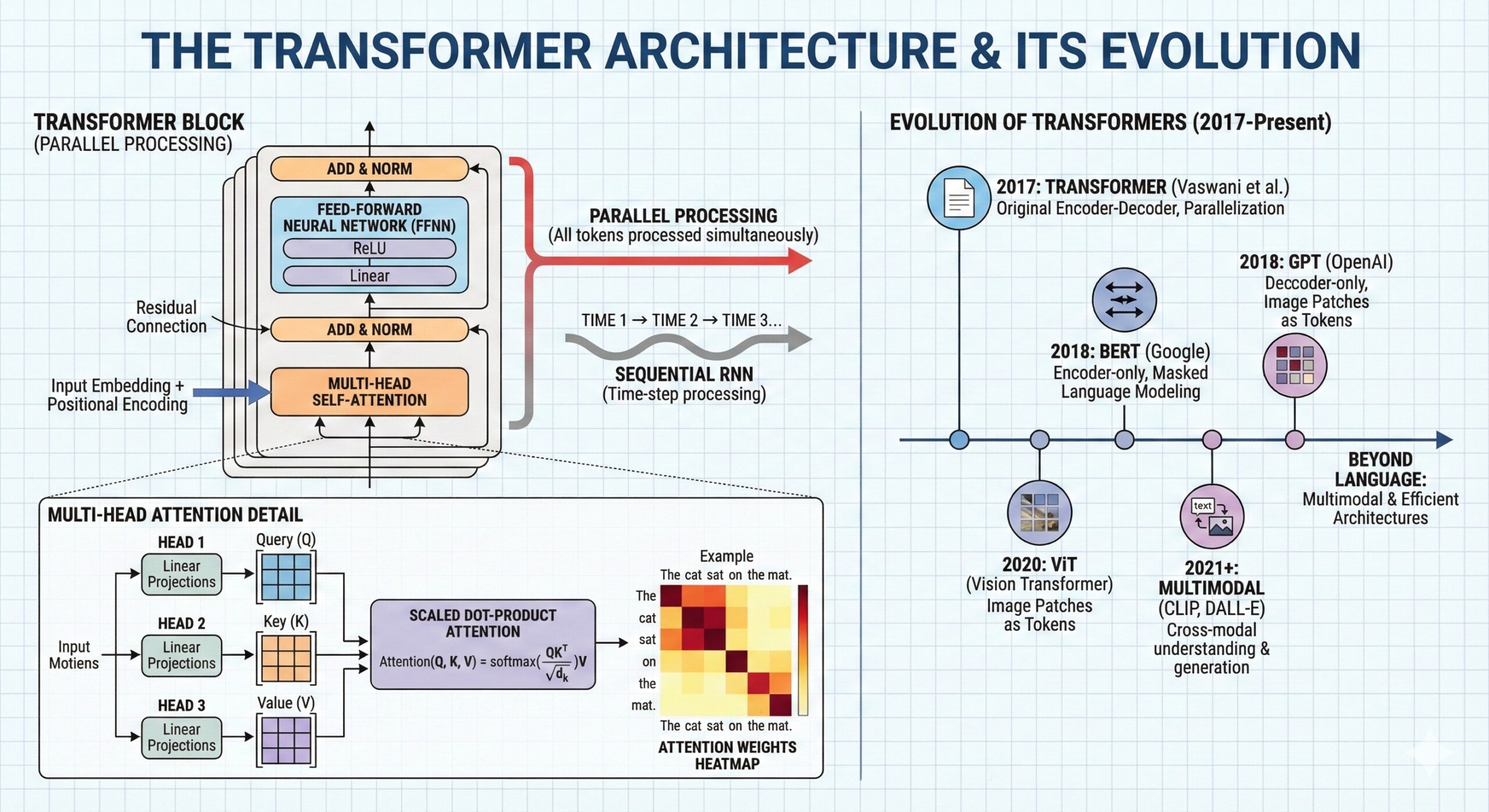

5. The Transformer Revolution: Attention, Scalability & Foundation Models

The transformer architecture, introduced in the 2017 paper “Attention Is All You Need,” transformed the AI landscape.

Self-Attention Mechanism

Transformers compute relationships between all elements in a sequence simultaneously, instead of step-by-step like RNNs. This allows:

- Parallelization

- Better long-range dependency modeling

- Scalability to huge datasets
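
A minimal single-head sketch of scaled dot-product self-attention, the core operation described above, is shown below. The projection matrices are random stand-ins for learned weights, and the sizes are illustrative. Real transformers add multiple heads, masking, residual connections, and layer normalization.

```python
import numpy as np

def softmax(z, axis=-1):
    """Numerically stable softmax."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention over a sequence X."""
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[-1])   # every token scored against every token
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
seq_len, d_model = 4, 8                       # illustrative sizes
X = rng.normal(size=(seq_len, d_model))       # one embedding per token
W_q = rng.normal(size=(d_model, d_model))
W_k = rng.normal(size=(d_model, d_model))
W_v = rng.normal(size=(d_model, d_model))

output, weights = self_attention(X, W_q, W_k, W_v)
print(output.shape, weights.shape)
```

All pairwise scores come from one matrix product, with no loop over time steps, which is what makes the computation easy to parallelize.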

Architectures Built on Transformers

- BERT (2018): Bidirectional understanding for NLP.

- GPT series (2018–2025): Autoregressive text generation models.

- Vision Transformers (ViT, 2020): Transformer adaptation for image patches.

- Multimodal Transformers (2022–2025): Supporting text, image, audio, video.

Foundation Models

These are large, general-purpose models trained on diverse datasets and adaptable to industry use cases:

- GPT-4/5 → general reasoning, coding, writing

- Claude 3 → safe, long-context assistant

- Gemini 2 → multimodal reasoning

- Llama 3 → open-source LLM family

Transformers are the architecture behind most of the recent breakthroughs in modern AI.

6. Training Deep Learning Models: Data, Compute & Optimization

Training deep networks requires enormous amounts of compute and data, which is why large tech firms (and well-funded startups like OpenAI) invest heavily in building these models. This cost also acts as a barrier to entry for any new firm trying to build a so-called “Foundation Model”.

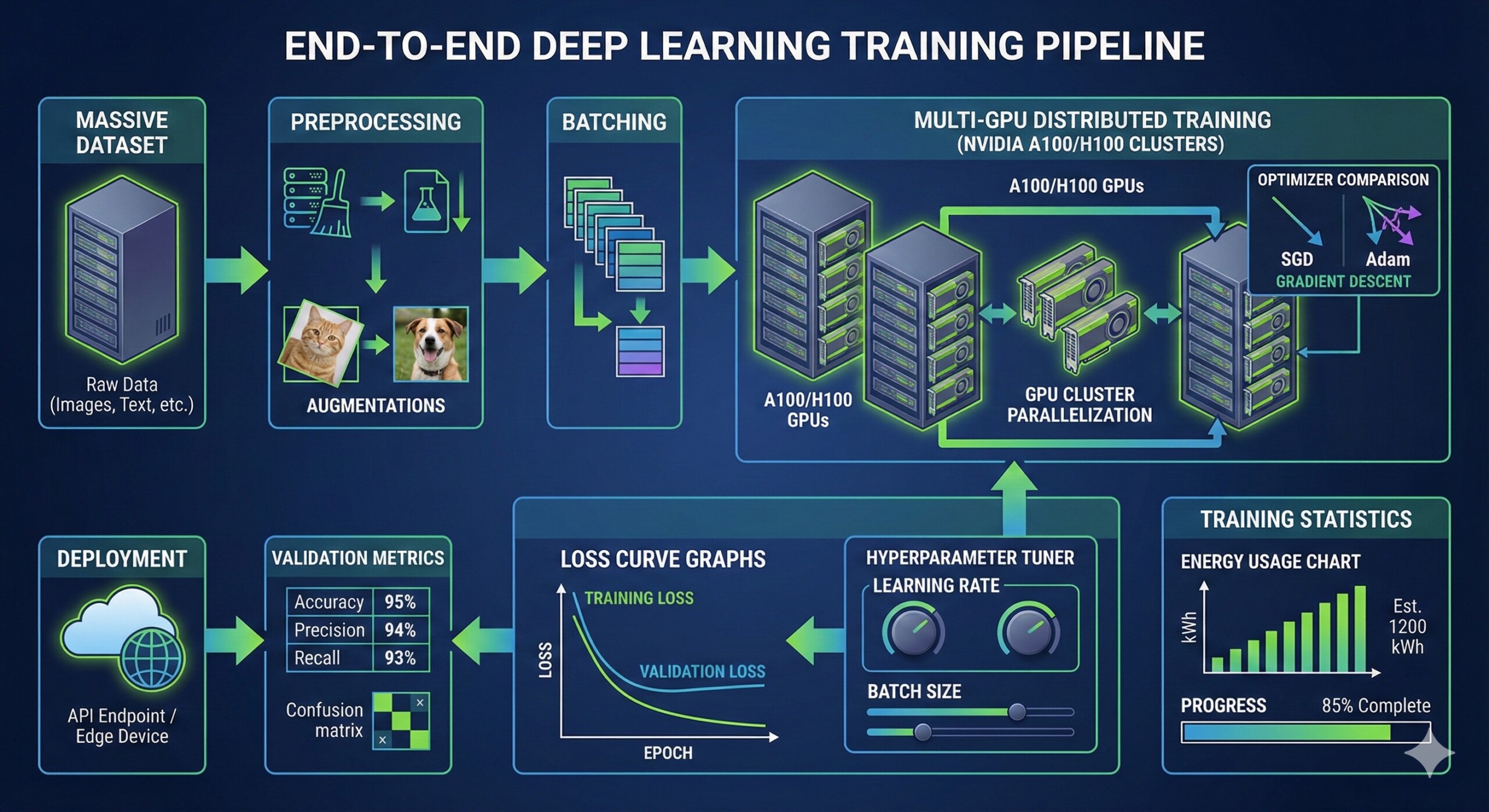

Training Pipeline

- Massive dataset collection

- Preprocessing and augmentations

- Multi-GPU or TPU distributed training

- Hyperparameter tuning

- Validation and safety checks

- Deployment and fine-tuning

Compute Infrastructure

- NVIDIA A100, H100, B100 GPUs

- Google TPU v4/v5e pods

- Distributed training frameworks (DeepSpeed, Horovod, PyTorch DDP)

Challenges

- Training GPT-4–scale models requires thousands of GPU-years.

- Energy consumption and carbon footprint management (“Green AI”).

- Model collapse in generative training loops.
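
To get a feel for where figures like "GPU-years" come from, a common back-of-the-envelope rule estimates training compute as roughly 6 floating-point operations per parameter per training token. The sketch below applies that rule. Every number in it is a hypothetical placeholder, not a published figure for any real model or GPU.

```python
# All figures below are hypothetical placeholders, not published numbers
# for any real model or GPU.
params = 70e9               # model parameters (N)
tokens = 1.4e12             # training tokens (D)
sustained_flops = 1.5e14    # FLOP/s actually achieved per GPU

SECONDS_PER_YEAR = 365 * 24 * 3600

# Rule of thumb: roughly 6 FLOPs per parameter per training token
# (forward plus backward pass).
total_flops = 6 * params * tokens
gpu_seconds = total_flops / sustained_flops
gpu_years = gpu_seconds / SECONDS_PER_YEAR

print(f"Total training compute: {total_flops:.2e} FLOPs")
print(f"Approximate cost: {gpu_years:.0f} GPU-years")
```

With these placeholder values the estimate comes to a little over a hundred GPU-years. Because the formula is linear in both parameters and tokens, a model ten times larger trained on ten times more data would need about a hundred times as much.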

Example

DeepMind’s AlphaFold was trained on decades of protein structure data using specialized architectures and TPU pods, resulting in breakthroughs in biology.

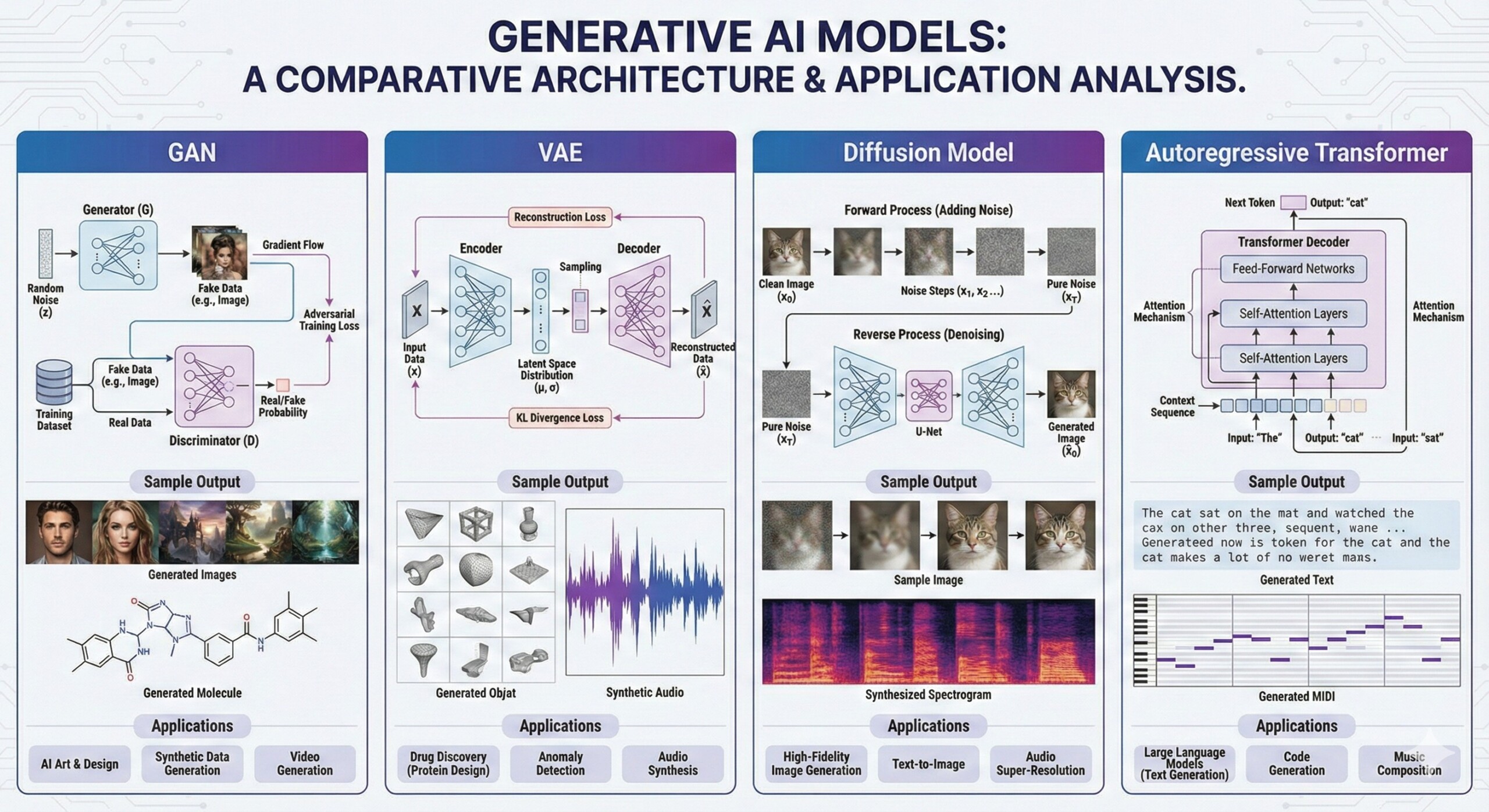

7. Generative Deep Learning: Creating Text, Images, Audio & more

Generative models are among the most impactful inventions in deep learning. They gained rapid mainstream adoption because they can generate text, images, and other outputs from simple prompts.

Types of Generative Models

- GANs (Generative Adversarial Networks): Image synthesis, face generation

- VAEs (Variational Autoencoders): Statistical generative modeling

- Diffusion Models: State-of-the-art in image generation (Stable Diffusion, Midjourney)

- Autoregressive Models: GPT-like systems creating text/audio

- Flow Models: High-quality density modeling
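
As one concrete illustration from the list above, diffusion models are trained to undo a gradual noising process. The sketch below shows only that forward (noising) half, using the closed-form expression from DDPM-style formulations. The linear noise schedule, the step count, and the 8×8 stand-in "image" are assumptions for the example. The learned denoising network, which is the hard part, is not shown.

```python
import numpy as np

def forward_diffuse(x0, t, alpha_bar, rng):
    """Sample x_t = sqrt(alpha_bar[t]) * x0 + sqrt(1 - alpha_bar[t]) * noise."""
    noise = rng.normal(size=x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise
    return x_t, noise

T = 1000
betas = np.linspace(1e-4, 0.02, T)      # linear noise schedule (illustrative)
alpha_bar = np.cumprod(1.0 - betas)     # fraction of signal remaining at each step

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(8, 8))   # stand-in for a tiny image

x_early, _ = forward_diffuse(x0, 10, alpha_bar, rng)      # still close to x0
x_late, _ = forward_diffuse(x0, T - 1, alpha_bar, rng)    # almost pure noise

print(f"signal kept at t=10:  {alpha_bar[10]:.4f}")
print(f"signal kept at t=999: {alpha_bar[-1]:.6f}")
```

A diffusion model is trained to predict the added noise from `x_t` and `t`. Generation then starts from pure noise and removes it step by step.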

Applications

- Synthetic training data for autonomous driving

- AI art, design, and marketing content

- Drug molecule and protein structure generation

- Voice cloning and speech synthesis

- Video generation (Runway Gen-2, OpenAI Sora)

Example

OpenAI’s Sora (2024) demonstrated high-fidelity video generation using a transformer-based diffusion model, producing clips with largely coherent motion and physics.

Generative deep learning represents the creative dimension of AI.

8. Deep Learning in the real world: Industry applications

Deep learning is used everywhere, even in places most people don’t notice.

Healthcare

- Radiology: detecting cancer, fractures, brain abnormalities

- Pathology: cellular classification

- Genomics: DNA sequence modeling

- Drug discovery: molecule design with GNNs and transformers

Finance

- Transaction fraud detection

- Portfolio optimization

- Document processing (KYC, compliance)

- Risk prediction

Transport

- Self-driving car perception

- Autonomous drones

- Traffic optimization systems

Retail & E-commerce

- Visual search (Amazon StyleSnap)

- Recommendation engines

- Inventory optimization

- Virtual try-on systems

Agriculture

- Crop health prediction using satellite imagery

- Automated harvesting robots

- Soil pattern analysis

Deep learning has become a horizontal technology layer that accelerates every digital system. Its use is magnified by the many applications that use Foundation Models as the backend.

9. Limitations, risks & responsible Deep Learning

Deep learning is powerful but not perfect. It has many unresolved problems and rough edges that users need to handle with care.

Limitations

- Requires massive datasets

- High computational cost

- Difficult to explain (“black-box” problem)

- Vulnerable to adversarial attacks (tiny pixel changes → wrong predictions)
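
The adversarial-attack point can be demonstrated on a very small model. The sketch below applies the Fast Gradient Sign Method (FGSM) to a logistic-regression classifier with random weights. The model, the 784-dimensional input, and the perturbation size `eps` are all assumptions chosen to keep the example self-contained. Attacks on real deep networks follow the same idea but obtain the input gradient via backpropagation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def predict(x, w, b):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(w @ x + b)

def fgsm(x, y, w, b, eps):
    """Fast Gradient Sign Method: nudge every input feature by +/- eps."""
    p = predict(x, w, b)
    grad_x = (p - y) * w              # gradient of cross-entropy loss w.r.t. the input
    return x + eps * np.sign(grad_x)

rng = np.random.default_rng(0)
dim = 784                             # e.g. a flattened 28x28 image (hypothetical)
w = rng.normal(scale=0.05, size=dim)  # random stand-in for trained weights
x = rng.uniform(0.0, 1.0, size=dim)   # random stand-in for an input image
b = 1.0 - w @ x                       # bias chosen so the clean input is class 1

eps = 0.1                             # maximum change per feature
x_adv = fgsm(x, y=1.0, w=w, b=b, eps=eps)

clean_prob = predict(x, w, b)
adv_prob = predict(x_adv, w, b)
print(f"clean: {clean_prob:.3f}, adversarial: {adv_prob:.3f}")
```

No single feature moves by more than `eps`, yet the many small changes add up along the direction the model is most sensitive to, and the predicted class flips.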

Risks

- Bias in healthcare decisions due to skewed datasets

- Privacy concerns from training data extraction attacks

- Deepfakes creating misinformation

- Dependence on proprietary foundation models

Responsible AI Practices

- Model interpretability tools (SHAP, LIME, Grad-CAM)

- Bias audits and fairness testing

- Data anonymization and synthetic data

- Governance frameworks (EU AI Act, 2023 U.S. Executive Order on AI)

Example

A widely cited 2018 study (Gender Shades) showed that commercial facial-analysis systems misclassified the gender of darker-skinned women with error rates of up to 34.7%, compared with under 1% for lighter-skinned men, highlighting the urgent need for better dataset diversity.

Responsible deep learning is now as important as the technology itself.

10. Future directions: Beyond today’s Deep Learning

Deep learning continues to evolve rapidly.

Key future trends

- Multimodal Generalist AI: Systems that understand text, images, audio, video, 3D, and sensor streams simultaneously.

- Agentic AI: Autonomous AI agents performing multi-step tasks.

- Neurosymbolic AI: Combining reasoning + neural networks.

- Bio-inspired Architectures: Brain-like learning, neuromorphic chips.

- Efficient DL: Sparse models, quantization, low-precision inference.

- Open-Source Foundation Models: Llama 3, Mistral, DeepSeek.
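
As a small illustration of the "Efficient DL" item, the sketch below quantizes a float32 weight matrix to int8 using simple symmetric per-tensor scaling. This is one basic scheme among many, and production toolchains use more refined variants. The function names and the random weights are assumptions for the example.

```python
import numpy as np

def quantize_int8(weights):
    """Symmetric per-tensor quantization of float weights to int8."""
    scale = np.max(np.abs(weights)) / 127.0   # assumes weights are not all zero
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Map int8 values back to approximate float weights."""
    return q.astype(np.float32) * scale

rng = np.random.default_rng(0)
weights = rng.normal(scale=0.1, size=(64, 64)).astype(np.float32)

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = float(np.max(np.abs(weights - restored)))

print(f"storage: {weights.nbytes} bytes -> {q.nbytes} bytes")
print(f"max absolute error: {max_error:.6f}")
```

Storage drops by a factor of four, and the per-weight rounding error stays within about half a quantization step. Whether that error is acceptable depends on the model and task.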

Long-Term possibilities

- AI copilots for every profession

- Real-time translation between all languages

- AI-designed medicines in months instead of years

- Robots capable of general-purpose household work

- AI that collaborates with humans in scientific discovery

Deep learning is likely to remain the engine behind most breakthroughs in AI over the next decade.