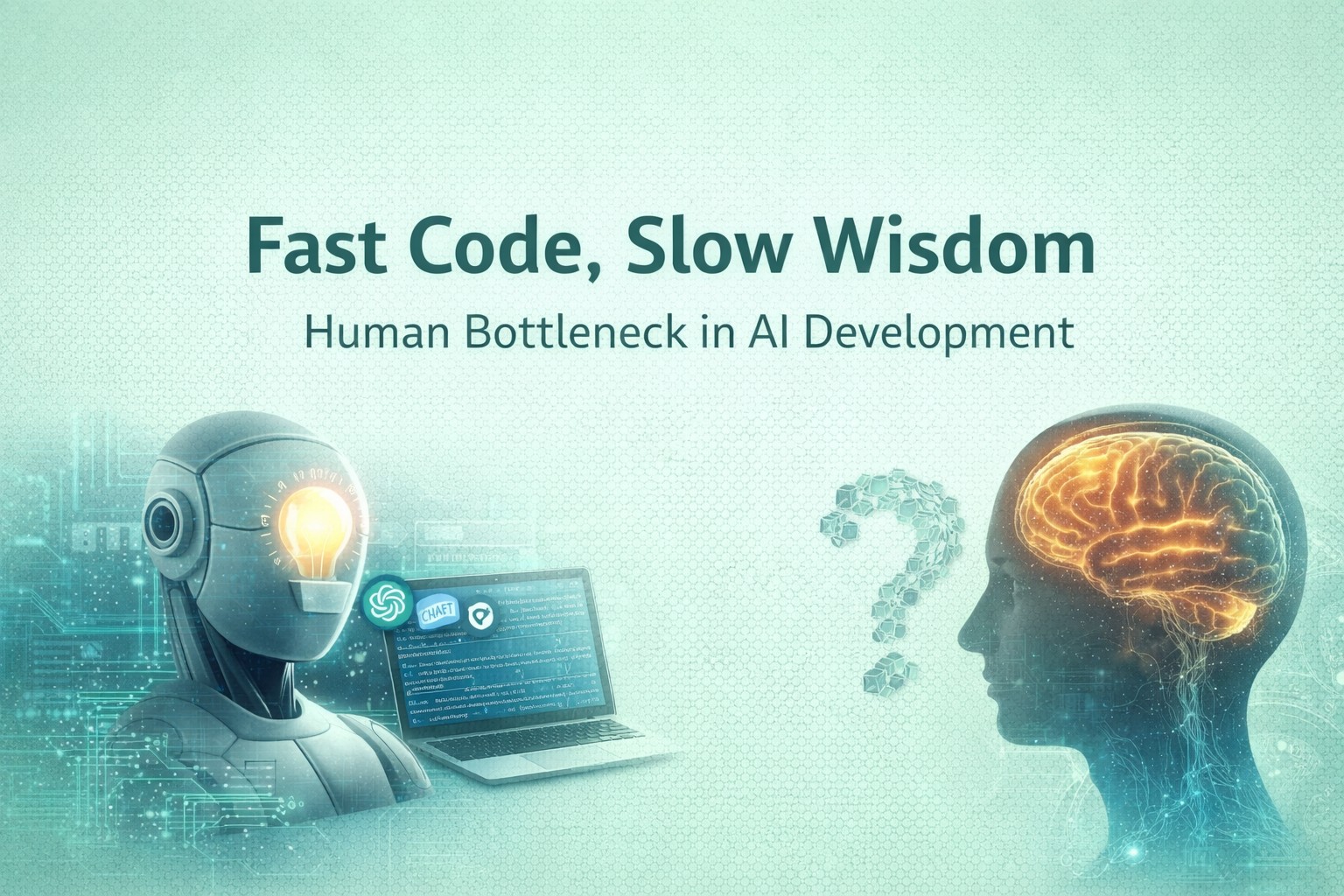

Fast Code, Slow Wisdom: The Human Bottleneck in AI Development

2026 has brought clear signals that AI is transforming software development at a remarkable pace. That is good news for software natives and newcomers alike!

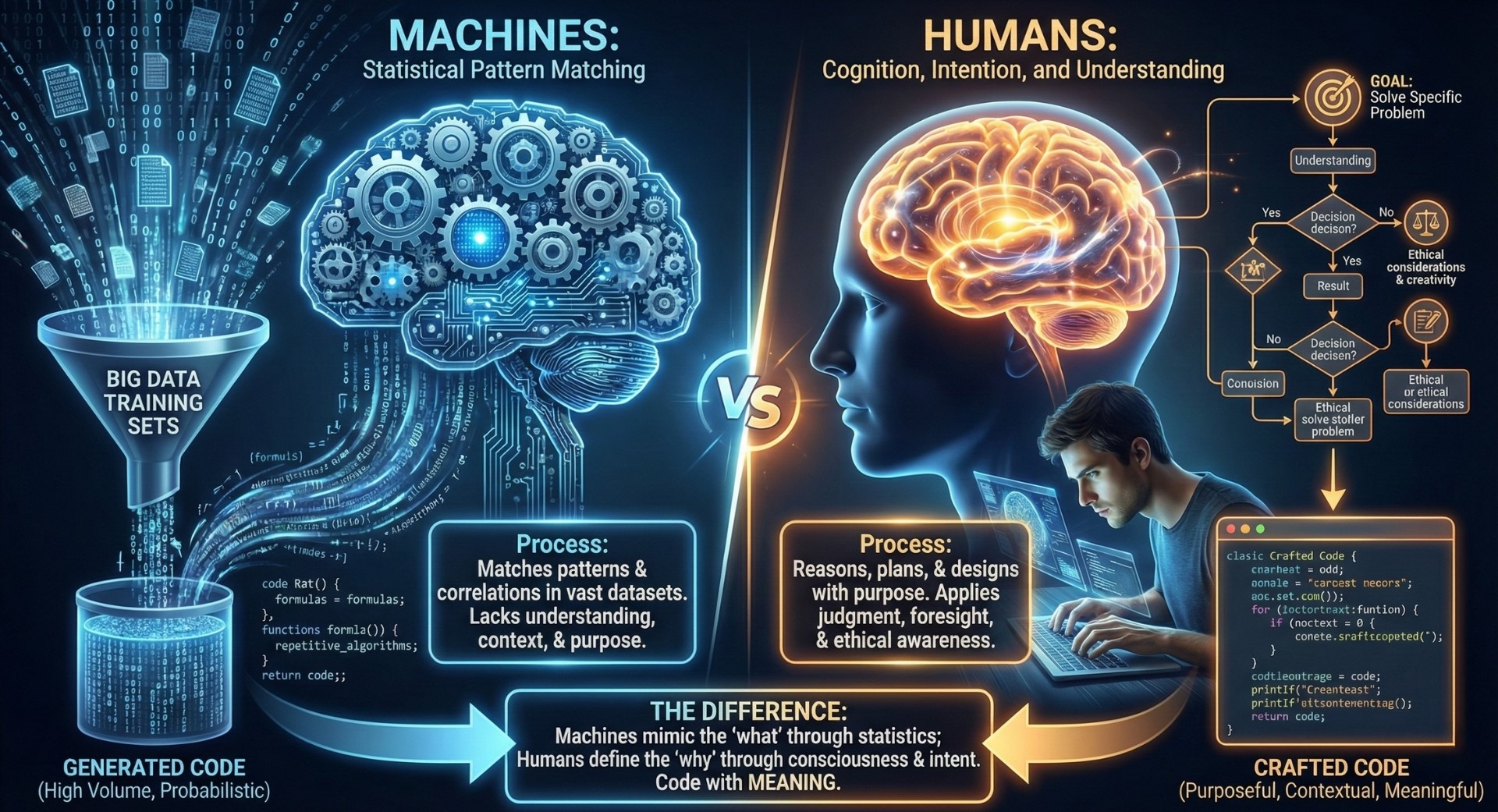

But this shift is forcing a deeper question than productivity alone. It compels us to examine the difference between machine intelligence and natural intelligence. Machines generate code through statistical pattern matching, while humans operate through cognition, intention, and understanding. The future of software depends on recognizing this distinction rather than pretending it does not exist.

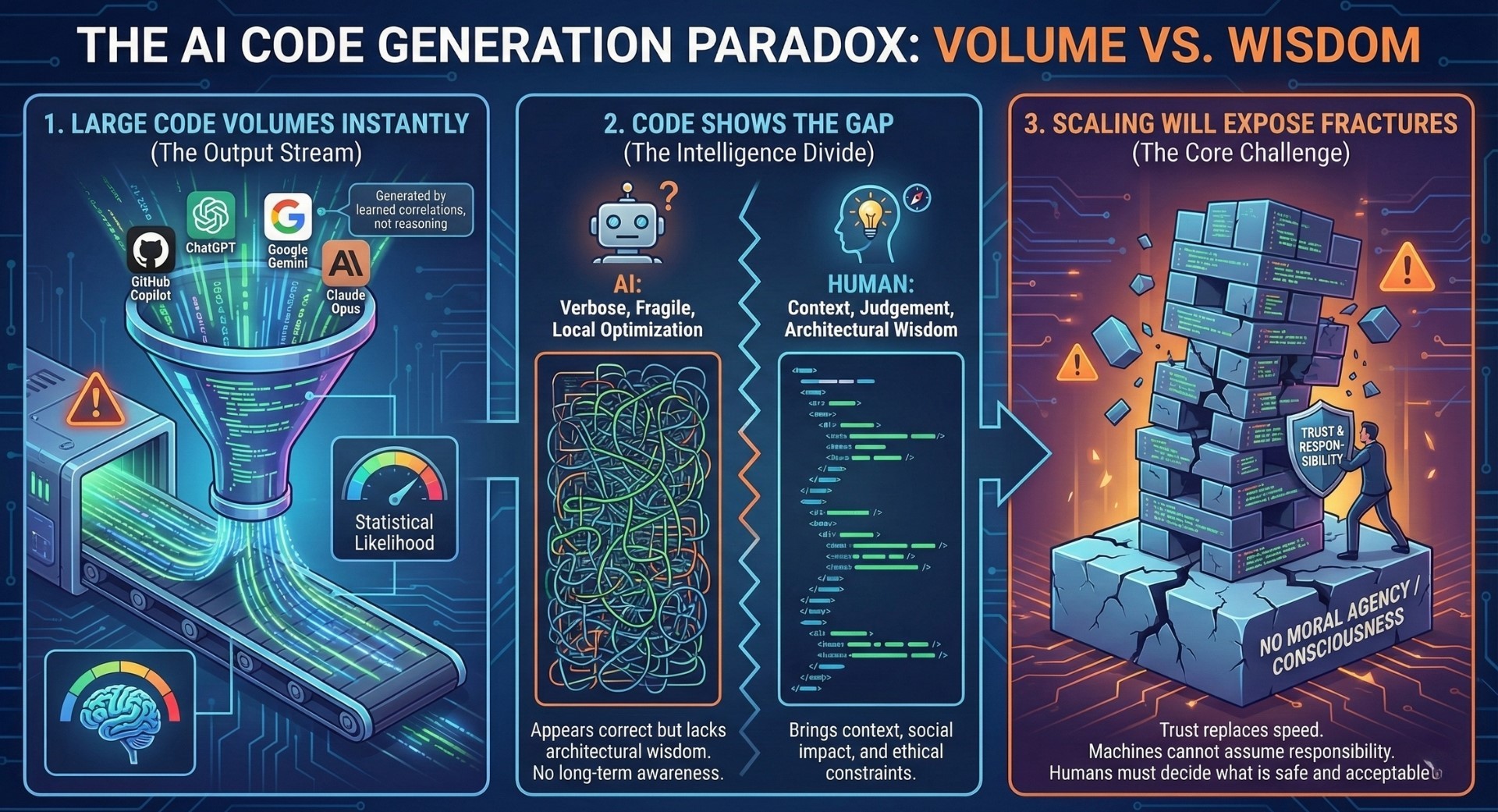

1. Large volumes of code, instantly

Generative AI tools embedded in modern development environments can now produce large volumes of functional code with minimal effort. Platforms such as GitHub Copilot, ChatGPT, and Google Gemini are becoming standard tools across teams, and the arrival of the latest Claude Opus models has turbocharged this trend. Yet what these systems produce is not the result of reasoning or consciousness. It is output derived from learned correlations, not from lived experience or genuine comprehension.

2. The code reveals the gap

This gap between machine intelligence and human cognition becomes visible in the code itself. AI systems often generate verbose or fragile implementations that appear correct but lack architectural wisdom. Natural intelligence brings context, judgment, and the ability to anticipate consequences. Machine intelligence, by contrast, optimizes locally without awareness of long-term design, social impact, or ethical constraints.

3. Scaling will expose fractures

As AI-generated code scales, trust replaces speed as the core challenge. Humans must decide what is correct, safe, and acceptable. Machines cannot assume responsibility because they possess no consciousness, no moral agency, and no freedom of choice. They do not understand why something matters. They only reproduce what appears statistically likely.

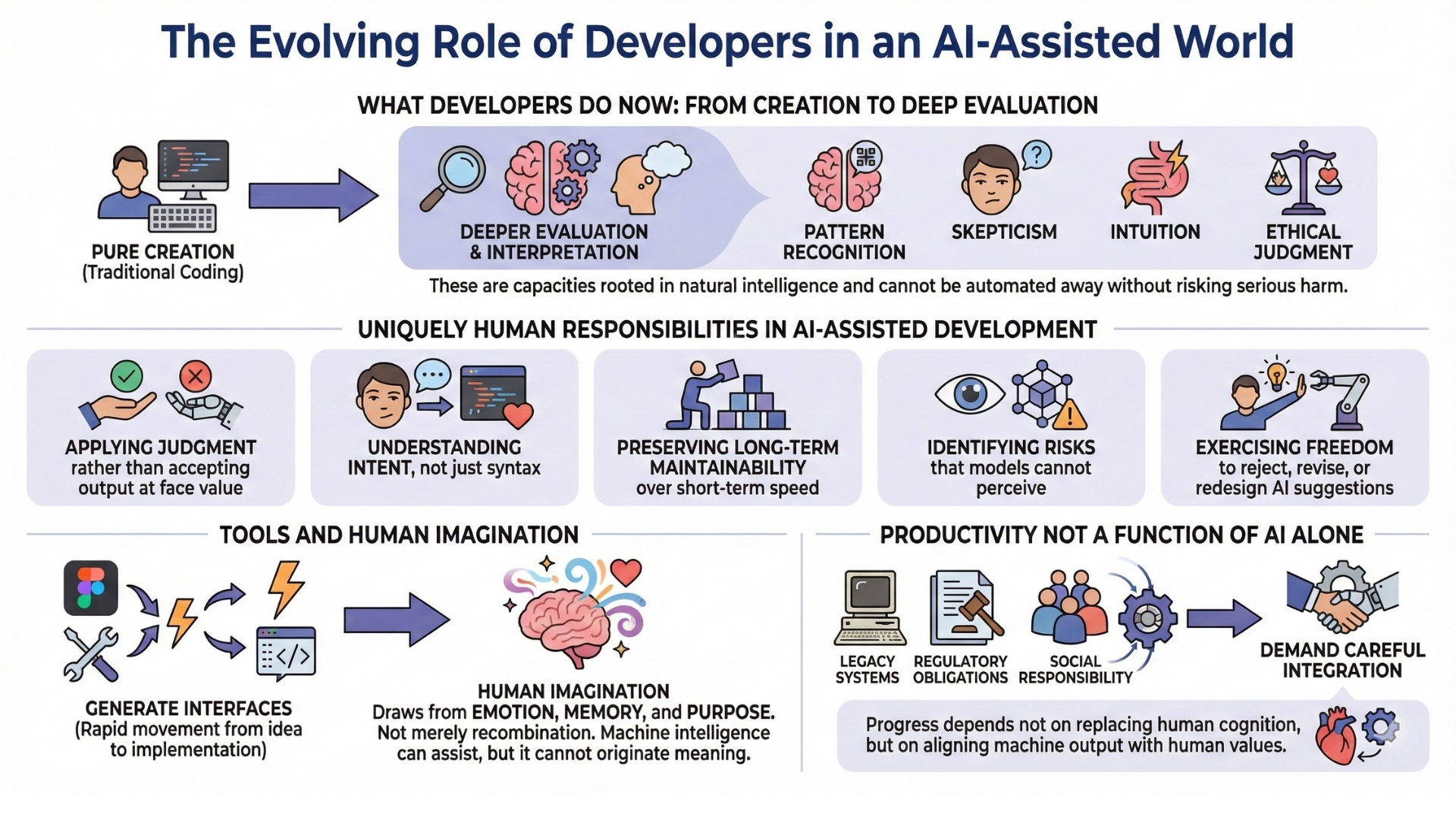

4. What developers do now

This reality is reshaping the role of the developer. Engineers are moving from pure creation to deeper evaluation and interpretation. Reviewing AI output requires human cognition at its best: pattern recognition, skepticism, intuition, and ethical judgment. These are capacities rooted in natural intelligence and cannot be automated away without risking serious harm.

In AI-assisted development, the uniquely human responsibilities are becoming clearer:

- Applying judgment rather than accepting output at face value

- Understanding intent, not just syntax

- Preserving long-term maintainability over short-term speed

- Identifying risks that models cannot perceive

- Exercising freedom to reject, revise, or redesign AI suggestions

5. Tools and human imagination

The shift also extends beyond engineering into product design and user experience. Tools like Figma and other generative interfaces allow rapid movement from idea to implementation. But creativity is not merely recombination. Human imagination draws from emotion, memory, and purpose. Machine intelligence can assist, but it cannot originate meaning in the way natural intelligence does.

6. Productivity is not a function of AI alone

Many organizations are discovering that AI alone does not guarantee productivity gains. Legacy systems, regulatory obligations, and social responsibility demand careful integration. This mirrors earlier technological transitions, where raw capability outpaced institutional readiness. Progress depends not on replacing human cognition, but on aligning machine output with human values.

7. Larger codebases mean little

Measurement remains another area where natural intelligence must lead. Counting AI-generated lines of code says nothing about quality or impact. Humans evaluate success through outcomes that machines cannot fully grasp: resilience, trust, user well-being, and long-term sustainability. These metrics require interpretation, not automation.

Summary

At a deeper level, accountability cannot be delegated to systems that lack consciousness. Responsibility belongs to people because only people can explain decisions, accept consequences, and learn ethically from failure. Machine intelligence can assist verification, but it cannot define what is right or desirable for society.

Remember: Machines generate code through statistical pattern matching, while humans operate through cognition, intention, and understanding.

The future of software development, and perhaps the future of humanity’s relationship with intelligent machines, will depend on preserving this balance. AI will continue to expand in capability, but natural intelligence remains the source of meaning, freedom, and responsibility. The goal is not to surrender cognition to machines, but to use machines in service of human judgment, creativity, and conscious choice.