ML basics

1. Introduction to Machine Learning: Definition, Origins, and Why It Matters

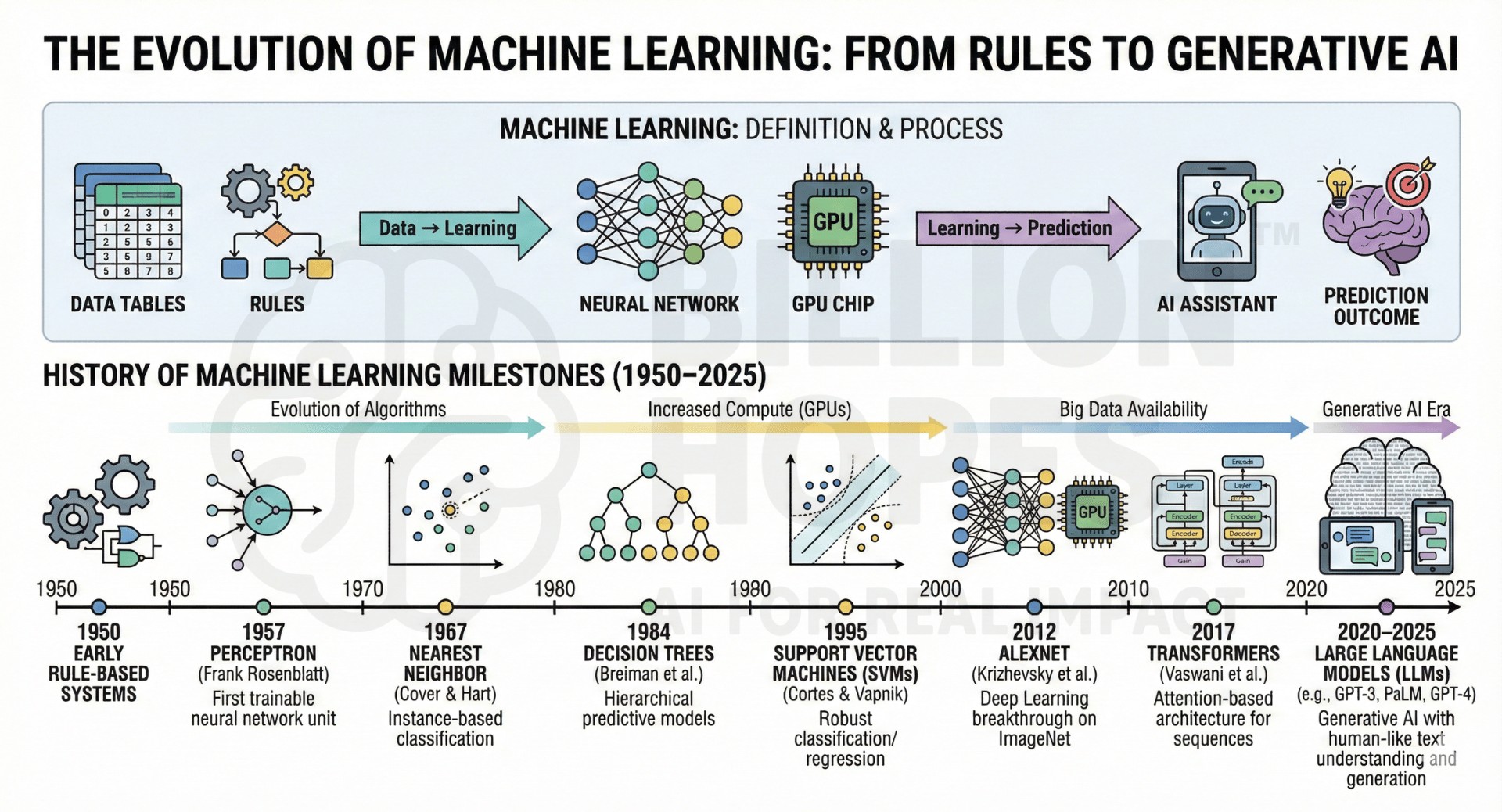

Machine Learning (ML) is the field of study in which computers learn patterns from data and improve their performance without being explicitly programmed. Arthur Samuel, who coined the term in 1959, is widely credited with describing ML as the “field of study that gives computers the ability to learn without being explicitly programmed.” In modern usage, machine learning sits at the heart of artificial intelligence, enabling systems to adapt to new information.

ML emerged from statistics, optimization, and computing theory. Early algorithms – like perceptrons (1957), nearest neighbours (1967), and decision trees (1984) – laid the groundwork. But ML truly accelerated with growing computing power, especially from the late 2000s onward, when GPUs (notably NVIDIA's) made large-scale neural-network training practical.

Machine learning matters because it solves problems too complex for human-coded rules: detecting fraud in billions of transactions, classifying millions of photos, predicting customer churn, and supporting medical diagnosis. Today, almost every industry – from finance and healthcare to education and agriculture – relies on ML systems to operate efficiently and intelligently.

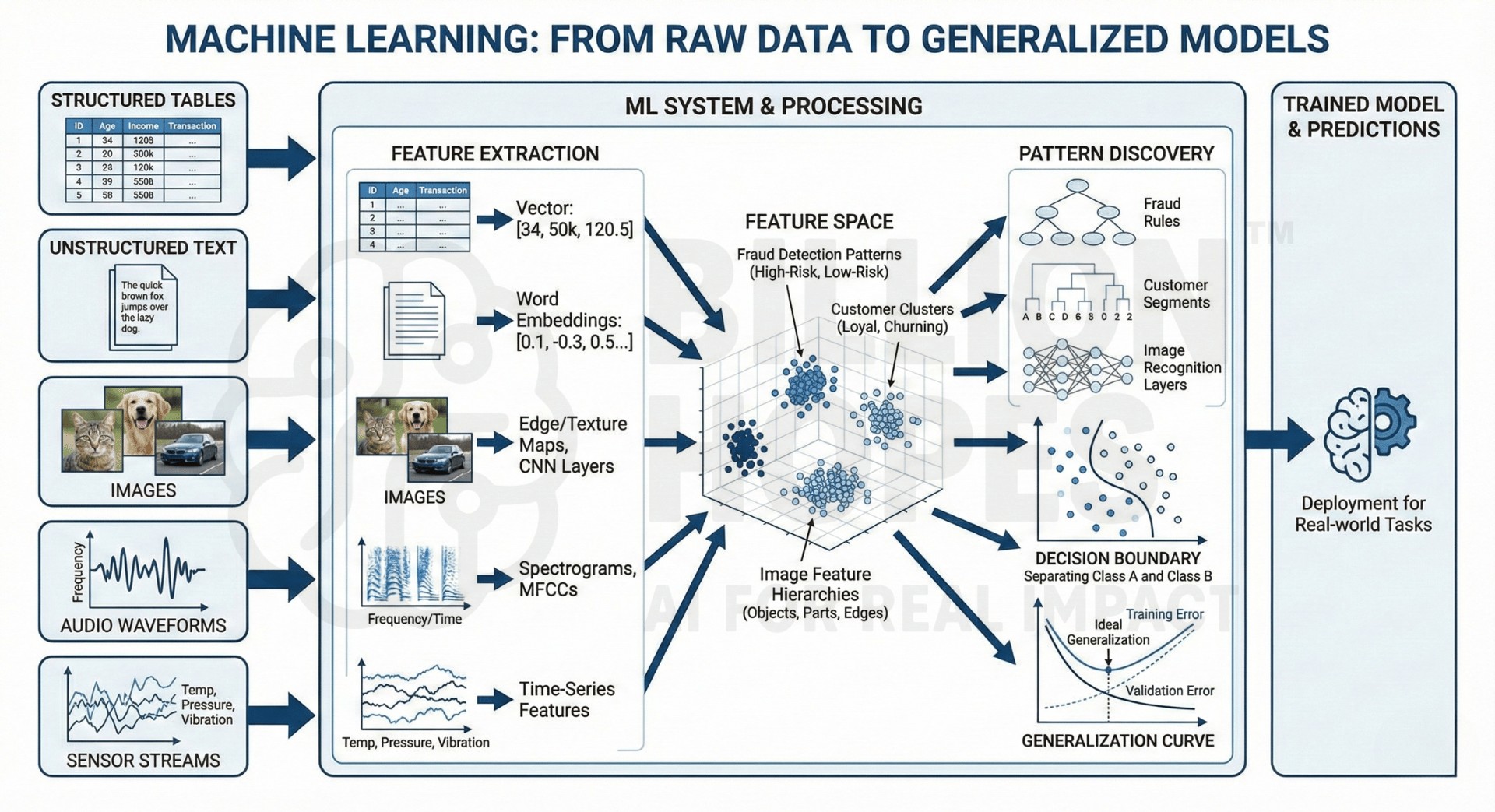

2. How Machine Learning Works: Data, Patterns, and Generalization

ML rests on three essential ideas: data, patterns, and generalization.

- Data

Machine learning learns from examples.

Types of data include:

- Structured data: tables, spreadsheets, transaction logs

- Unstructured data: images, audio, text, social media posts

- Semi-structured data: XML files, JSON logs

- Streaming data: IoT sensors, real-time events

Big datasets like ImageNet (14 million images), COCO, LibriSpeech, and Wikipedia corpora enabled modern breakthroughs.

- Patterns & Representation

Machine learning works by discovering statistical patterns – clusters, correlations, boundaries, and latent structures – that would be impractical for humans to code by hand.

For example:

- Fraud detection systems learn patterns of unusual transactions.

- Recommendation systems learn user preferences from past behavior.

- Weather models learn atmospheric structures from decades of climate data.

- Generalization

An ML model must not simply memorize data; it must generalize to new, unseen data.

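Cross-validation estimates how well a model will perform on unseen data, while regularization and dropout discourage overfitting and learning rate scheduling helps training converge stably. Together, these techniques help models stay robust.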

Generalization is what makes ML useful in the real world.
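To make cross-validation concrete, here is a minimal pure-Python sketch of k-fold cross-validation. It assumes contiguous folds without shuffling and a deliberately trivial baseline model (`fit_mean` and `predict_mean` are hypothetical names) that always predicts the training mean; in practice you would shuffle the data first and plug in a real model. Each example is held out exactly once, so the averaged error reflects performance on data the model did not see during fitting.

```python
from statistics import mean

def k_fold_indices(n, k):
    """Yield (train_indices, validation_indices) for k contiguous folds over n examples."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = [i for i in range(n) if not start <= i < start + size]
        yield train, val
        start += size

def cross_val_mae(xs, ys, fit, predict, k=5):
    """Average the validation mean absolute error over k folds; each example is held out once."""
    fold_scores = []
    for train, val in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        errors = [abs(predict(model, xs[i]) - ys[i]) for i in val]
        fold_scores.append(mean(errors))
    return mean(fold_scores)

def fit_mean(xs, ys):
    """Hypothetical baseline 'model': just remember the mean training target."""
    return mean(ys)

def predict_mean(model, x):
    """The baseline ignores x and always predicts the stored mean."""
    return model

print(cross_val_mae([0, 1, 2, 3], [0, 0, 1, 1], fit_mean, predict_mean, k=2))
```

A model that merely memorized its training folds would score well on them but poorly here, which is exactly the gap cross-validation is designed to expose.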

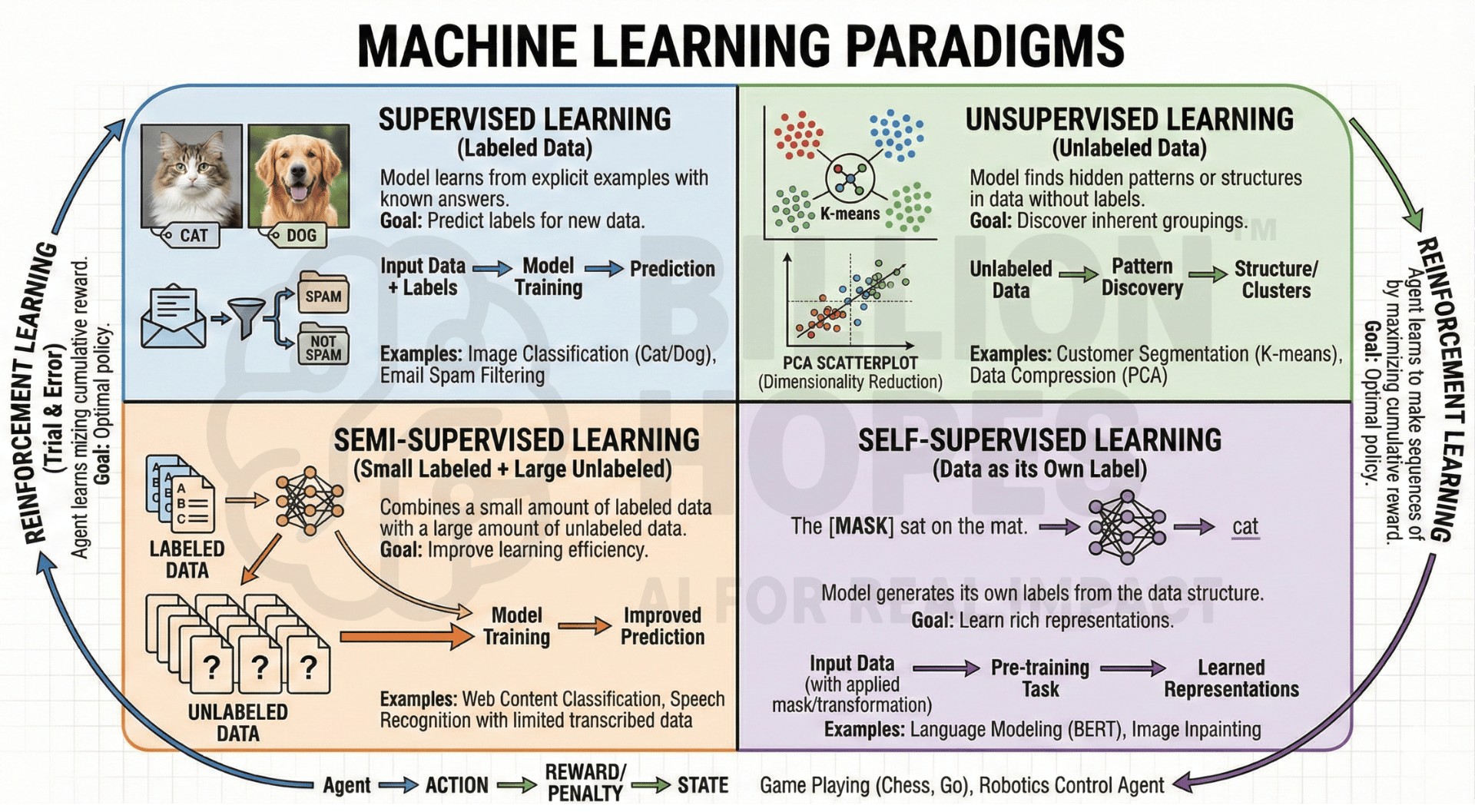

3. Types of Machine Learning: Supervised, Unsupervised, Semi-Supervised, and Beyond

Machine learning has multiple paradigms, each suited to different problem types.

- Supervised Learning

The most common category: learning from labeled examples.

Examples include:

- Image classification: Cat vs dog images

- Spam detection: Email → spam or not

- Loan approval models: Predicting credit risk

- Medical diagnosis models: Classifying X-rays as normal or abnormal

Famous algorithms:

- Linear regression, logistic regression

- Random forests, gradient boosting (XGBoost, LightGBM)

- Deep neural networks

- Transformers for NLP
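As a minimal illustration of supervised learning, the sketch below trains logistic regression by batch gradient descent on a tiny, made-up spam dataset. The two features per email (occurrences of the word "free" and number of links) and the hyperparameters `lr` and `epochs` are assumptions chosen for illustration; a real spam filter would use a library implementation and far richer features.

```python
import math

def sigmoid(z):
    """Squash a real-valued score into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(X, y, lr=0.1, epochs=2000):
    """Fit weights w and bias b by batch gradient descent on the average log-loss."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            err = p - yi  # gradient of the log-loss with respect to the score
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict(w, b, x, threshold=0.5):
    """Label an example as spam (1) if its predicted probability reaches the threshold."""
    return 1 if sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= threshold else 0

# Hypothetical features per email: [occurrences of "free", number of links]
X = [[0, 0], [0, 1], [1, 0], [3, 4], [4, 2], [5, 5]]
y = [0, 0, 0, 1, 1, 1]  # labels: 1 = spam, 0 = not spam
w, b = train_logistic(X, y)
print([predict(w, b, x) for x in X])
```

The labels are what make this supervised: every update compares the model's probability with the known answer for that email.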

- Unsupervised Learning

The model finds patterns without labels.

Examples:

- Customer segmentation using clustering

- Dimensionality reduction for visualization

- Anomaly detection in cybersecurity

- Topic modeling from text

Algorithms: k-means, DBSCAN, PCA, t-SNE, autoencoders.
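Of the algorithms listed above, k-means is the easiest to sketch. The pure-Python version below implements Lloyd's algorithm on made-up 2-D points (imagine two hypothetical customer segments described by two behavioural features). It initializes with k randomly chosen data points; library implementations typically use smarter initialization such as k-means++.

```python
import math
import random

def assign(points, centroids):
    """Assignment step: index of the nearest centroid for every point."""
    return [min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c])) for p in points]

def kmeans(points, k, iterations=100, seed=0):
    """Lloyd's algorithm: alternate assignment and centroid-update steps until stable."""
    centroids = random.Random(seed).sample(points, k)  # start from k distinct data points
    for _ in range(iterations):
        labels = assign(points, centroids)
        new_centroids = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                # Update step: move the centroid to the mean of its members
                new_centroids.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centroids.append(centroids[c])  # leave an empty cluster's centroid in place
        if new_centroids == centroids:
            break  # converged: the centroids stopped moving
        centroids = new_centroids
    return centroids, assign(points, centroids)

# Made-up points forming two well-separated groups
points = [(1, 1), (1.5, 2), (1, 1.5), (8, 8), (8.5, 9), (9, 8)]
centroids, labels = kmeans(points, k=2)
print(labels, centroids)
```

No labels are given anywhere: the grouping emerges purely from distances between points.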

- Semi-Supervised Learning

Combines a small labeled dataset with a large unlabeled one.

Used in:

- Medical imaging (labels costly)

- Voice recognition

- Autonomous driving

- Web-scale NLP

- Self-Supervised Learning

Models generate their own labels.

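This approach underpins the pretraining of modern LLMs such as GPT-4, Llama 3, and Gemini.

Examples: predicting the next word from the preceding words (GPT-style models), or masking words and predicting them back (BERT-style models).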
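The key trick is that the labels come from the data itself. This sketch turns one sentence into training examples for both objectives. Whitespace tokenization and the `[MASK]` placeholder are simplifying assumptions (real models use subword tokenizers), and the mask positions are passed in explicitly here for reproducibility, whereas BERT samples about 15% of tokens at random.

```python
def next_token_pairs(tokens):
    """Next-word objective: each prefix is an input; the token that follows is its label."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def masked_pairs(tokens, mask_positions, mask_token="[MASK]"):
    """Masked objective: hide selected tokens; the hidden originals become the labels."""
    masked = [mask_token if i in mask_positions else t for i, t in enumerate(tokens)]
    labels = {i: tokens[i] for i in sorted(mask_positions)}
    return masked, labels

tokens = "the cat sat on the mat".split()
for context, target in next_token_pairs(tokens)[:2]:
    print(context, "->", target)
print(masked_pairs(tokens, {2}))
```

No human annotation is involved: one unlabeled sentence yields five next-word examples, which is why this scales to web-sized corpora.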

- Reinforcement Learning

Learning through trial and error, guided by rewards (covered in depth in a later article).

Used in: robotics, AlphaGo, resource optimization, and gaming AI.
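The simplest reinforcement-learning setting is the multi-armed bandit: one situation, several possible actions, and a reward signal. The sketch below uses the classic epsilon-greedy strategy, exploiting the best-known action most of the time while exploring at random with probability `epsilon`. The reward probabilities are made up and simulated; full RL (as in robotics or AlphaGo) adds states and long-term credit assignment, which this toy omits.

```python
import random

def epsilon_greedy(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Learn each action's average reward from experience alone, trading off exploration and exploitation."""
    rng = random.Random(seed)
    n_actions = len(reward_probs)
    values = [0.0] * n_actions  # running estimate of each action's average reward
    counts = [0] * n_actions    # how many times each action has been tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)  # explore: try a random action
        else:
            action = max(range(n_actions), key=lambda a: values[a])  # exploit the best estimate
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0  # simulated environment
        counts[action] += 1
        values[action] += (reward - values[action]) / counts[action]  # incremental mean update
    return values, counts

values, counts = epsilon_greedy([0.2, 0.5, 0.8])
print([round(v, 2) for v in values], counts)
```

The agent is never told which action is best; it discovers this from rewards and ends up choosing the highest-paying action most often.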

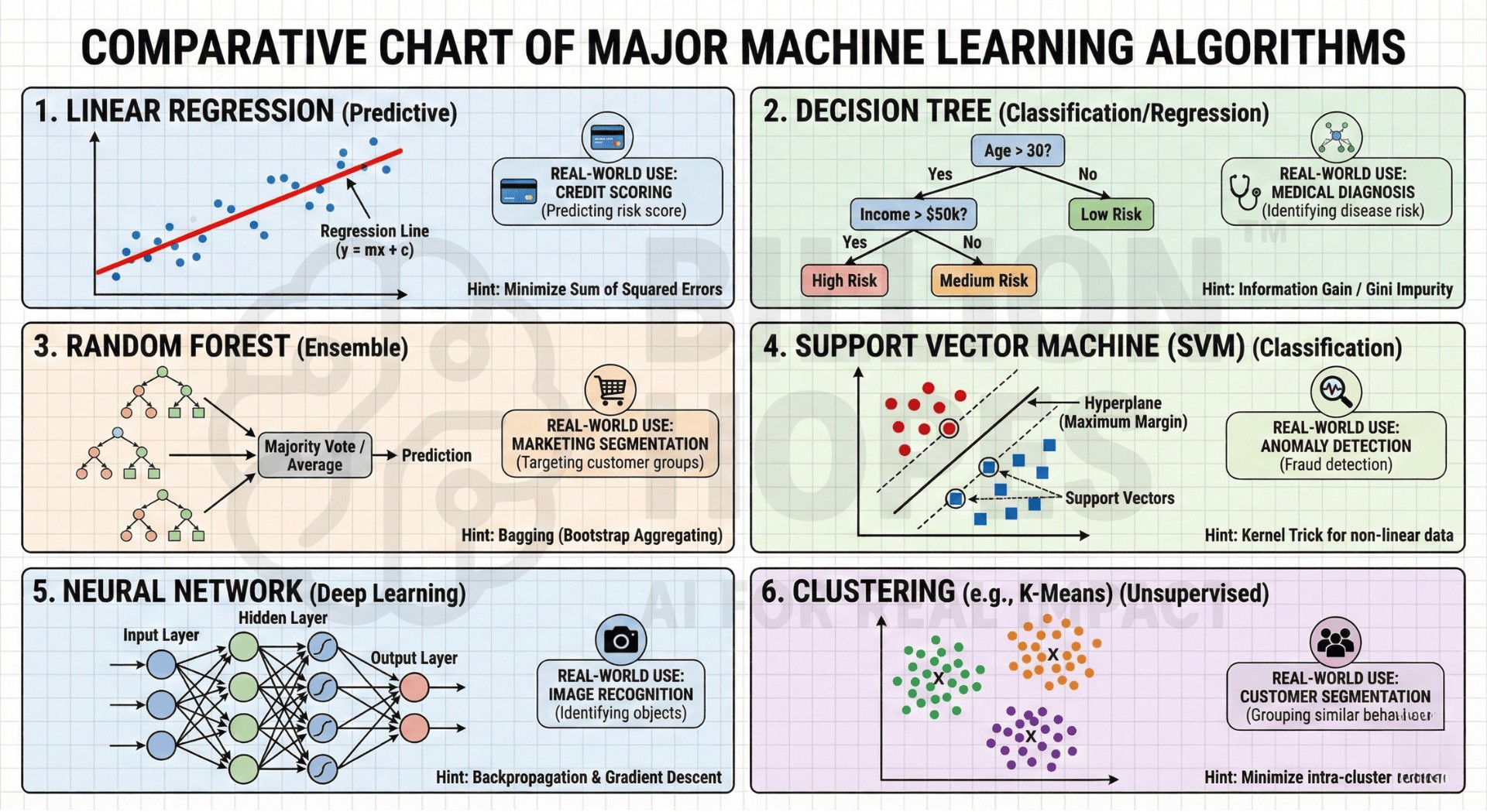

4. Important Algorithms and Models in Machine Learning

Machine learning has hundreds of algorithms, each excelling in specific scenarios.

- Linear Models

Useful for interpretability and speed.

Examples:

- Advertising spend → sales

- Predicting house prices
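Part of what makes linear models interpretable is that the fitted coefficients can be read directly. The sketch below fits a one-feature ordinary-least-squares line in closed form. The advertising data is made up and lies exactly on a line so the result is easy to check: the slope says how many extra units are sold per extra unit of spend.

```python
from statistics import mean

def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares for y = intercept + slope * x, using the closed-form solution."""
    x_bar, y_bar = mean(xs), mean(ys)
    covariance = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    variance = sum((x - x_bar) ** 2 for x in xs)
    slope = covariance / variance
    intercept = y_bar - slope * x_bar
    return intercept, slope

# Made-up data: advertising spend (thousands) vs. units sold
spend = [1, 2, 3, 4, 5]
sales = [12, 14, 16, 18, 20]
intercept, slope = fit_simple_linear_regression(spend, sales)
print(f"sales = {intercept} + {slope} * spend")
print("forecast for spend = 6:", intercept + slope * 6)
```

Fitting takes a single pass over the data, which is the speed advantage mentioned above.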

- Decision Trees & Ensembles

Popular for tabular business data.

Examples:

- Credit scoring

- Insurance claim prediction

- Churn prediction

Gradient boosting algorithms – XGBoost, CatBoost, LightGBM – frequently win Kaggle competitions on tabular data.
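Decision trees grow by repeatedly choosing the split that makes the resulting groups as pure as possible. This sketch finds the best single threshold on one feature using Gini impurity, the criterion used by CART. The churn data and feature are hypothetical, and candidate thresholds are taken from observed values rather than the midpoints some libraries use. Ensembles such as random forests and gradient boosting combine many trees built from splits like this one.

```python
def gini(labels):
    """Gini impurity: probability that two labels drawn at random (with replacement) differ."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((labels.count(c) / n) ** 2 for c in set(labels))

def best_split(values, labels):
    """Return (threshold, weighted_gini) for the rule 'value <= threshold' with lowest impurity."""
    best = (None, float("inf"))
    for threshold in sorted(set(values))[:-1]:  # splitting at the maximum would leave one side empty
        left = [lab for v, lab in zip(values, labels) if v <= threshold]
        right = [lab for v, lab in zip(values, labels) if v > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (threshold, score)
    return best

# Hypothetical churn data: months since last purchase, and whether the customer churned (1) or not (0)
months = [1, 2, 3, 8, 9, 12]
churned = [0, 0, 0, 1, 1, 1]
print(best_split(months, churned))
```

A full tree applies the same search recursively to each side of the split until the groups are pure or a depth limit is reached.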

- Support Vector Machines

Work well on high-dimensional data like text.

- Neural Networks

Used for nearly all complex perception tasks:

- CNNs for images

- RNNs/LSTMs for sequences

- Transformers for language, vision, and multi-modal models
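Every architecture in this list is built from artificial neurons. As a minimal sketch, here is the perceptron learning rule (the 1957 algorithm mentioned in the introduction) learning logical AND from four examples. A single neuron like this can only separate classes with a straight line (it cannot learn XOR), which is one reason CNNs, RNNs, and Transformers stack many layers with non-linear activations.

```python
def predict(w, b, x):
    """Step activation: fire (1) if the weighted sum plus bias is positive."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b > 0 else 0

def train_perceptron(X, y, lr=1.0, epochs=20):
    """Perceptron rule: after each mistake, nudge the weights toward the correct answer."""
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        mistakes = 0
        for xi, yi in zip(X, y):
            update = lr * (yi - predict(w, b, xi))  # zero when the prediction is already correct
            if update:
                w = [wj + update * xj for wj, xj in zip(w, xi)]
                b += update
                mistakes += 1
        if mistakes == 0:  # every training example is classified correctly
            break
    return w, b

X = [[0, 0], [0, 1], [1, 0], [1, 1]]
y = [0, 0, 0, 1]  # logical AND
w, b = train_perceptron(X, y)
print(w, b, [predict(w, b, x) for x in X])
```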

- Probabilistic Models

Gaussian Mixture Models, Hidden Markov Models for speech, Bayesian networks for risk modeling.

- Clustering Models

Used in marketing, biological classification, fraud detection.

- Generative Models

GANs, VAEs, and Diffusion Models enable:

- Image synthesis

- Voice cloning

- Drug molecule generation

- Synthetic data creation

Real-world example: synthetic data from generative models has been used to supplement real training data for tasks such as autonomous driving when real data is scarce.

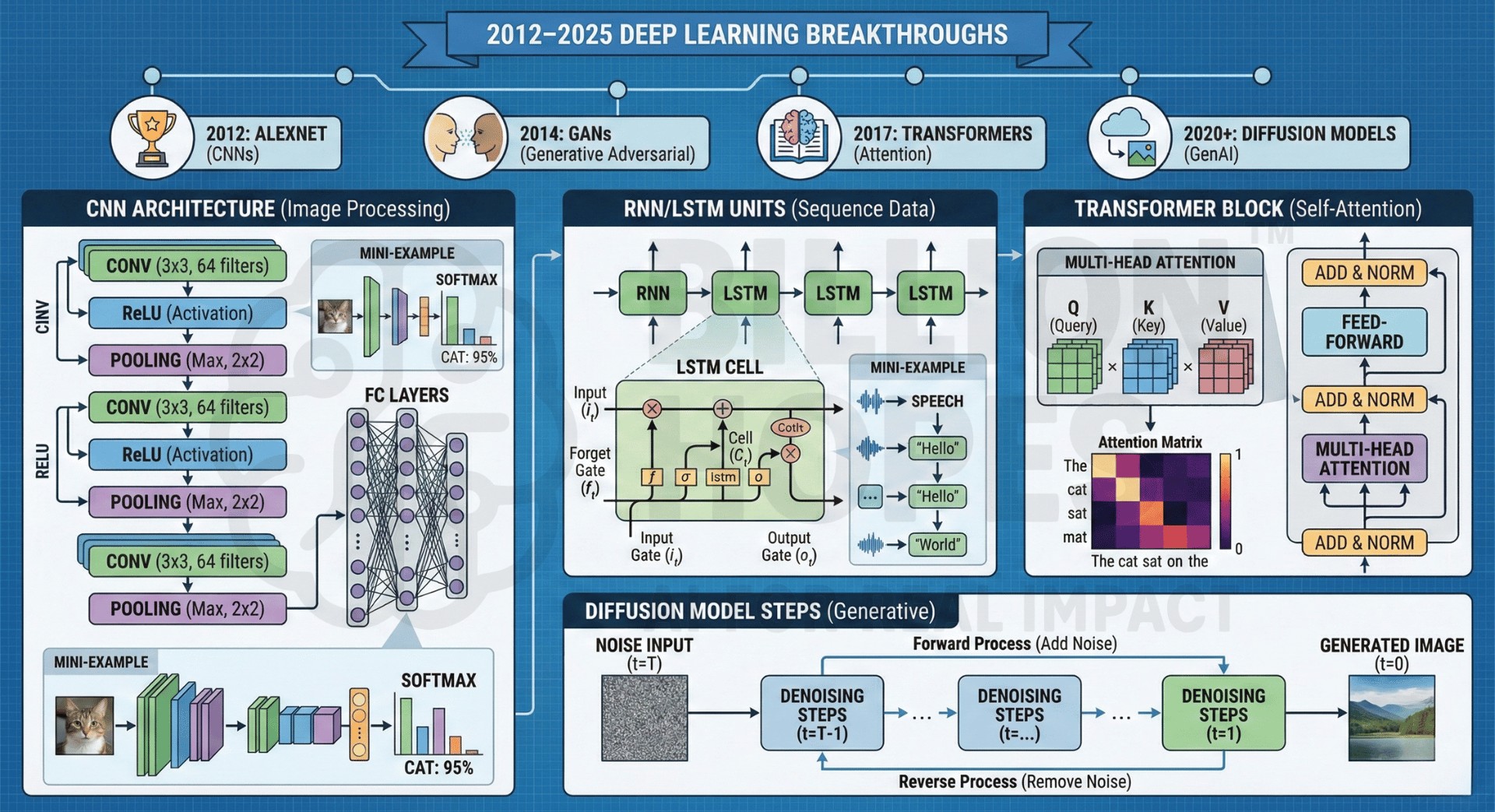

5. Neural Networks and Deep Learning: The Modern ML Engine

Deep learning is the subset of ML responsible for today’s revolution.

Key breakthroughs:

- 2012: AlexNet wins the ImageNet challenge (ILSVRC) by a wide margin → beginning of deep-learning dominance.

- 2014: GANs introduced by Ian Goodfellow and colleagues.

- 2017: Transformer architecture revolutionizes NLP.

- 2020–2025: Multi-modal AI (text + images + audio + video) becomes mainstream.

Why deep learning works so well

- Learns hierarchical representations (edges → textures → objects).

- Scales with large data and compute.

- Minimizes need for manually-engineered features.

Examples

- Medical Imaging: DeepMind’s retina disease detection.

- Autonomous Vehicles: Tesla, Waymo perception systems.

- Speech Recognition: Whisper, Siri, and Google ASR.

- LLMs: GPT-4, Claude 3, Gemini 2 – reportedly trained on trillions of tokens of text.

Architectures

- CNNs – Vision

- RNN/LSTM/GRU – Speech, sequences

- Transformers – Universal architecture

- Graph Neural Networks – Molecule prediction, social networks

- Diffusion Models – Stable Diffusion, Midjourney

Deep learning is now the default approach for complex AI tasks.

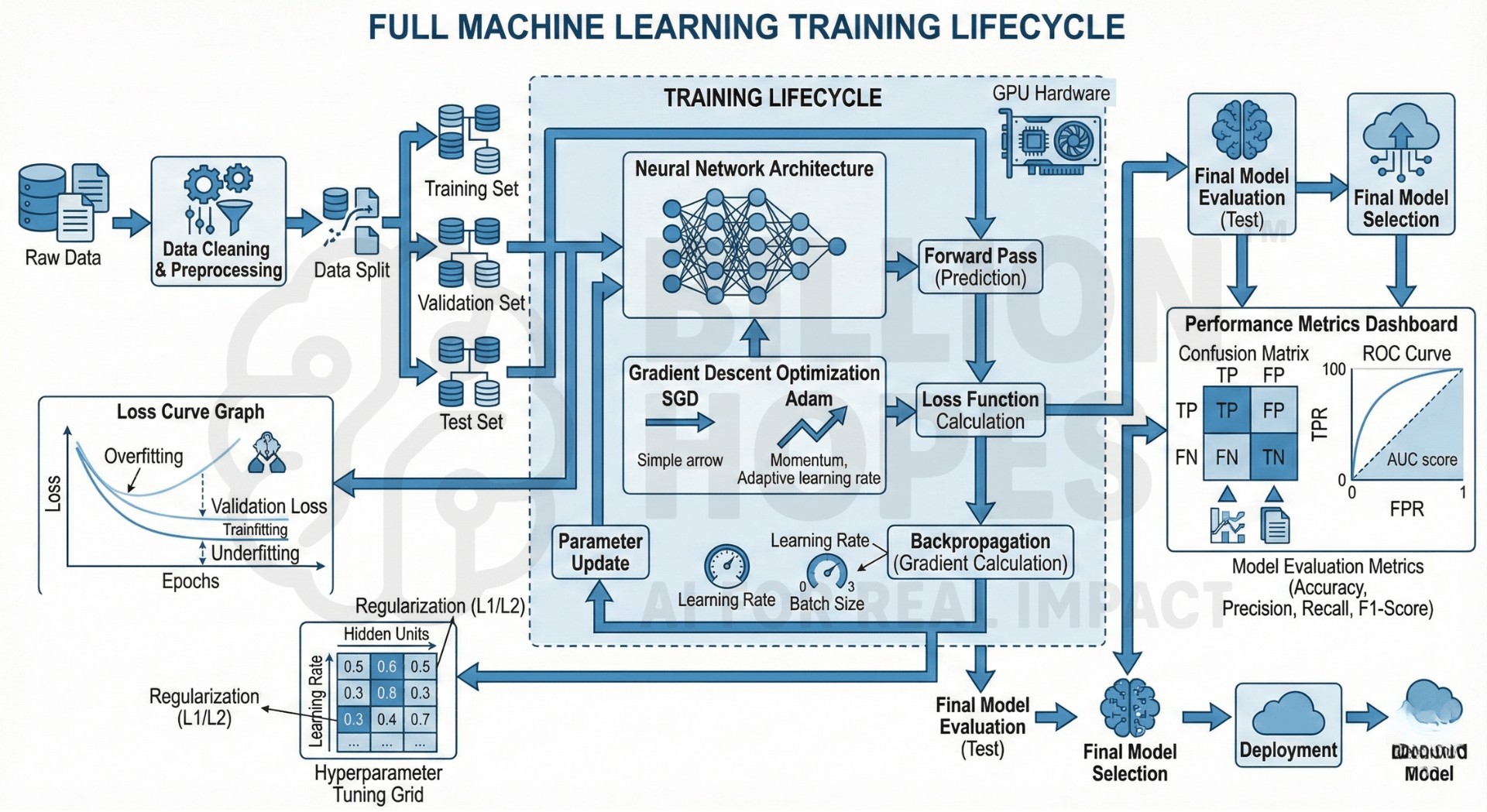

6. Model Training: Optimization, Tuning & Evaluation

Training ML models is a scientific and engineering process.

- Training Steps

- Collect data

- Clean & preprocess

- Split into train/validation/test

- Choose model

- Optimize using gradient descent variants (Adam, RMSProp, SGD)

- Regularize to avoid overfitting

- Evaluate performance

- Deploy
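The data-splitting step is easy to get subtly wrong, for example by evaluating on rows the model has already seen. A minimal sketch, assuming a 70/15/15 split (a common convention, not a rule) and a fixed `seed` for reproducibility: the data is shuffled once, the validation set guides model and hyperparameter choices, and the test set is reserved for the final evaluation.

```python
import random

def train_val_test_split(rows, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle once, then carve off test and validation sets; the remainder is for training."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)  # seeded so the split is reproducible
    n_test = int(len(rows) * test_frac)
    n_val = int(len(rows) * val_frac)
    test = rows[:n_test]
    val = rows[n_test:n_test + n_val]
    train = rows[n_test + n_val:]
    return train, val, test

train, val, test = train_val_test_split(range(100))
print(len(train), len(val), len(test))
```

For time-ordered data (such as transactions), a chronological split is usually safer than a random shuffle, because shuffling lets the model peek at the future.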

- Hyperparameter Tuning

Common methods:

- Grid search

- Random search

- Bayesian optimization

- Hyperband

- Tools such as Ray Tune and Optuna for large-scale tuning
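Grid search is the simplest of these methods: try every combination of candidate values and keep the one with the best validation score. The sketch below is a generic helper plus a hypothetical example that tunes `k` for a tiny k-nearest-neighbours classifier on made-up 1-D data. Random search, Bayesian optimization, and Hyperband replace the exhaustive loop with smarter sampling or early stopping, which matters once the grid grows large.

```python
import itertools

def grid_search(param_grid, score_fn):
    """Try every combination in param_grid; return (best_params, best_score), higher is better."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for combo in itertools.product(*(param_grid[name] for name in names)):
        params = dict(zip(names, combo))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score

# Hypothetical 1-D data as (feature, label); the point at 3.5 carries a deliberately noisy label
train_set = [(1, 0), (2, 0), (3, 0), (3.5, 1), (6, 1), (7, 1), (8, 1)]
val_set = [(3.4, 0), (1.5, 0), (6.8, 1)]

def knn_predict(x, k):
    """Majority vote among the k training points closest to x."""
    nearest = sorted(train_set, key=lambda pair: abs(pair[0] - x))[:k]
    votes = [label for _, label in nearest]
    return max(set(votes), key=votes.count)

def val_accuracy(k):
    """Fraction of validation examples classified correctly."""
    return sum(knn_predict(x, k) == label for x, label in val_set) / len(val_set)

best_params, best_score = grid_search({"k": [1, 3, 7]}, val_accuracy)
print(best_params, best_score)
```

Here `k = 1` is fooled by the noisy point and `k = 7` simply votes with the majority class, so the middle value wins on validation data.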

- Evaluation Metrics

- Regression: RMSE, MAE, R²

- Classification: Accuracy, AUC, precision, recall, F1

- NLP: BLEU, ROUGE

- Vision: mAP, IoU

- Image generation: FID (Fréchet Inception Distance)
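Several of these metrics reduce to simple formulas. The sketch below computes precision, recall, and F1 from counts of true positives, false positives, and false negatives, plus RMSE and MAE for regression. The example labels are made up. Recall is the same quantity as sensitivity, which the healthcare example that follows relies on.

```python
import math

def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision = TP/(TP+FP), recall = TP/(TP+FN), F1 = their harmonic mean."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def rmse(y_true, y_pred):
    """Root mean squared error: penalizes large errors more heavily."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def mae(y_true, y_pred):
    """Mean absolute error: the average size of the errors."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

print(precision_recall_f1([1, 1, 1, 0, 0, 0], [1, 1, 0, 1, 0, 0]))
print(rmse([2, 4, 6], [3, 5, 7]), mae([2, 4, 6], [3, 5, 7]))
```

On imbalanced problems such as fraud detection, accuracy alone can look excellent while recall is poor, which is why precision, recall, and F1 are reported alongside it.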

- Example

A typical healthcare AI project might proceed like this:

- 200,000 MRI scans collected

- Data augmentation applied

- A CNN trained on GPU clusters

- Performance evaluated using sensitivity/specificity

- Model integrated into radiology workflow

Real-world ML development requires engineering rigor and domain expertise.

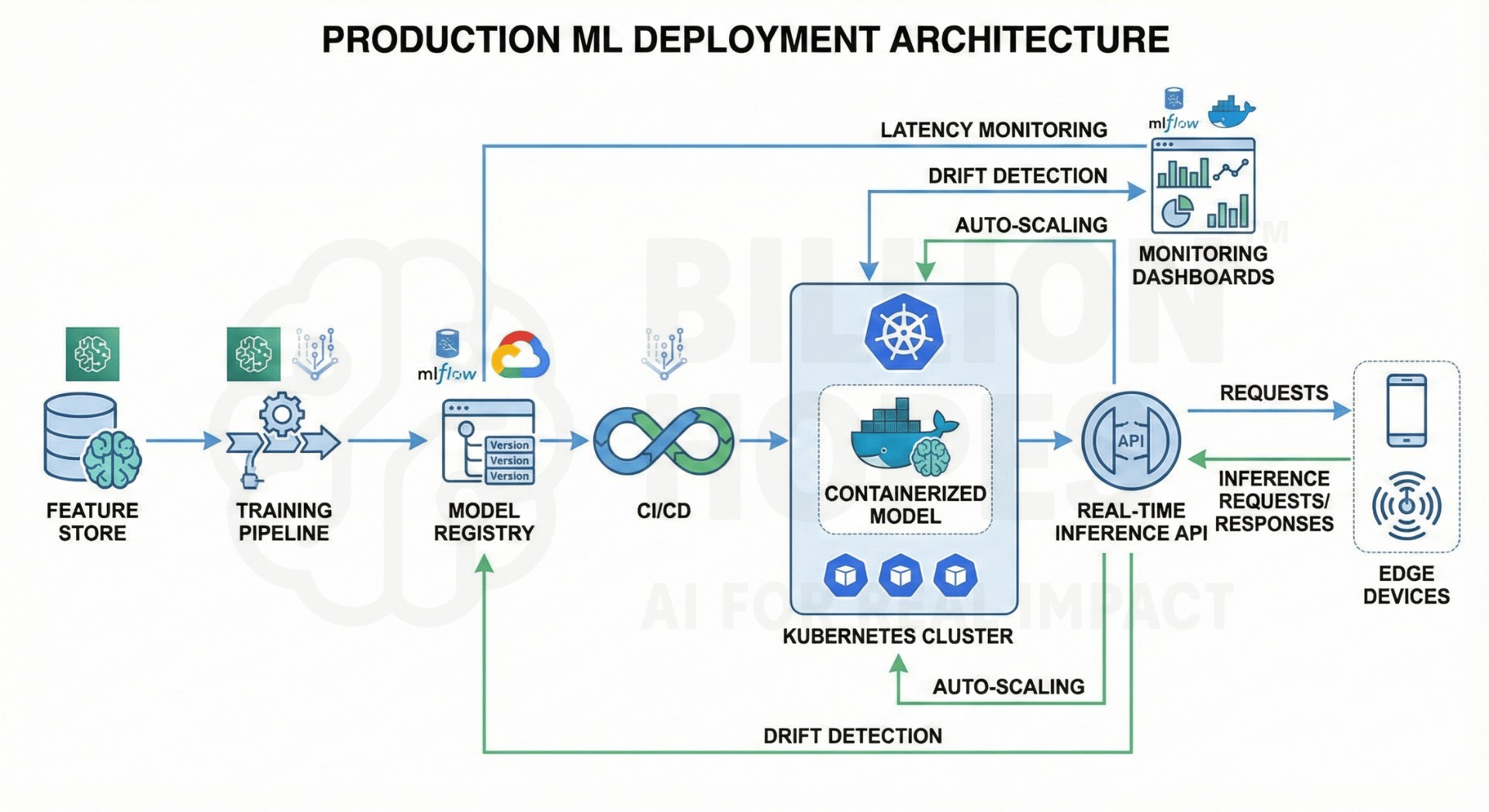

7. Deploying Machine Learning Systems: MLOps, Cloud & Real-Time Inference

Once a model is trained, it must be deployed reliably.

- MLOps (Machine Learning Operations)

Combines DevOps + Data Engineering + ML.

Tools:

- MLflow, Kubeflow

- Airflow, Prefect

- Amazon SageMaker, Google Vertex AI

- Docker, Kubernetes

- Deployment Options

- Cloud: AWS, GCP, Azure

- Edge devices: Mobile phones, drones, IoT devices

- On-premise: Banks, defense organizations

- Browser inference: WebGPU, ONNX Runtime Web

- Real-World Examples

- Netflix uses ML for recommendations, artwork selection, encoding optimization.

- Uber uses ML for ETA prediction and surge pricing.

- Tesla delivers neural-network Autopilot updates to its cars through over-the-air (OTA) software updates.

- Monitoring

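Model performance can degrade over time as input data shifts (data drift) or as the relationship between inputs and outcomes changes (concept drift).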

AI teams monitor:

- Latency

- Accuracy drop

- Data distribution shifts

Deployment is a continuous lifecycle, not a one-time task.
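One common way to watch for data distribution shift is the Population Stability Index (PSI), which compares a feature's binned distribution in live traffic with a reference sample such as the training data. The sketch below assumes equal-width bins derived from the reference sample. The thresholds in the comment (below 0.1 stable, above 0.25 significant) are an industry rule of thumb rather than a standard. PSI only looks at inputs, so detecting concept drift also requires tracking accuracy once true outcomes arrive.

```python
import math

def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index of one numeric feature: reference sample vs. live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to width 1 if the reference feature is constant

    def proportions(sample):
        counts = [0] * bins
        for x in sample:
            idx = int((x - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into the edge bins
        return [max(c / len(sample), eps) for c in counts]  # eps avoids log(0) for empty bins

    e, a = proportions(expected), proportions(actual)
    # Rule of thumb: < 0.1 little shift, 0.1-0.25 moderate, > 0.25 significant shift
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

reference = list(range(100))          # stand-in for training-time feature values
live = [x + 50 for x in range(100)]   # hypothetical live values shifted upward
print(psi(reference, reference), psi(reference, live))
```

In production, a check like this would typically run per feature on a schedule, raising an alert or triggering retraining when the index crosses the chosen threshold.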

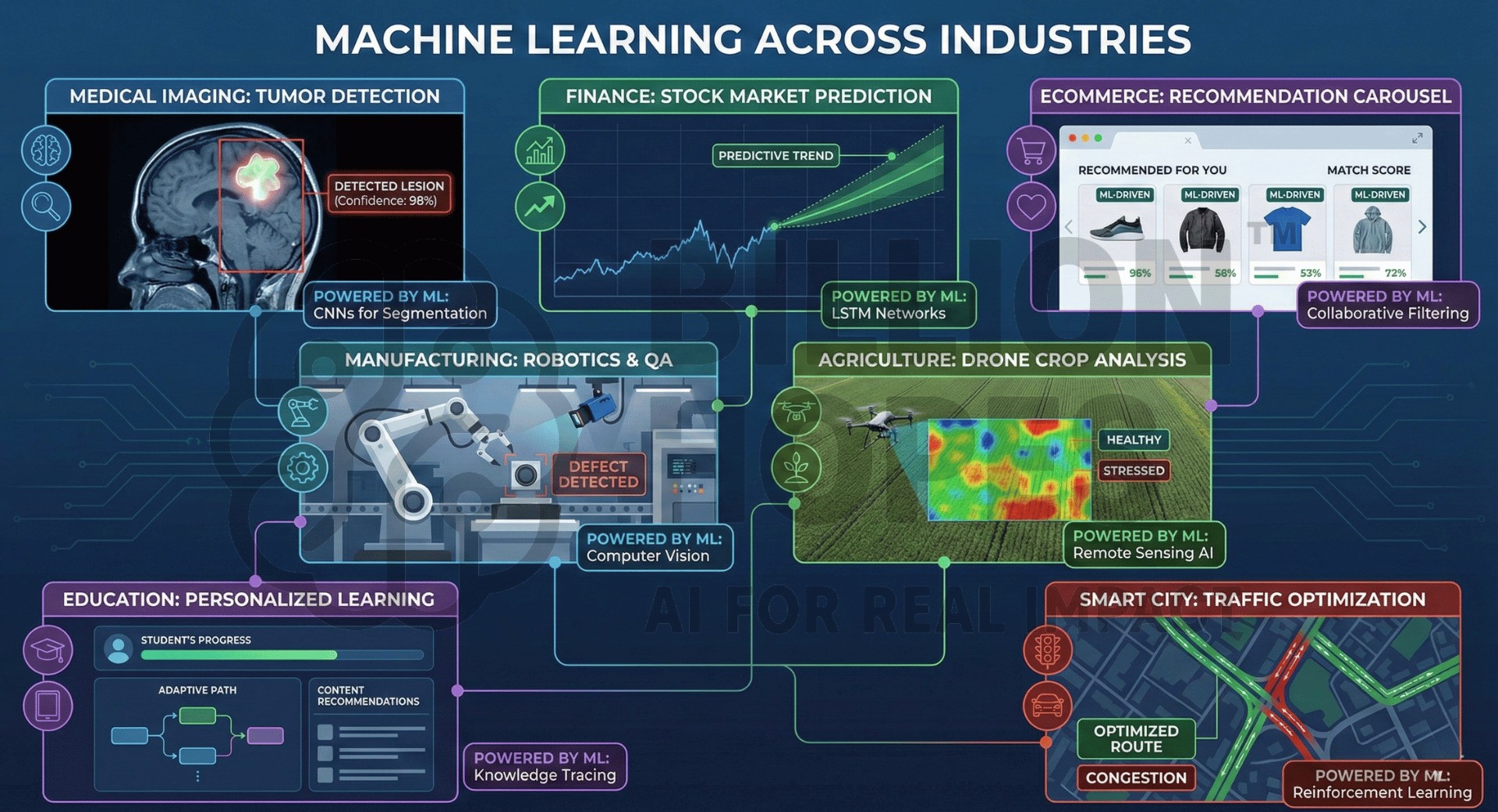

8. ML Applications Across Industries

Machine learning is the engine behind digital transformation worldwide.

- Healthcare

- Cancer detection from images

- Protein structure prediction supporting drug discovery (AlphaFold)

- Predicting patient deterioration in ICUs

- Finance

- Algorithmic trading

- Fraud detection

- Risk modeling and compliance

- Retail/E-commerce

- Personalized recommendations

- Supply chain forecasting

- Dynamic pricing

- Manufacturing

- Predictive maintenance using IoT

- Vision-based quality control

- Robotics optimization

- Agriculture

- Crop yield prediction

- Soil health modeling

- Drone-based plant monitoring

- Education

- AI tutors, adaptive learning

- Automated grading systems

- Personalized learning pathways

- Transport & Logistics

- Route optimization

- Demand forecasting

- Autonomous vehicle systems

By one widely cited PwC estimate, AI could add up to $15.7 trillion to global GDP by 2030.

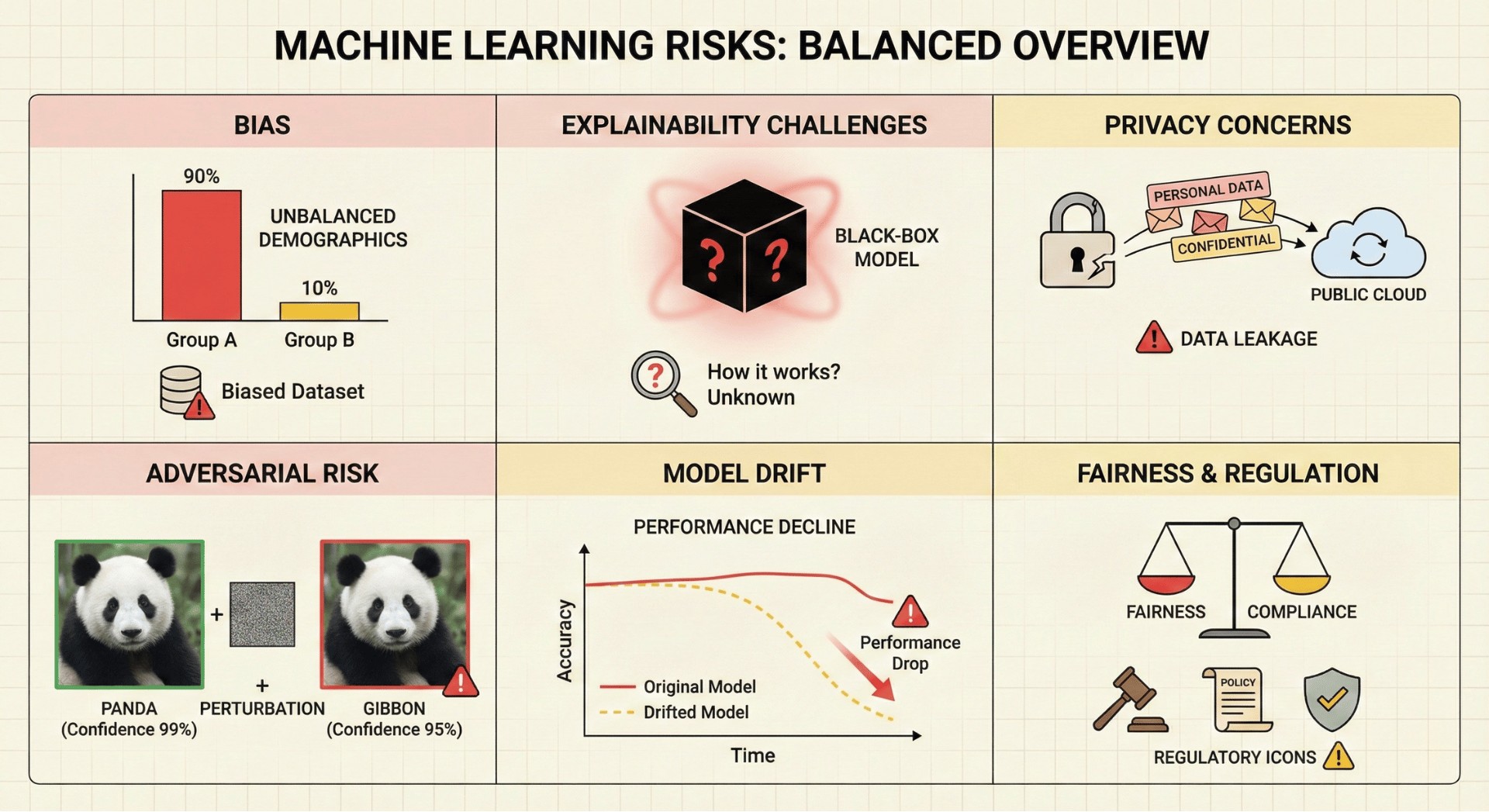

9. Challenges, Limitations & Risks of Machine Learning

- Data Issues

- Insufficient data

- Noisy labels

- Biased datasets (e.g., biased face datasets)

- Model Limitations

- Black-box nature of deep learning

- Difficulty explaining decisions

- Vulnerability to adversarial attacks

- Operational Risks

- Model drift in the real world

- Ethical misuse (deepfakes, financial manipulation)

- Privacy violations

- Examples of Failures

- Amazon’s hiring ML tool discriminated against women.

- Apple Card raised concerns for biased credit limits.

- COVID prediction models often failed due to poor generalization.

- Regulatory Landscape

- EU AI Act

- US AI safety guidance (e.g., the NIST AI Risk Management Framework)

- India’s Digital Personal Data Protection Act (DPDPA)

- Global safety efforts from OpenAI, Anthropic, Google DeepMind

ML must be used responsibly and transparently to ensure trust.

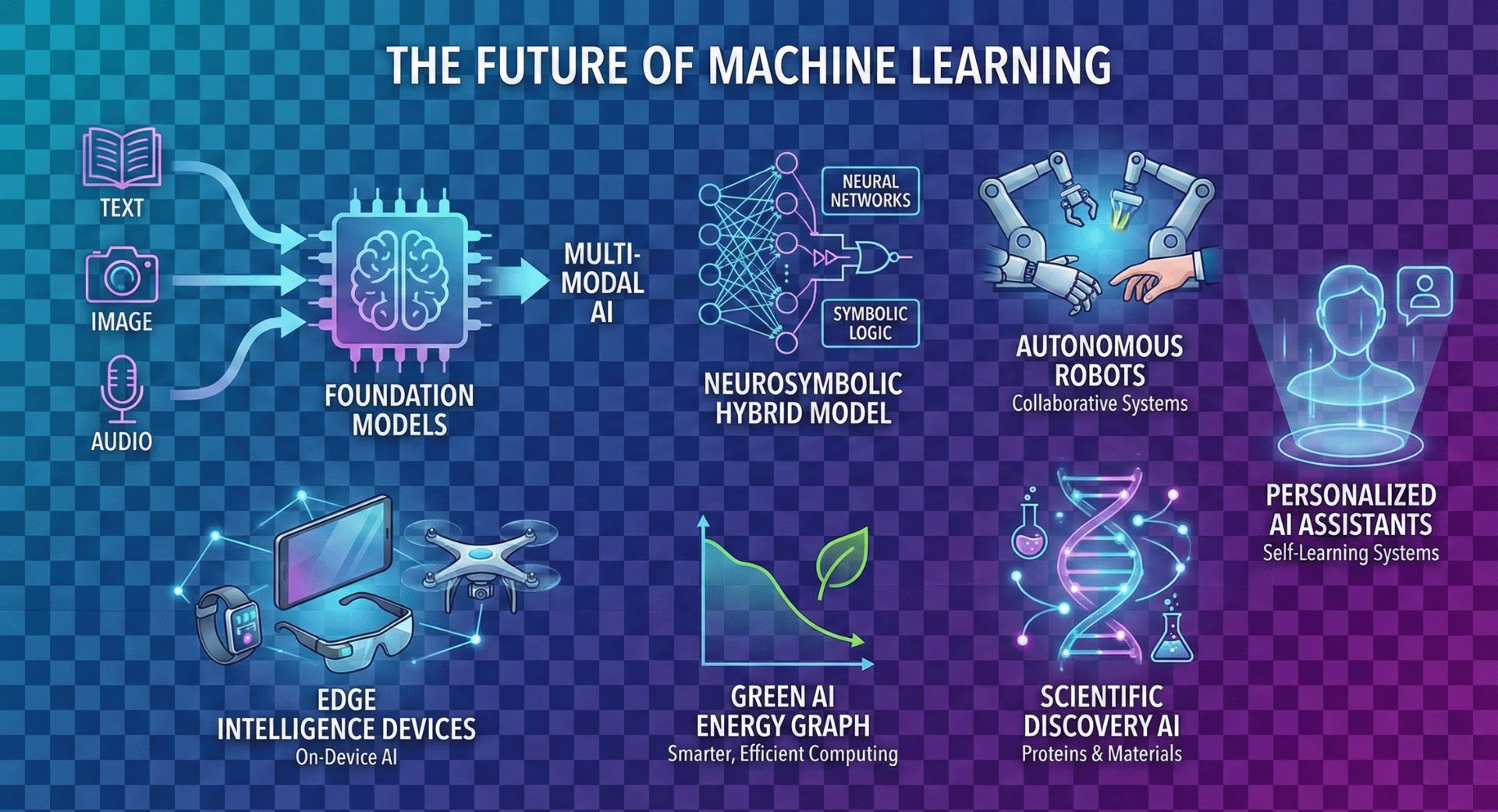

10. The Future of Machine Learning: Trends & Next-Generation Directions

Machine learning is advancing faster than at any point in its history.

Emerging Trends

- Foundation Models: Models trained on massive datasets and adaptable to many downstream tasks.

- Multi-modal AI: Text + vision + audio + video integrated systems.

- Edge intelligence: On-device ML for privacy-preserving AI.

- Self-learning systems: Less reliance on labeled datasets.

- Neurosymbolic AI: Combining logic + neural networks.

- Energy-efficient ML: Green AI and carbon-optimized models.

Industry Impact

- ML will be embedded in almost every software system.

- Job families such as data engineers, MLOps engineers, and prompt engineers will grow.

- New fields like “AI Safety” and “Responsible AI Governance” will become mainstream.

Long-Term Vision

Machine learning will fuel:

- Drug discovery at unprecedented speed

- Climate modeling with high accuracy

- Personalized education systems

- Fully autonomous robotic systems

- AI assistants capable of multi-step reasoning and planning

ML is becoming the backbone of scientific discovery, industrial productivity, and digital society.