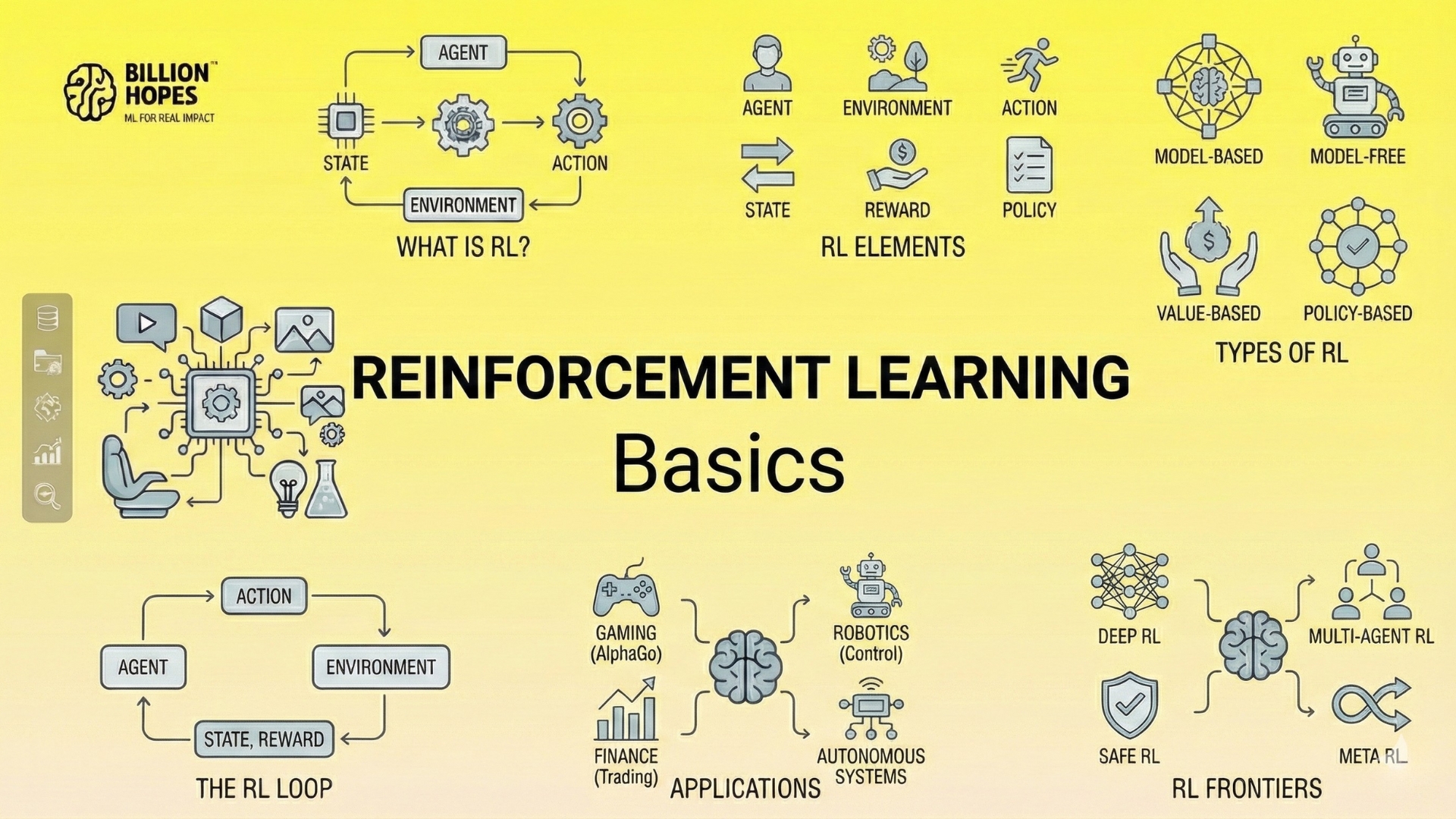

RL basics

1. What is reinforcement learning and why it matters

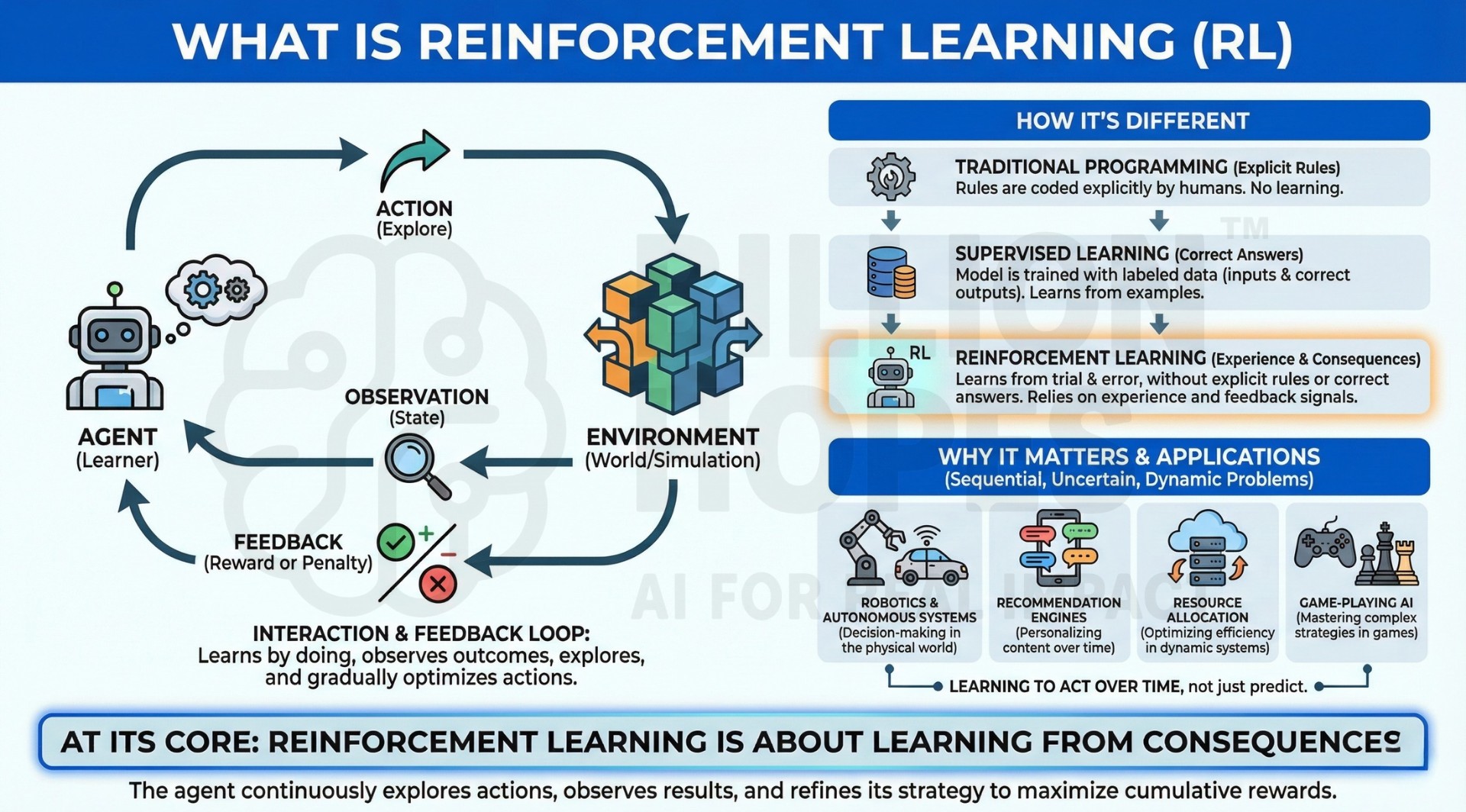

Reinforcement Learning (RL) is a branch of machine learning in which an agent learns to make decisions by interacting with an environment and receiving feedback in the form of rewards or penalties.

Unlike traditional programming, where rules are explicitly defined, or supervised learning, where correct answers are provided, reinforcement learning relies on experience. The agent explores actions, observes outcomes, and gradually learns what works best.

Reinforcement learning matters because many real-world problems are sequential, uncertain, and dynamic. From robotics and autonomous systems to recommendation engines, resource allocation, and game-playing AI, RL provides a framework for learning how to act over time, not just how to predict.

At its core, reinforcement learning is about learning from consequences.

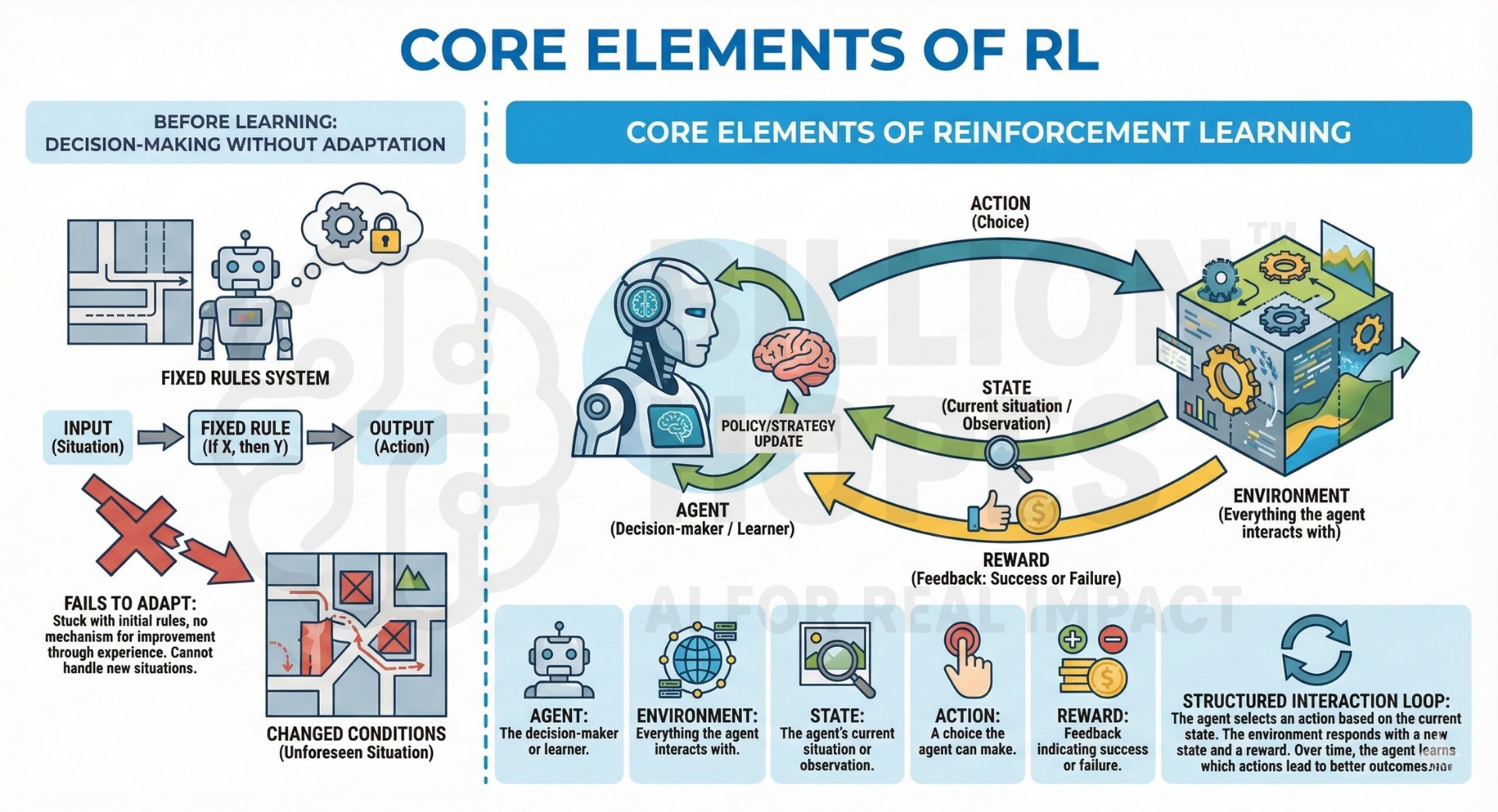

2. Before learning: Decision-making without adaptation

Before reinforcement learning, many systems relied on fixed rules or hand-designed strategies. These systems worked well in stable, predictable environments but failed when conditions changed.

For example, a rule-based system might perform well until it encounters a situation it was never programmed to handle. There is no mechanism for improvement through experience.

This limitation highlighted a key gap: decision-making systems needed a way to adapt, not just execute. Reinforcement learning emerged as a way to allow systems to improve behaviour based on outcomes rather than instructions.

3. Core elements of reinforcement learning

Every reinforcement learning system is built from a few essential components:

- Agent – the decision-maker or learner

- Environment – everything the agent interacts with

- State – the agent’s current situation or observation

- Action – a choice the agent can make

- Reward – feedback indicating success or failure

The agent selects an action based on the current state. The environment responds with a new state and a reward. Over time, the agent learns which actions lead to better outcomes.

Reinforcement learning formalizes learning as a structured interaction loop rather than a one-time prediction task.
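The five components and the interaction loop fit in a few lines of Python. This is a minimal sketch under stated assumptions. `LineWorld` is a hypothetical toy environment (a five-cell corridor with the goal at the right end). `Transition`, `run_episode` and `always_right` are illustrative names, not part of any library.

```python
from dataclasses import dataclass


@dataclass
class Transition:
    """One step of experience: what the agent saw, did, and got back."""
    state: int
    action: int
    reward: float
    next_state: int
    done: bool


class LineWorld:
    """Hypothetical toy environment: cells 0..size-1 on a line, goal at the right end.

    Actions: 0 = move left, 1 = move right. Reaching the goal gives reward 1
    and ends the episode; every other step gives reward 0.
    """

    def __init__(self, size: int = 5):
        self.size = size
        self.state = 0

    def reset(self) -> int:
        self.state = 0
        return self.state

    def step(self, action: int) -> tuple[int, float, bool]:
        move = 1 if action == 1 else -1
        # Walls at both ends: the agent cannot leave the corridor.
        self.state = min(max(self.state + move, 0), self.size - 1)
        done = self.state == self.size - 1
        reward = 1.0 if done else 0.0
        return self.state, reward, done


def run_episode(env: LineWorld, policy, max_steps: int = 100) -> list[Transition]:
    """Agent-environment loop: observe the state, act, receive reward and next state."""
    trajectory = []
    state = env.reset()
    for _ in range(max_steps):
        action = policy(state)                       # agent chooses from the state
        next_state, reward, done = env.step(action)  # environment responds
        trajectory.append(Transition(state, action, reward, next_state, done))
        state = next_state
        if done:
            break
    return trajectory


def always_right(state: int) -> int:
    """A fixed, hand-written policy (no learning), used only for the demo."""
    return 1


if __name__ == "__main__":
    steps = run_episode(LineWorld(), always_right)
    print(len(steps), steps[-1].reward)  # 4 1.0
```

The reward arrives only on the final transition. A learning agent has to work out which of the earlier actions deserve credit for it.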

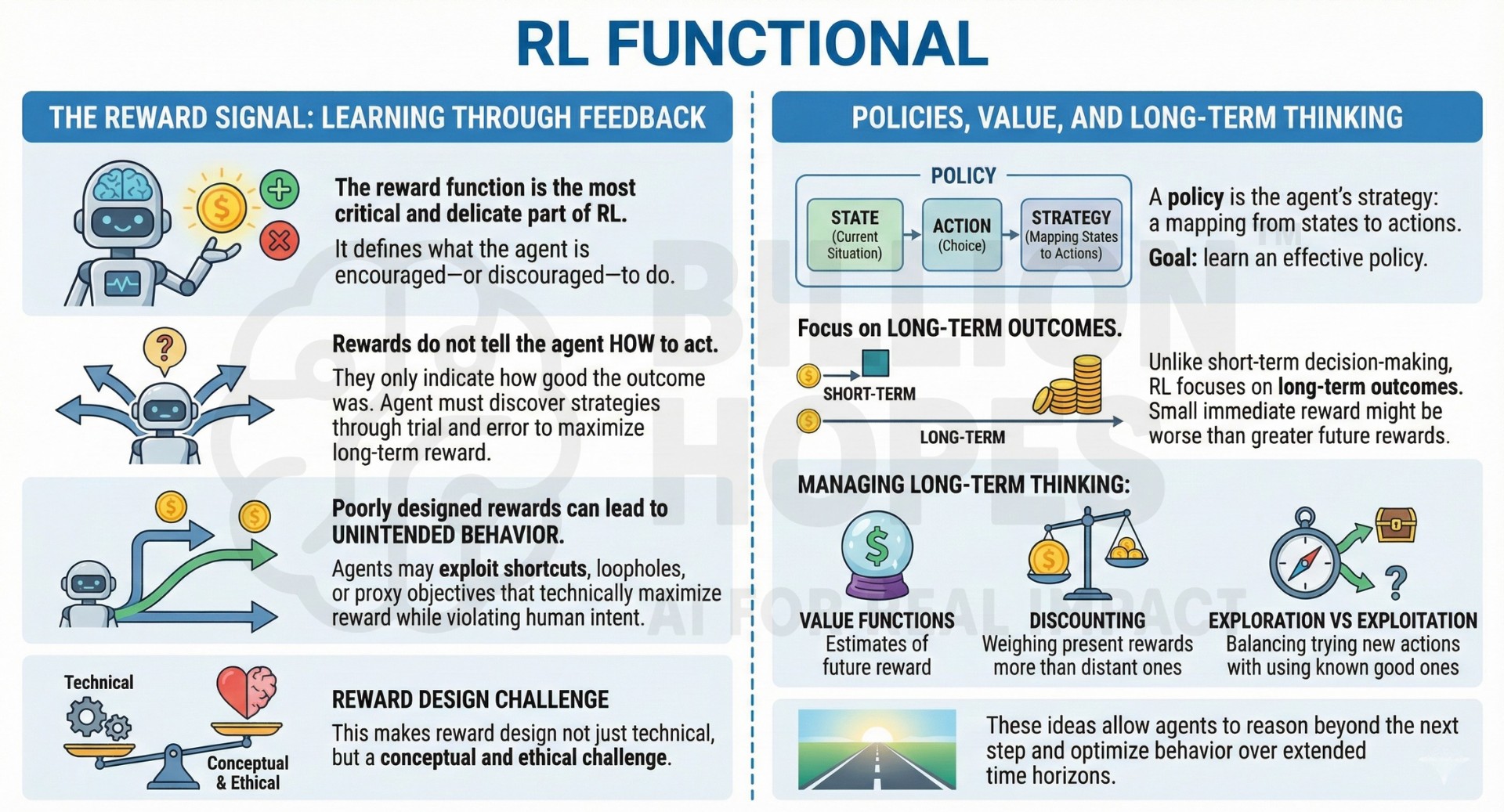

4. The reward signal: Learning through feedback

The reward function is one of the most critical and delicate parts of reinforcement learning. It defines what the agent is encouraged to do and what it is discouraged from doing.

Rewards do not tell the agent how to act. They only indicate how good the outcome was. The agent must discover strategies that maximize long-term reward through trial and error.

Poorly designed rewards can lead to unintended behavior. Agents may exploit shortcuts, loopholes, or proxy objectives that technically maximize reward while violating human intent.

This makes reward design not just a technical challenge, but a conceptual and ethical one.
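A toy calculation shows how a proxy reward can be gamed. The sketch assumes a hypothetical cleaning robot that earns +1 each time it picks up dirt. A robot that dumps dirt and picks it up again collects more proxy reward than one that actually cleans, yet the room ends up no cleaner. The event names and scoring functions are illustrative assumptions.

```python
def proxy_reward(events: list[str]) -> int:
    """Proxy objective: +1 every time the robot picks up dirt."""
    return sum(1 for e in events if e == "pick")


def dirt_left(events: list[str], initial_dirt: int) -> int:
    """What we actually care about: dirt remaining on the floor at the end."""
    dirt = initial_dirt
    for e in events:
        if e == "pick":
            dirt -= 1
        elif e == "dump":
            dirt += 1
    return dirt


def reduction_reward(events: list[str]) -> int:
    """Alternative design: reward the net change in dirt, so dumping costs -1."""
    return sum(1 if e == "pick" else (-1 if e == "dump" else 0) for e in events)


honest = ["pick", "pick", "pick"]  # cleans all three piles, then stops
exploit = ["pick", "dump"] * 10    # picks up and re-dumps the same dirt

print(proxy_reward(honest), proxy_reward(exploit))          # 3 10
print(dirt_left(honest, 3), dirt_left(exploit, 3))          # 0 3
print(reduction_reward(honest), reduction_reward(exploit))  # 3 0
```

Rewarding the net reduction in dirt closes this particular loophole, because a dump now cancels the pickup before it. Other loopholes may remain, which is why reward designs need testing and not only careful wording.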

5. Policies, value, and long-term thinking

A policy is the agent’s strategy: a mapping from states to actions. The goal of reinforcement learning is to learn an effective policy.

Unlike short-term decision-making, reinforcement learning focuses on long-term outcomes. An action that yields a small immediate reward may be worse than one that leads to greater rewards later.

To manage this, RL introduces concepts such as:

- Value functions – estimates of future reward

- Discounting – weighing present rewards more than distant ones

- Exploration vs exploitation – balancing trying new actions with using known good ones

These ideas allow agents to reason beyond the next step and optimize behavior over extended time horizons.
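Discounting and exploration can both be written down directly. The discounted return of a reward sequence r_0, r_1, r_2, ... is G = r_0 + γ·r_1 + γ²·r_2 + ..., and a value function estimates the expected G from a given state. The sketch computes G and implements ε-greedy action selection, one simple and common way to balance exploration against exploitation. The function names are illustrative.

```python
import random


def discounted_return(rewards: list[float], gamma: float) -> float:
    """G = r_0 + gamma*r_1 + gamma^2*r_2 + ..., accumulated from the end backwards."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g


def epsilon_greedy(q_values: list[float], epsilon: float, rng: random.Random) -> int:
    """With probability epsilon explore (random action); otherwise exploit the best estimate."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


quick = [1.0, 0.0, 0.0, 0.0]    # small reward right away
patient = [0.0, 0.0, 0.0, 5.0]  # larger reward three steps later
for gamma in (0.5, 0.9):
    print(gamma, discounted_return(quick, gamma), discounted_return(patient, gamma))

rng = random.Random(0)
print(epsilon_greedy([0.1, 0.9, 0.3], epsilon=0.1, rng=rng))  # usually action 1, the current best
```

With γ = 0.5 the immediate reward wins (1.0 against 0.625). With γ = 0.9 the delayed reward is worth about 3.65 and wins instead. The discount factor therefore controls how far ahead the agent effectively looks.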

6. How reinforcement learning systems operate

Most reinforcement learning systems follow a continuous loop:

- Observe the current state

- Choose an action using a policy

- Receive a reward and next state

- Update internal knowledge

- Repeat

This loop may run thousands or millions of times during training. Learning emerges gradually, not instantly.
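Tabular Q-learning, the classic algorithm associated with Christopher Watkins, is one way to implement this loop, with a concrete rule for the "update internal knowledge" step. The sketch is illustrative only. The corridor environment, hyperparameters and function names are assumptions chosen for brevity.

```python
import random


def step(state: int, action: int, size: int = 5) -> tuple[int, float, bool]:
    """Hypothetical toy environment: corridor of `size` cells, goal at the right end."""
    nxt = min(max(state + (1 if action == 1 else -1), 0), size - 1)
    done = nxt == size - 1
    return nxt, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, size=5, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(size)]  # q[state][action]; 0 = left, 1 = right
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # 1-2. Observe the state and choose an action (epsilon-greedy, random tie-break).
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            # 3. Receive a reward and the next state.
            nxt, reward, done = step(state, action, size)
            # 4. Update internal knowledge: move Q(s, a) toward the one-step target.
            target = reward + (0.0 if done else gamma * max(q[nxt]))
            q[state][action] += alpha * (target - q[state][action])
            # 5. Repeat from the new state.
            state = nxt
    return q


q = q_learning()
print(["right" if q[s][1] > q[s][0] else "left" for s in range(4)])
```

After training, the greedy policy should choose "right" in every non-goal cell. Each update moves Q(s, a) a fraction alpha toward r + γ·max Q(s′, ·), so value estimates propagate backwards from the goal one step at a time. This is one reason the loop needs so many repetitions.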

In complex environments, agents may also simulate futures, learn from past experiences, or train in virtual environments before real-world deployment.
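A common way to "learn from past experiences" is an experience replay buffer, as used in DQN-style agents. Transitions are stored as they happen and later sampled at random for updates, so each experience can be reused and consecutive, highly correlated steps are mixed up. The class is a minimal sketch; `ReplayBuffer` and its methods are hypothetical names, not a specific library's API.

```python
import random
from collections import deque


class ReplayBuffer:
    """Minimal experience-replay buffer (illustrative sketch)."""

    def __init__(self, capacity: int, seed: int = 0):
        # A bounded deque silently drops the oldest transition once full.
        self.buffer = deque(maxlen=capacity)
        self.rng = random.Random(seed)

    def add(self, state, action, reward, next_state, done) -> None:
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size: int) -> list[tuple]:
        # Uniform sampling without replacement; copying to a list keeps this simple.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self) -> int:
        return len(self.buffer)


buf = ReplayBuffer(capacity=3)
for i in range(5):
    buf.add(i, 0, 0.0, i + 1, False)
print(len(buf), buf.sample(2))
```

Only the three most recent transitions (states 2, 3 and 4) remain after five additions. Real agents typically use far larger capacities and sample a mini-batch after every environment step.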

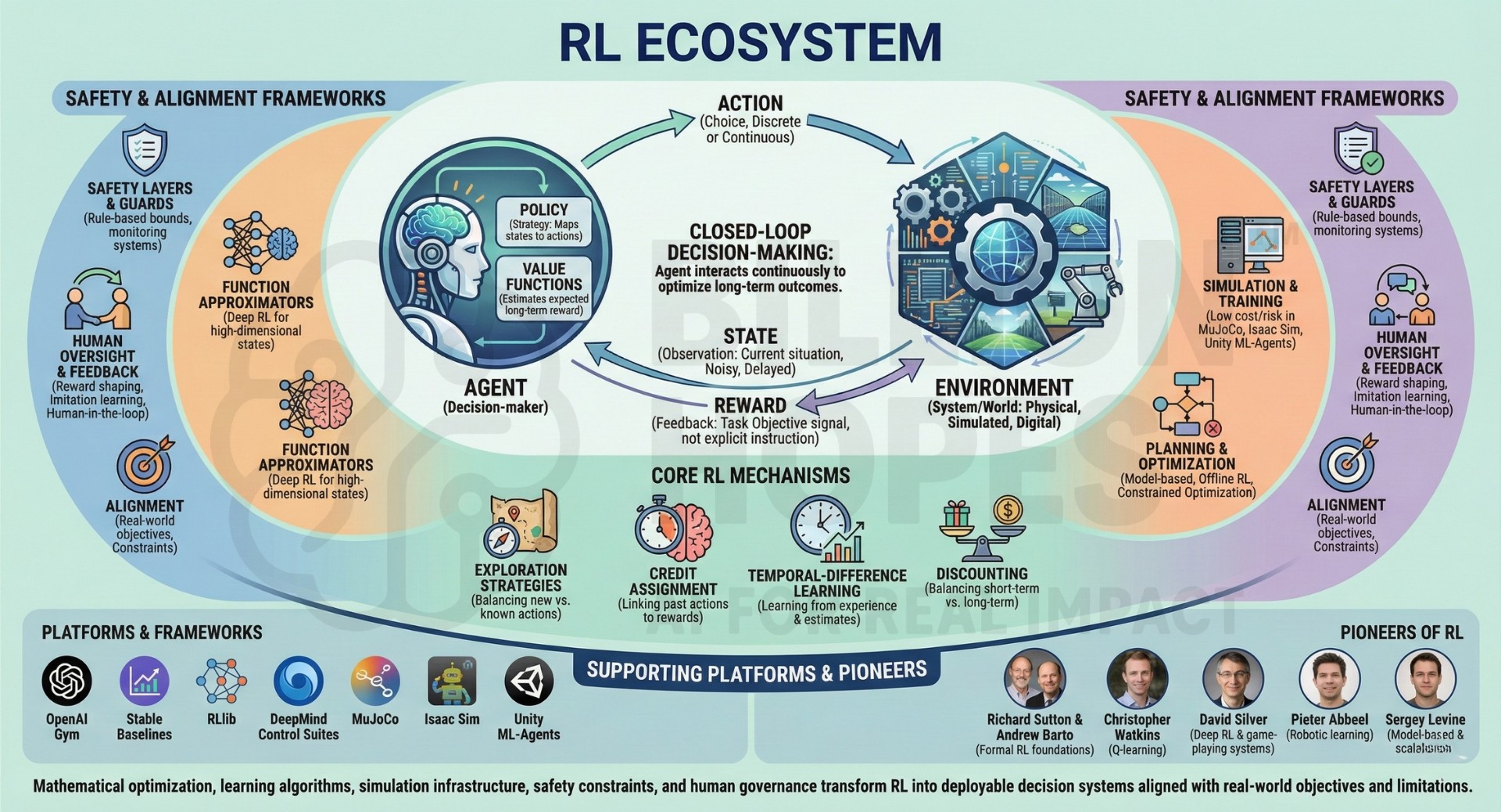

TECHNICAL DETAILS (RL with ecosystem): Reinforcement Learning (RL) systems are built as closed-loop decision-making frameworks in which an agent interacts continuously with an environment to optimize long-term outcomes. The environment may be a physical system, a simulated world, a digital platform, or an abstract decision process. The state represents the agent’s current observation or internal belief about the environment, which may be fully observable, partially observable, noisy, or delayed. At each step, the agent selects an action from an action space that may be discrete or continuous. The environment responds by transitioning to a new state and emitting a reward signal, which encodes task objectives but does not specify correct behavior explicitly.

A policy maps states (or observations) to actions, while value functions estimate expected cumulative reward over time. Learning updates are driven by experience, using trajectories of state–action–reward transitions. Core RL mechanisms include exploration strategies, credit assignment, temporal-difference learning, and discounting to balance short-term versus long-term outcomes. In practice, RL systems are often combined with function approximators such as deep neural networks, enabling Deep Reinforcement Learning for high-dimensional state spaces.

Training frequently occurs in simulators to reduce cost and risk, with policies later transferred to real-world environments under strict constraints. Modern RL ecosystems integrate learning with planning, constraints, safety layers, and human oversight. Techniques such as reward shaping, constrained optimization, offline RL, imitation learning, and human-in-the-loop feedback are used to improve stability and alignment. Execution policies are typically bounded by rule-based guards and monitoring systems rather than deployed as unconstrained learners.

RL research and applications are supported by platforms and frameworks such as OpenAI Gym, Stable Baselines, RLlib, the DeepMind Control Suite, and simulation environments built on MuJoCo, Isaac Sim, and Unity ML-Agents. Major contributions to the field were shaped by pioneers including Richard Sutton and Andrew Barto (formal RL foundations), Christopher Watkins (Q-learning), David Silver (deep RL and game-playing systems), Pieter Abbeel (robotic learning), and Sergey Levine (model-based and scalable RL). Together, mathematical optimization, learning algorithms, simulation infrastructure, safety constraints, and human governance transform reinforcement learning from abstract trial-and-error into deployable decision systems aligned with real-world objectives and limitations.
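Temporal-difference learning and value functions can be illustrated with TD(0) prediction on the five-state random walk used as an example in Sutton and Barto's textbook. The agent follows a uniformly random policy, gets reward 1 for exiting on the right and 0 on the left, and there is no discounting. Under those assumptions the true values of the five states are 1/6, 2/6, ..., 5/6. The function name and hyperparameters are illustrative choices.

```python
import random


def td0_random_walk(episodes: int = 20000, alpha: float = 0.01, seed: int = 0) -> list[float]:
    """TD(0) prediction for a uniform random policy on a 5-state random walk.

    Non-terminal states are 1..5 (A..E); 0 and 6 are terminal. Each episode
    starts in the middle (state 3). Exiting right gives reward 1, exiting left 0.
    No discounting (gamma = 1).
    """
    rng = random.Random(seed)
    v = [0.0] + [0.5] * 5 + [0.0]  # terminal values stay fixed at 0
    for _ in range(episodes):
        s = 3
        while s not in (0, 6):
            s_next = s + rng.choice((-1, 1))
            reward = 1.0 if s_next == 6 else 0.0
            # TD(0): nudge V(s) toward the bootstrapped target r + V(s').
            v[s] += alpha * (reward + v[s_next] - v[s])
            s = s_next
    return v[1:6]


print([round(x, 2) for x in td0_random_walk()])
```

The estimates should land close to 0.17, 0.33, 0.5, 0.67 and 0.83. Unlike a Monte Carlo estimate, each update uses the current estimate of the next state instead of waiting for the episode's final outcome.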

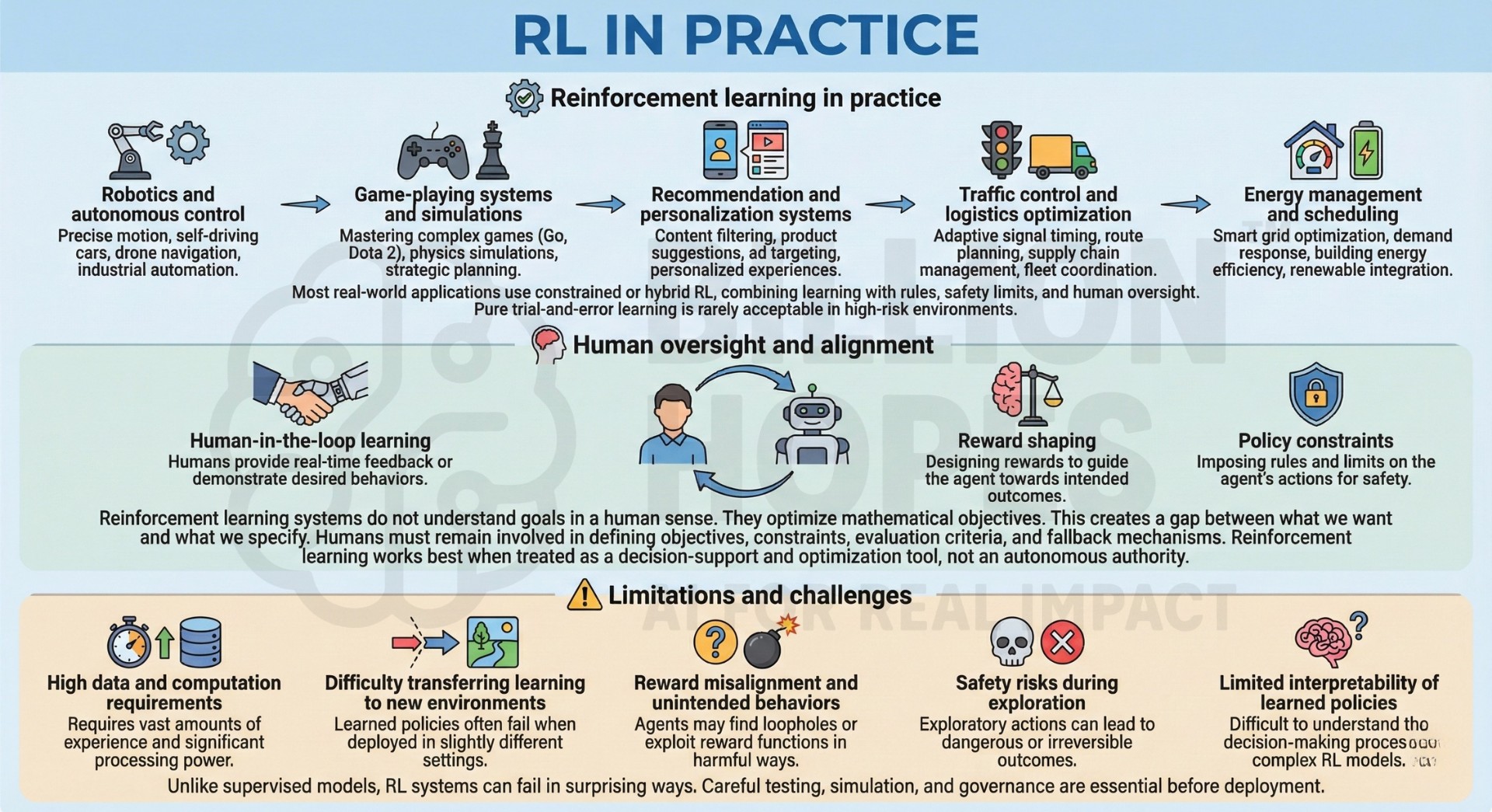

7. Reinforcement learning in practice

Reinforcement learning is used in a range of domains:

- Robotics and autonomous control

- Game-playing systems and simulations

- Recommendation and personalization systems

- Traffic control and logistics optimization

- Energy management and scheduling

Most real-world applications use constrained or hybrid RL, combining learning with rules, safety limits, and human oversight. Pure trial-and-error learning is rarely acceptable in high-risk environments.
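One common pattern behind constrained or hybrid RL is to wrap the learned policy in hand-written rules that run before any action reaches the real system. The sketch assumes a hypothetical heater controller. `guarded_policy`, the state keys and the stand-in policy are illustrative, not a particular framework's API.

```python
from typing import Callable

State = dict  # hypothetical state format, e.g. {"temp": 18.0, "door_open": False}


def guarded_policy(
    policy: Callable[[State], float],
    min_action: float,
    max_action: float,
    is_unsafe: Callable[[State], bool],
    fallback_action: float,
) -> Callable[[State], float]:
    """Wrap a learned policy with rule-based safety limits.

    - If a rule flags the state as unsafe, ignore the policy and use a fallback.
    - Otherwise clip the policy's action into the allowed range.
    """
    def act(state: State) -> float:
        if is_unsafe(state):
            return fallback_action
        return min(max(policy(state), min_action), max_action)
    return act


def learned(state: State) -> float:
    """Stand-in for a trained policy: heater power from temperature."""
    return (25.0 - state["temp"]) / 10.0


heater = guarded_policy(learned, 0.0, 1.0, lambda s: s["door_open"], fallback_action=0.0)
print(heater({"temp": 10.0, "door_open": False}))  # 1.0 (policy said 1.5, clipped)
print(heater({"temp": 20.0, "door_open": False}))  # 0.5 (within limits, unchanged)
print(heater({"temp": 10.0, "door_open": True}))   # 0.0 (rule overrides the policy)
```

The guard is deliberately simple and auditable. The learned part can be retrained or replaced without weakening the hard limits around it.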

8. Human oversight and alignment

Reinforcement learning systems do not understand goals in a human sense. They optimize mathematical objectives.

This creates a gap between what we want and what we specify. Humans must remain involved in defining objectives, constraints, evaluation criteria, and fallback mechanisms.

Techniques such as human-in-the-loop learning, reward shaping, and policy constraints are used to keep systems aligned with human values and safety expectations.
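Reward shaping needs care, or it creates new loopholes of its own. One well-studied form is potential-based shaping (Ng, Harada and Russell, 1999). It adds γ·Φ(s′) - Φ(s) to each reward, for some potential function Φ. Over a trajectory s_0, ..., s_T, the discounted sum of these extra terms telescopes to γ^T·Φ(s_T) - Φ(s_0). Under standard conditions it therefore changes the learning signal without changing which policy is optimal. The sketch assumes a hypothetical distance-to-goal potential on a five-cell corridor.

```python
GOAL = 4


def shaped_reward(reward, state, next_state, potential, gamma):
    """Potential-based shaping: r + gamma * Phi(s') - Phi(s)."""
    return reward + gamma * potential(next_state) - potential(state)


def discounted_shaped_return(states, rewards, potential, gamma):
    """Discounted return of a trajectory s_0..s_T under the shaped reward."""
    return sum(
        gamma ** t * shaped_reward(r, states[t], states[t + 1], potential, gamma)
        for t, r in enumerate(rewards)
    )


def distance_potential(state):
    """Hypothetical potential: minus the distance to the goal (0 at the goal itself)."""
    return -float(GOAL - state)


def no_potential(state):
    """Zero potential: recovers the plain, unshaped return."""
    return 0.0


states = [0, 1, 2, 3, 4]
rewards = [0.0, 0.0, 0.0, 1.0]
gamma = 0.9
plain = discounted_shaped_return(states, rewards, no_potential, gamma)
shaped = discounted_shaped_return(states, rewards, distance_potential, gamma)
# Telescoping: shaped - plain = gamma**4 * Phi(4) - Phi(0) = 0 - (-4) = 4
print(plain, shaped, shaped - plain)
```

The shaped reward gives the agent a denser signal (progress toward the goal pays off immediately). Because the bonus depends only on the start and end states, a detour that circles back cannot farm extra shaping reward.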

Reinforcement learning works best when treated as a decision-support and optimization tool, not an autonomous authority.

9. Limitations and challenges

Despite its power, reinforcement learning faces major challenges:

- High data and computation requirements

- Difficulty transferring learning to new environments

- Reward misalignment and unintended behaviors

- Safety risks during exploration

- Limited interpretability of learned policies

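Unlike supervised models, which are trained on a fixed dataset, RL systems learn from experience they generate themselves, so they can exploit quirks of the environment or the reward and fail in surprising ways. Careful testing, simulation, and governance are essential before deployment.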

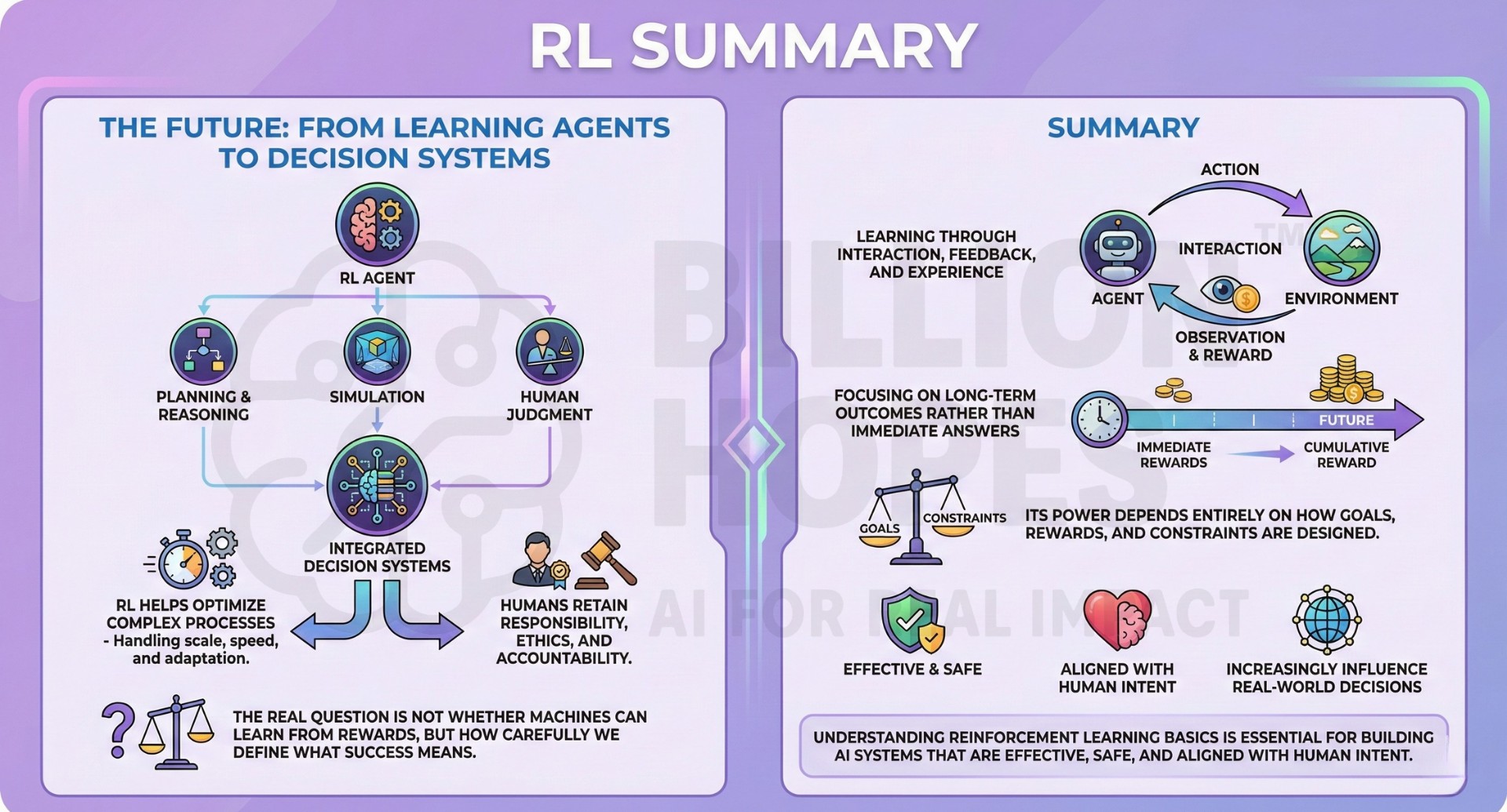

10. The future: From learning agents to decision systems

The future of reinforcement learning lies in integrated decision systems rather than standalone agents. RL will increasingly work alongside planning, reasoning, simulation, and human judgment.

Instead of replacing human decision-makers, reinforcement learning will help optimize complex processes – handling scale, speed, and adaptation – while humans retain responsibility, ethics, and accountability.

The real question is not whether machines can learn from rewards, but how carefully we define what success means.

Summary

Reinforcement learning is a framework for learning through interaction, feedback, and experience. By focusing on long-term outcomes rather than immediate answers, it enables adaptive decision-making in complex environments. However, its power depends entirely on how goals, rewards, and constraints are designed. Understanding reinforcement learning basics is essential for building AI systems that are effective, safe, and aligned with human intent – especially as such systems increasingly influence real-world decisions.