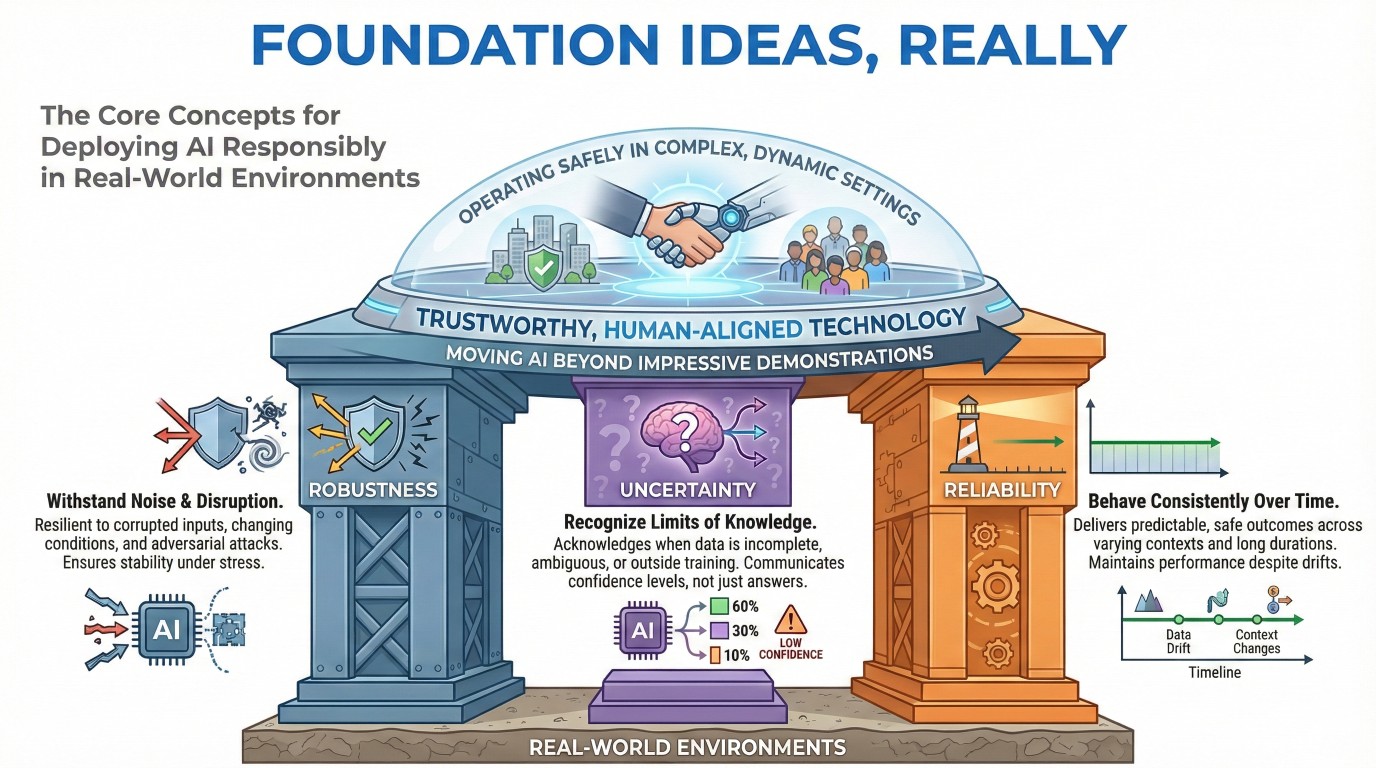

Robustness, Uncertainty, and Reliability Basics

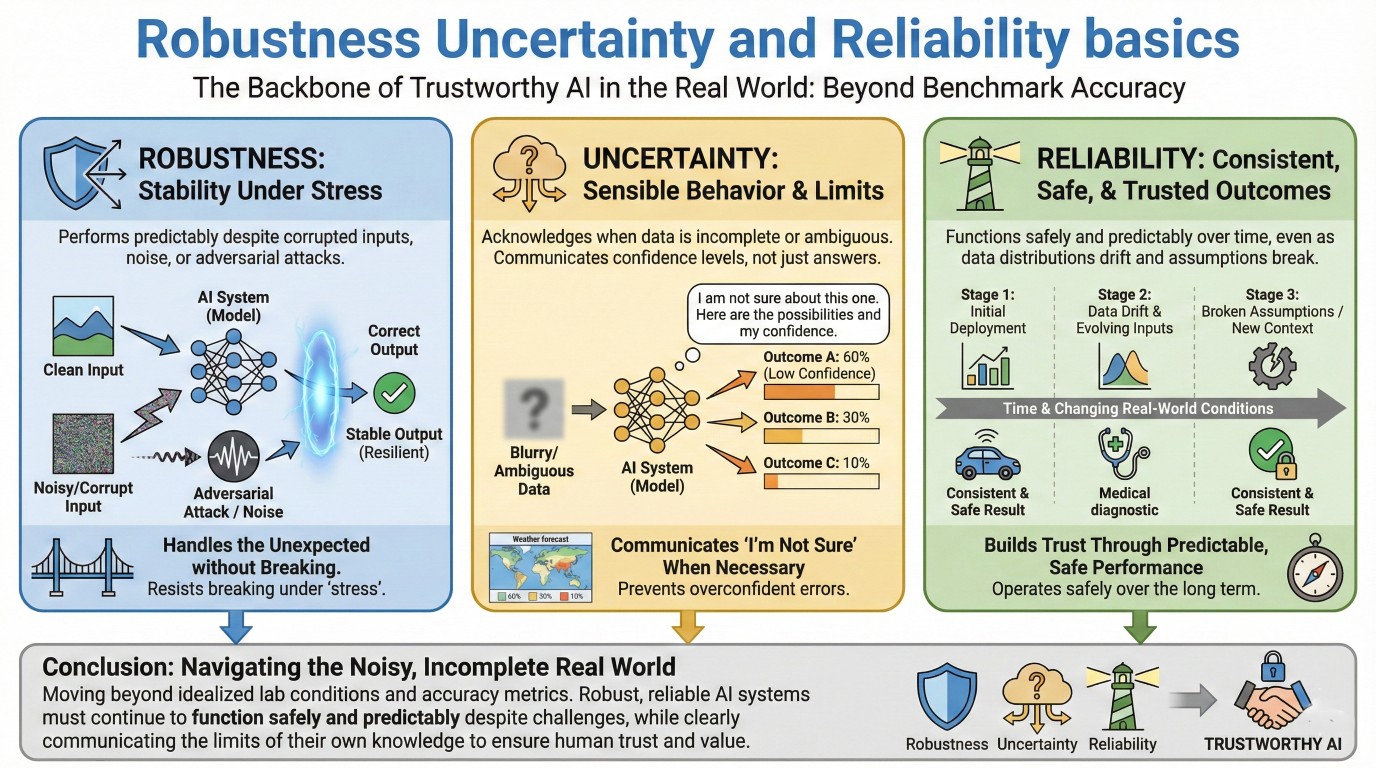

Robustness, uncertainty, and reliability form the backbone of trustworthy artificial intelligence. As AI systems move from controlled research settings into real-world environments, their value is no longer measured only by accuracy on benchmark datasets. Instead, the critical question becomes whether these systems behave sensibly under uncertainty, remain stable under stress, and provide outputs that humans can reasonably trust.

Unlike idealized laboratory conditions, the real world is noisy, incomplete, and constantly changing. Inputs may be corrupted, assumptions may break, and data distributions may drift over time. Robust, reliable AI systems must continue to function safely and predictably despite these challenges, while clearly communicating the limits of their own knowledge.

1. Why robustness and reliability matter

AI systems increasingly influence high-stakes decisions in healthcare, finance, transportation, governance, and education. In such contexts, failure is not just a technical inconvenience – it can lead to financial loss, safety risks, or erosion of public trust.

Robustness ensures that an AI system does not fail catastrophically when faced with slightly altered, noisy, or adversarial inputs. Reliability ensures that the system behaves consistently over time and across environments. Together, they determine whether AI can be responsibly deployed beyond narrow, carefully curated use cases.

Uncertainty awareness adds a crucial layer: a system that knows when it might be wrong is often safer than one that always appears confident.

2. What robustness means in AI systems

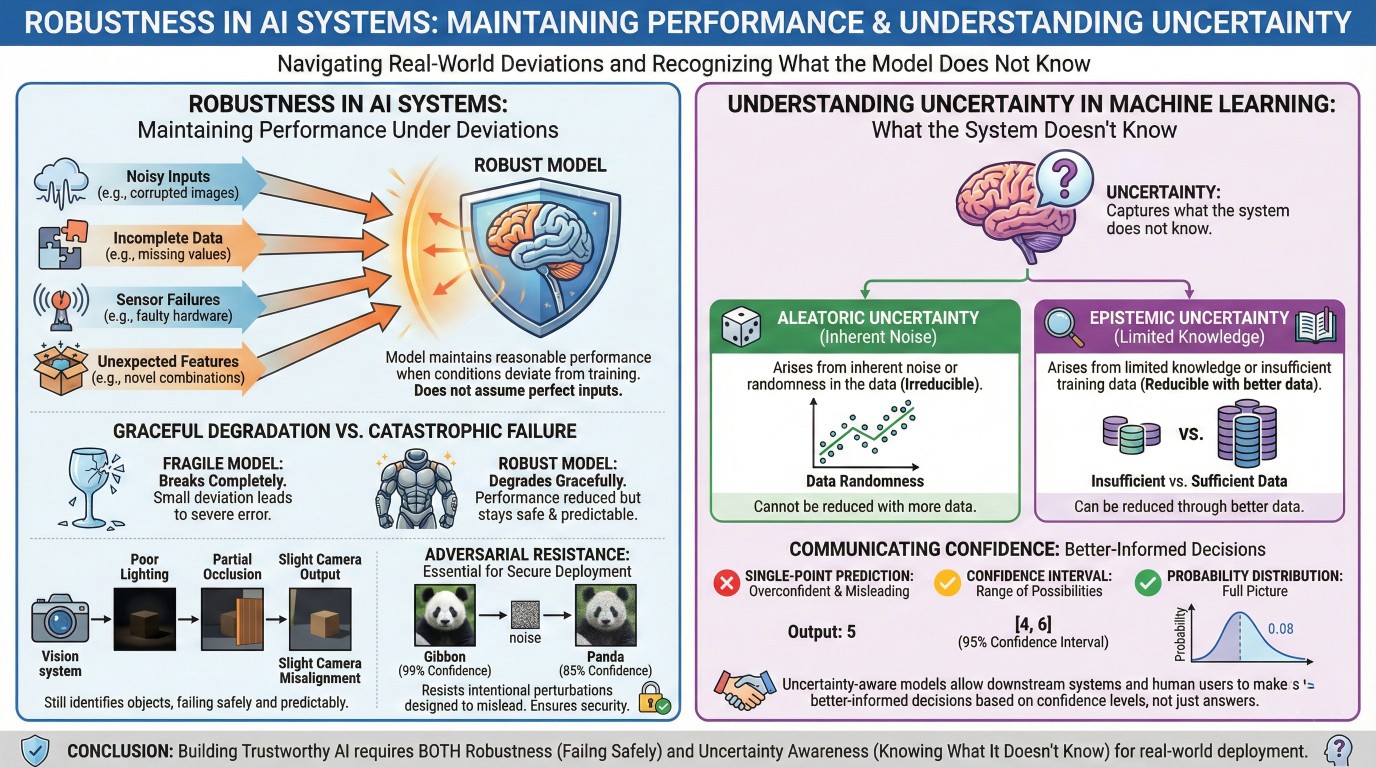

In AI, robustness refers to the ability of a model to maintain reasonable performance when conditions deviate from those seen during training. This may include noisy inputs, incomplete data, sensor failures, or unexpected combinations of features.

A robust model does not assume perfect inputs. Instead, it degrades gracefully. For example, a vision system should still identify objects under poor lighting, partial occlusion, or slight camera misalignment. Robustness is not about never failing—it is about failing safely and predictably.
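Graceful degradation can be made concrete by measuring accuracy as input noise increases. The following is a minimal sketch, not a full benchmark: `classify` is a hypothetical stand-in for a trained model, and the one-dimensional data and noise levels are assumptions chosen purely for illustration.

```python
import random

def classify(x):
    """Toy stand-in for a trained model: predicts class 1 when x is positive."""
    return 1 if x > 0 else 0

def accuracy_under_noise(inputs, labels, noise_std, seed=0):
    """Accuracy after adding zero-mean Gaussian noise to every input."""
    rng = random.Random(seed)  # fixed seed so the sweep is repeatable
    hits = 0
    for x, y in zip(inputs, labels):
        noisy_x = x + rng.gauss(0.0, noise_std)
        hits += classify(noisy_x) == y
    return hits / len(inputs)

# Illustrative data: two well-separated classes centred at -1 and +1.
inputs = [-1.0] * 500 + [1.0] * 500
labels = [0] * 500 + [1] * 500

# Accuracy at each noise level; a robust model shows a gradual decline, not a cliff.
curve = {std: accuracy_under_noise(inputs, labels, std) for std in (0.0, 0.5, 1.0, 2.0)}
for std, acc in curve.items():
    print(f"noise_std={std:.1f}  accuracy={acc:.3f}")
```

Plotting such a curve for a real model shows whether performance falls off gradually or collapses once a noise threshold is crossed.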

Robustness also includes resistance to adversarial manipulation, where small, intentional perturbations are designed to mislead a model. Addressing such vulnerabilities is essential for secure AI deployment.

3. Understanding uncertainty in machine learning

Uncertainty captures what an AI system does not know. There are two broad forms of uncertainty:

- Aleatoric uncertainty, arising from inherent noise or randomness in the data

- Epistemic uncertainty, arising from limited knowledge or insufficient training data

Distinguishing between these types helps determine whether uncertainty can be reduced by collecting more or better data (epistemic) or must be accepted as irreducible (aleatoric).

Uncertainty-aware models can express confidence intervals, probability distributions, or calibrated confidence scores rather than single-point predictions. This allows downstream systems—and human users—to make better-informed decisions.
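One common way to separate the two forms of uncertainty, assuming an ensemble of classifiers is available, is an entropy-based decomposition: the entropy of the averaged prediction is treated as total uncertainty, the average entropy of individual members as the aleatoric part, and the difference (the disagreement between members) as the epistemic part. The sketch below uses hand-written member probabilities as illustrative assumptions; the function names are hypothetical.

```python
import math

def entropy(probs):
    """Shannon entropy (in nats) of a discrete probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def decompose_uncertainty(member_probs):
    """Split predictive uncertainty for one input into (total, aleatoric, epistemic).

    member_probs: one list of class probabilities per ensemble member.
    """
    n_members = len(member_probs)
    n_classes = len(member_probs[0])
    mean_probs = [sum(m[c] for m in member_probs) / n_members for c in range(n_classes)]
    total = entropy(mean_probs)                                    # entropy of the averaged prediction
    aleatoric = sum(entropy(m) for m in member_probs) / n_members  # average per-member entropy
    epistemic = total - aleatoric                                  # disagreement between members
    return total, aleatoric, epistemic

# Every member agrees the input is ambiguous: uncertainty is mostly aleatoric.
agree = [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]]
# Members are individually confident but contradict each other: mostly epistemic.
disagree = [[0.99, 0.01], [0.01, 0.99], [0.99, 0.01]]

total_a, aleatoric_a, epistemic_a = decompose_uncertainty(agree)
total_d, aleatoric_d, epistemic_d = decompose_uncertainty(disagree)
```

High epistemic uncertainty suggests that more training data in that region could help, whereas high aleatoric uncertainty suggests the input itself is ambiguous.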

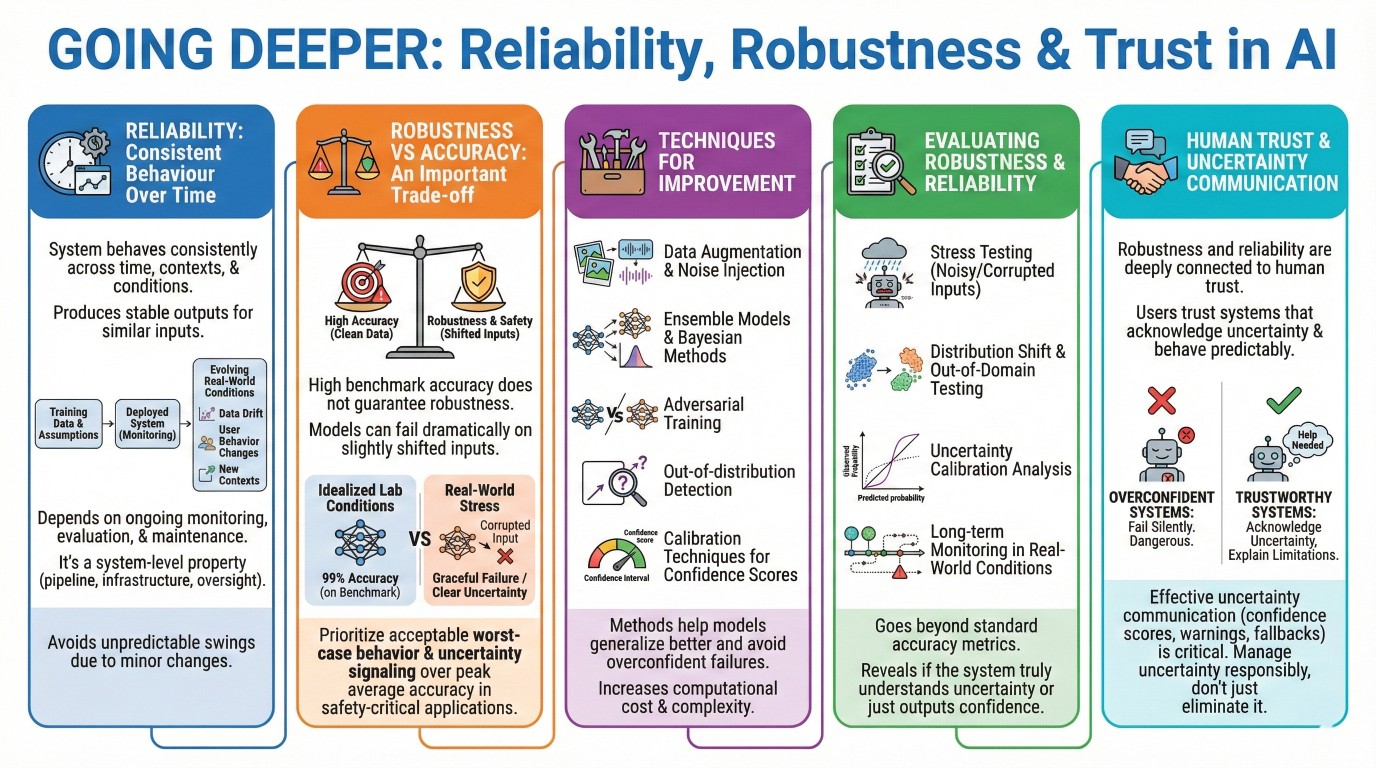

4. Reliability as consistent behaviour over time

Reliability focuses on whether an AI system behaves consistently across time, contexts, and operating conditions. A reliable system produces stable outputs for similar inputs and does not exhibit unpredictable swings in behaviour due to minor environmental changes.

In deployed systems, reliability also involves monitoring performance drift. Data distributions evolve, user behaviour changes, and real-world conditions differ from training assumptions. Reliability therefore depends not only on model design, but also on ongoing evaluation, monitoring, and maintenance.
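Drift monitoring is often implemented by comparing the distribution of a feature in live traffic against a reference sample from training time. The sketch below uses the Population Stability Index (PSI) as one possible drift score. The binning scheme, the `eps` floor for empty bins, and the synthetic data are assumptions for illustration; the often-quoted rule of thumb that a PSI above roughly 0.25 signals significant drift should be validated per application.

```python
import math

def population_stability_index(reference, live, n_bins=10, eps=1e-6):
    """PSI between a reference sample and a live sample of one numeric feature."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins or 1.0  # fall back to 1.0 if the reference is constant

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), n_bins - 1)  # clamp out-of-range values into edge bins
            counts[idx] += 1
        # Floor each fraction at eps so the logarithm below is always defined.
        return [max(c / len(values), eps) for c in counts]

    ref_frac = bin_fractions(reference)
    live_frac = bin_fractions(live)
    return sum((l - r) * math.log(l / r) for r, l in zip(ref_frac, live_frac))

# Synthetic example: the live feature has shifted upward by 0.5.
reference = [i / 100 for i in range(100)]
shifted = [x + 0.5 for x in reference]

psi_same = population_stability_index(reference, reference)
psi_shifted = population_stability_index(reference, shifted)
```

In a monitoring job, a score like this would typically be computed per feature on a schedule, with alerts raised when it exceeds an agreed threshold.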

Reliability is a system-level property, shaped by data pipelines, deployment infrastructure, and human oversight – not just the model itself.

5. Robustness vs accuracy: an important trade-off

High benchmark accuracy does not guarantee robustness or reliability. Models may perform exceptionally well on clean test data while failing dramatically when inputs are slightly shifted.

This creates a tension between optimizing for average-case performance and ensuring acceptable worst-case behaviour. In safety-critical applications, it is often preferable to sacrifice a small amount of peak accuracy in exchange for improved robustness and clearer uncertainty signaling.
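The tension can be illustrated by choosing between two models using different criteria. The accuracy figures below are hypothetical numbers made up for this illustration, not measured results, and the model and condition names are placeholders.

```python
# Hypothetical accuracies per evaluation condition (illustrative values only).
results = {
    "model_a": {"clean": 0.97, "noisy": 0.71, "shifted": 0.64},
    "model_b": {"clean": 0.94, "noisy": 0.88, "shifted": 0.85},
}

def worst_case(scores):
    """Lowest accuracy across all evaluation conditions."""
    return min(scores.values())

# Selecting on the clean benchmark alone favours peak accuracy.
best_on_clean = max(results, key=lambda name: results[name]["clean"])
# Selecting on worst-case accuracy favours robustness.
best_worst_case = max(results, key=lambda name: worst_case(results[name]))

print(best_on_clean, best_worst_case)
```

Under these assumed numbers the two criteria pick different models, which is exactly the situation in which a safety-critical deployment might accept the lower clean-data score.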

Understanding this trade-off is essential when translating research models into production systems.

6. Techniques for improving robustness and uncertainty handling

Several approaches are commonly used to strengthen robustness and uncertainty awareness:

- Data augmentation and noise injection

- Ensemble models and Bayesian methods

- Adversarial training

- Out-of-distribution detection

- Calibration techniques for confidence scores

These methods help models generalize better and avoid overconfident failures. However, they often increase computational cost and system complexity, reinforcing the need for thoughtful design choices.
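As an example of a calibration technique, temperature scaling divides a model's logits by a single scalar before the softmax and chooses that scalar to minimize negative log-likelihood on held-out data. The sketch below is a simplified version that uses a grid search rather than a gradient-based optimizer; the validation logits and labels are invented to represent an overconfident model, and all function names are hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    """Softmax over logits divided by a temperature (T > 1 softens the output)."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def negative_log_likelihood(logit_rows, labels, temperature):
    """Mean negative log-probability assigned to the true labels."""
    nll = 0.0
    for logits, label in zip(logit_rows, labels):
        nll -= math.log(softmax(logits, temperature)[label])
    return nll / len(labels)

def fit_temperature(logit_rows, labels, candidates=None):
    """Pick the candidate temperature with the lowest validation NLL (grid search)."""
    if candidates is None:
        candidates = [0.5 + 0.1 * i for i in range(46)]  # 0.5, 0.6, ..., 5.0
    return min(candidates, key=lambda t: negative_log_likelihood(logit_rows, labels, t))

# Illustrative validation set: the model is about 98% confident but only 75% accurate.
val_logits = [[4.0, 0.0]] * 4
val_labels = [0, 0, 0, 1]

temperature = fit_temperature(val_logits, val_labels)
before = softmax(val_logits[0])[0]
after = softmax(val_logits[0], temperature)[0]
```

Because dividing by a positive scalar does not change which logit is largest, this form of calibration adjusts confidence without altering the predicted class.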

7. Evaluating robustness and reliability

Evaluating robust and reliable AI systems goes beyond standard accuracy metrics. Effective evaluation includes:

- Stress testing under noisy or corrupted inputs

- Distribution shift and out-of-domain testing

- Uncertainty calibration analysis

- Long-term monitoring in real-world conditions

Such evaluations reveal whether a system truly understands uncertainty or merely produces confident-looking outputs without justification.
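Uncertainty calibration analysis is frequently summarized with the Expected Calibration Error (ECE), which bins predictions by confidence and compares average confidence with observed accuracy in each bin. The following is a minimal sketch with equal-width bins; the confidence values and outcomes are made up for illustration.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted average gap between confidence and accuracy across confidence bins."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # confidence 1.0 goes in the last bin
        bins[idx].append((conf, ok))

    n_total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(conf for conf, _ in members) / len(members)
        accuracy = sum(1 for _, ok in members if ok) / len(members)
        ece += (len(members) / n_total) * abs(avg_conf - accuracy)
    return ece

# Overconfident model: claims 95% confidence but is right 6 times out of 10.
overconfident = expected_calibration_error([0.95] * 10, [True] * 6 + [False] * 4)
# Well-calibrated model: claims 80% confidence and is right 8 times out of 10.
calibrated = expected_calibration_error([0.8] * 10, [True] * 8 + [False] * 2)
```

A lower ECE indicates that stated confidence tracks actual accuracy more closely; it says nothing on its own about how accurate the model is.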

8. Human trust and uncertainty communication

Robustness and reliability are deeply connected to human trust. Users are more likely to trust AI systems that acknowledge uncertainty, explain limitations, and behave predictably.

Overconfident systems that fail silently can be more dangerous than systems that occasionally abstain or request human input. Effective uncertainty communication—through confidence scores, warnings, or fallback mechanisms—is therefore a critical design consideration.
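A simple fallback mechanism is to abstain and route the case to a human whenever the model's top confidence falls below a threshold. The sketch below assumes calibrated class probabilities are already available; the function name, the returned fields, and the 0.8 threshold are hypothetical choices that would need tuning for a real application.

```python
def predict_or_abstain(class_probs, class_names, threshold=0.8):
    """Return the top prediction, or abstain and flag for human review if unsure."""
    best = max(range(len(class_probs)), key=lambda i: class_probs[i])
    confidence = class_probs[best]
    if confidence >= threshold:
        return {"decision": class_names[best], "confidence": confidence, "needs_review": False}
    # Below the threshold: make no automated decision and ask for human input.
    return {"decision": None, "confidence": confidence, "needs_review": True}

names = ["approve", "reject"]
confident_case = predict_or_abstain([0.93, 0.07], names)
uncertain_case = predict_or_abstain([0.55, 0.45], names)
```

Raising the threshold sends more cases to human review in exchange for fewer confident mistakes, so the value is as much an operational decision as a technical one.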

Trustworthy AI is not about eliminating uncertainty, but about managing it responsibly.

9. Robust AI in real-world deployment

In practice, robustness and reliability are achieved through a combination of technical, organizational, and governance measures. These include rigorous testing, clear operational boundaries, human-in-the-loop oversight, and continuous performance auditing.

No model remains robust forever without maintenance. Responsible deployment treats AI systems as evolving artifacts that must be monitored and updated as conditions change.

10. The role of robustness and reliability in the future of AI

As AI systems become more autonomous and integrated into society, robustness, uncertainty awareness, and reliability will define their legitimacy. These properties are essential for embodied AI, decision-support systems, and any application where human well-being is affected.

Future AI progress will depend not only on making models more powerful, but on making them more dependable, interpretable, and honest about their limitations.

Summary

Robustness, uncertainty, and reliability are foundational concepts for deploying AI responsibly in real-world environments. Robust systems withstand noise and disruption, uncertainty-aware systems recognize the limits of their knowledge, and reliable systems behave consistently over time. Together, these properties move AI beyond impressive demonstrations toward trustworthy, human-aligned technology capable of operating safely in complex, dynamic settings.