AI Cloning – 15 Core Concepts Defining the Replication of Human Intelligence

AI cloning refers to a broad class of technologies that attempt to replicate, simulate, or extend human identity, cognition, behavior, or presence using artificial intelligence. Unlike traditional automation, AI cloning does not merely perform tasks; it attempts to mirror who a person is and how they think, speak, and act. As these technologies mature, they raise profound technical, ethical, legal, and civilizational questions.

Below are 15 foundational concepts that together define the emerging AI cloning landscape.

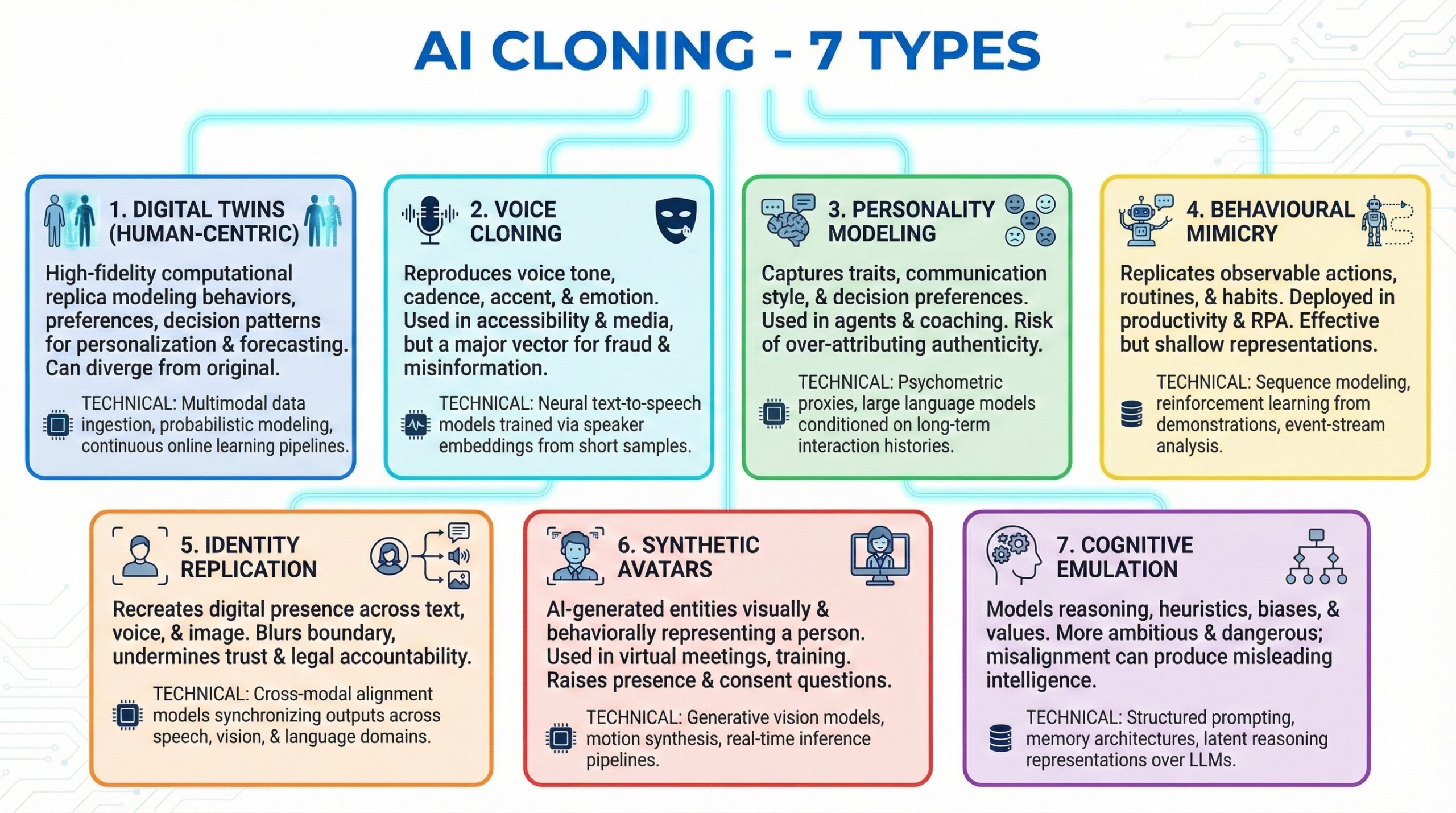

1. Digital Twins (Human-Centric)

A digital twin is a high-fidelity computational replica of a real-world entity, a concept increasingly applied to humans as well as machines. In human contexts, digital twins model behaviors, preferences, decision patterns, or responses under simulated conditions. They are used for personalization, forecasting, and scenario analysis rather than direct replacement. Over time, such twins can diverge from the original human as data and environments change.

Technically, human digital twins rely on multimodal data ingestion, probabilistic state modeling, and continuous online learning pipelines.
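
As a minimal sketch of probabilistic state modeling with online learning, assume a twin that tracks a single categorical preference, such as which meeting slot a person tends to accept. The `PreferenceTwin` class and its `decay` parameter are hypothetical; the decay lets recent behavior outweigh old evidence, which is one simple way a twin can follow (or drift away from) the person it models.

```python
class PreferenceTwin:
    """Toy human digital twin: a decaying Dirichlet-style count model of one choice."""

    def __init__(self, options, prior=1.0, decay=0.9):
        self.prior = prior  # uniform pseudo-count for every option
        self.decay = decay  # < 1.0 lets old evidence fade as behavior changes
        self.counts = {option: prior for option in options}

    def observe(self, choice):
        """Online update from a single observed choice; no batch retraining."""
        if choice not in self.counts:
            raise ValueError(f"unknown option: {choice}")
        for option in self.counts:
            # Shrink accumulated evidence toward the prior, then add the new observation.
            self.counts[option] = self.prior + self.decay * (self.counts[option] - self.prior)
        self.counts[choice] += 1.0

    def predict(self):
        """Normalized pseudo-counts, read as predicted choice probabilities."""
        total = sum(self.counts.values())
        return {option: count / total for option, count in self.counts.items()}


twin = PreferenceTwin(["morning", "afternoon"])
for _ in range(5):
    twin.observe("morning")    # the person habitually accepts morning slots
for _ in range(10):
    twin.observe("afternoon")  # then their routine changes
print({option: round(p, 2) for option, p in twin.predict().items()})
```

A real human digital twin would fuse many data streams and latent states; this only shows the observe-update-predict loop.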

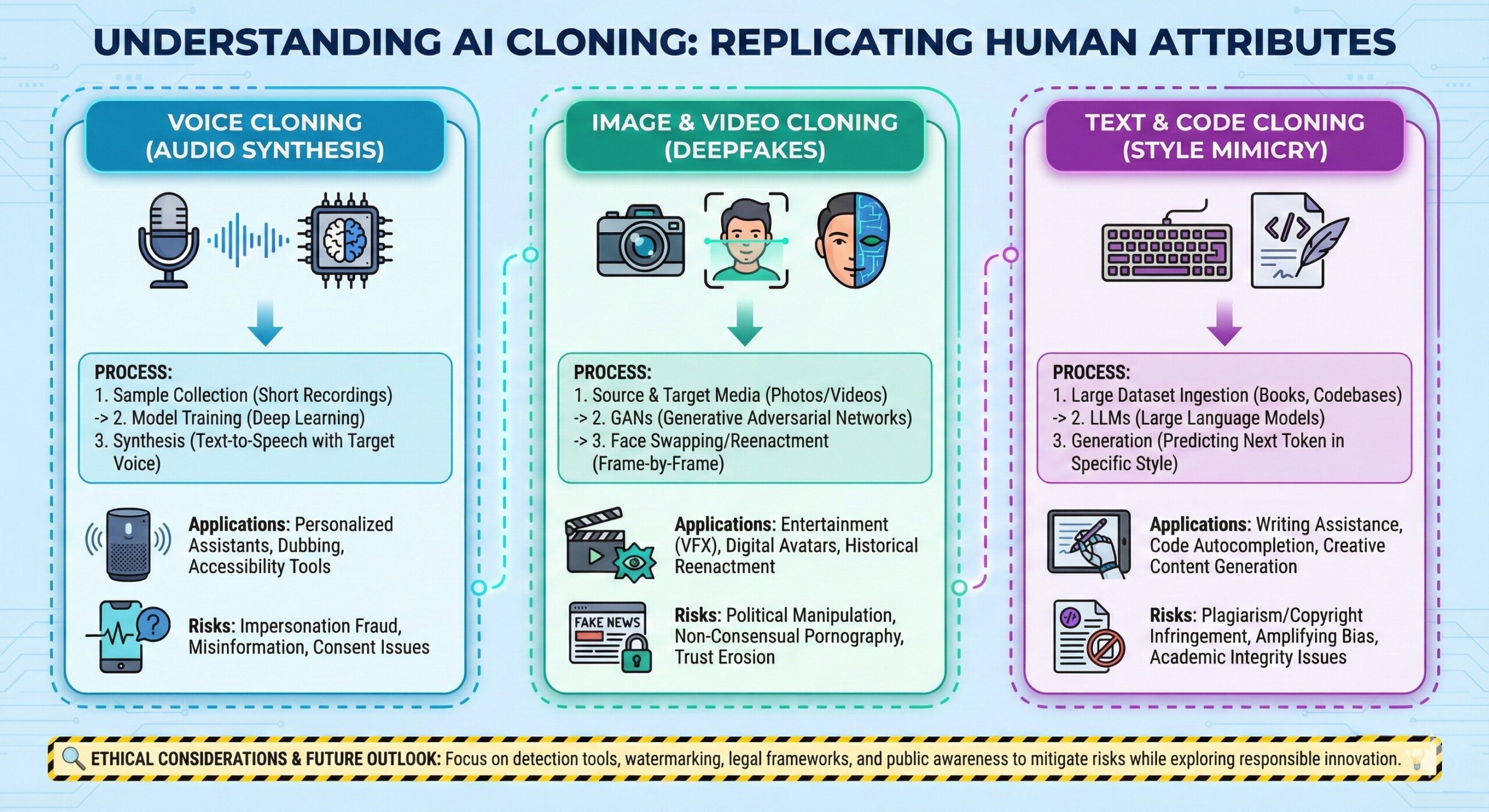

2. Voice Cloning

Voice cloning uses AI to reproduce a person’s voice, including tone, cadence, accent, and emotional inflection. It is widely applied in accessibility, media localization, and assistive technologies. However, it is also a major vector for fraud, impersonation, and misinformation. The technology lowers the cost of identity misuse dramatically.

Technically, modern voice cloning uses neural text-to-speech models conditioned on speaker embeddings extracted from short audio samples.
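
The embedding side of this can be sketched, assuming a speaker encoder already produces per-frame vectors (the toy 3-dimensional vectors below stand in for its output). In d-vector-style approaches, frame embeddings are averaged and L2-normalized into one speaker vector that conditions the TTS model; cosine similarity between such vectors is also a common speaker-verification score, which is why a good clone can pass naive voice checks. The function names are illustrative, not taken from any specific library.

```python
import math


def l2_normalize(vec):
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def speaker_embedding(frame_embeddings):
    """Average per-frame encoder outputs, then L2-normalize (d-vector style)."""
    n = len(frame_embeddings)
    mean = [sum(dims) / n for dims in zip(*frame_embeddings)]
    return l2_normalize(mean)


def cosine_similarity(a, b):
    """Cosine similarity, a score speaker-verification systems commonly threshold."""
    a, b = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a, b))


# Toy vectors standing in for a real speaker encoder's outputs (hypothetical values).
reference = speaker_embedding([[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]])
cloned = speaker_embedding([[0.85, 0.15, 0.05]])
other = speaker_embedding([[0.1, 0.2, 0.9]])

print(round(cosine_similarity(reference, cloned), 3))
print(round(cosine_similarity(reference, other), 3))
```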

3. Personality Modeling

Personality modeling attempts to capture stable traits such as temperament, communication style, and decision preferences. Rather than copying exact words, it reproduces patterns of response. This is increasingly used in conversational agents, coaching tools, and digital companions. The danger lies in over-attributing authenticity to probabilistic behavior.

Technically, personality models combine psychometric proxies with large language models conditioned on long-term interaction histories.
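
One common way to condition a model without fine-tuning is to turn trait estimates into style instructions and prepend them, together with recent history, to the prompt. The sketch below assumes Big Five-style trait scores in [0, 1]; the `TRAIT_DIRECTIVES` table, the threshold, and `build_persona_prompt` are hypothetical, and no particular LLM API is assumed.

```python
# Hypothetical mapping from a trait to (low-score, high-score) style directives.
TRAIT_DIRECTIVES = {
    "extraversion": ("Keep replies brief and reserved.", "Be expansive and energetic."),
    "agreeableness": ("Be direct, even blunt.", "Be warm and accommodating."),
    "conscientiousness": ("Keep structure loose and informal.", "Be precise and well organized."),
}


def build_persona_prompt(traits, history, max_turns=4, threshold=0.5):
    """Turn trait scores in [0, 1] and recent turns into a conditioning prompt.

    The returned string would be sent to an LLM as a system prompt; no
    specific model or API is assumed here.
    """
    lines = ["Respond in the communication style of the person described below."]
    for trait in sorted(traits):
        if trait not in TRAIT_DIRECTIVES:
            continue  # skip traits with no directive defined
        low, high = TRAIT_DIRECTIVES[trait]
        lines.append("- " + (high if traits[trait] >= threshold else low))
    lines.append("Recent interaction history:")
    for speaker, text in history[-max_turns:]:  # only the most recent turns
        lines.append(f"{speaker}: {text}")
    return "\n".join(lines)


history = [("user", f"message {i}") for i in range(6)]
print(build_persona_prompt({"extraversion": 0.2, "agreeableness": 0.9}, history))
```

Because the "personality" here is only text conditioning over a probabilistic model, it shows why such output should not be mistaken for the person's authentic voice.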

4. Behavioral Mimicry

Behavioral mimicry focuses on replicating observable actions rather than internal reasoning. AI systems learn routines, workflows, and habits from user activity logs. These clones are often deployed in productivity tools or robotic process automation. They are effective but shallow representations of human intelligence.

Technically, behavioral mimicry relies on sequence modeling, reinforcement learning from demonstrations, and event-stream analysis.
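
The simplest form of sequence modeling over an event stream is a first-order Markov model: count which action follows which in logged sessions, then replay the most frequent successor. This is a deliberately minimal stand-in, assuming hypothetical event names, for the richer sequence models and demonstration learning mentioned above.

```python
from collections import Counter, defaultdict


def fit_transitions(sessions):
    """Count action -> next-action transitions across logged sessions."""
    transitions = defaultdict(Counter)
    for events in sessions:
        for current, nxt in zip(events, events[1:]):
            transitions[current][nxt] += 1
    return transitions


def predict_next(transitions, action):
    """Return the most frequently observed successor, or None if unseen."""
    followers = transitions.get(action)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def imitate(transitions, start, max_steps=10):
    """Replay the user's habitual routine greedily from a starting action."""
    routine = [start]
    while len(routine) < max_steps:
        nxt = predict_next(transitions, routine[-1])
        if nxt is None or nxt in routine:  # stop at dead ends and loops
            break
        routine.append(nxt)
    return routine


logs = [
    ["open_inbox", "archive_newsletters", "open_calendar", "join_standup"],
    ["open_inbox", "archive_newsletters", "open_calendar", "join_standup"],
    ["open_inbox", "reply_to_manager", "open_calendar"],
]
model = fit_transitions(logs)
print(imitate(model, "open_inbox"))
# -> ['open_inbox', 'archive_newsletters', 'open_calendar', 'join_standup']
```

The clone reproduces what the user did, not why, which is the shallowness described above.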

5. Identity Replication

Identity replication recreates a person’s digital presence across text, voice, image, and interaction patterns. It blurs the boundary between representation and impersonation. Without safeguards, it undermines trust, authentication, and legal accountability. This makes it a core concern for governance and law.

Technically, identity replication requires cross-modal alignment models that synchronize outputs across speech, vision, and language domains.

6. Synthetic Avatars

Synthetic avatars are AI-generated entities that visually and behaviorally represent a person in digital environments. They are used in virtual meetings, training, entertainment, and customer engagement. Avatars may operate synchronously with humans or autonomously. Their realism raises questions about presence and consent.

Technically, they combine generative vision models, motion synthesis, and real-time inference pipelines.

7. Cognitive Emulation

Cognitive emulation attempts to model how a person reasons, not just what they say or do. This includes heuristics, biases, values, and decision frameworks. It is far more ambitious, and far more dangerous, than surface-level mimicry. Misalignment here can produce reasoning that sounds convincingly like the person's own while misrepresenting it.

Technically, cognitive emulation uses structured prompting, memory architectures, and latent reasoning representations layered over LLMs.

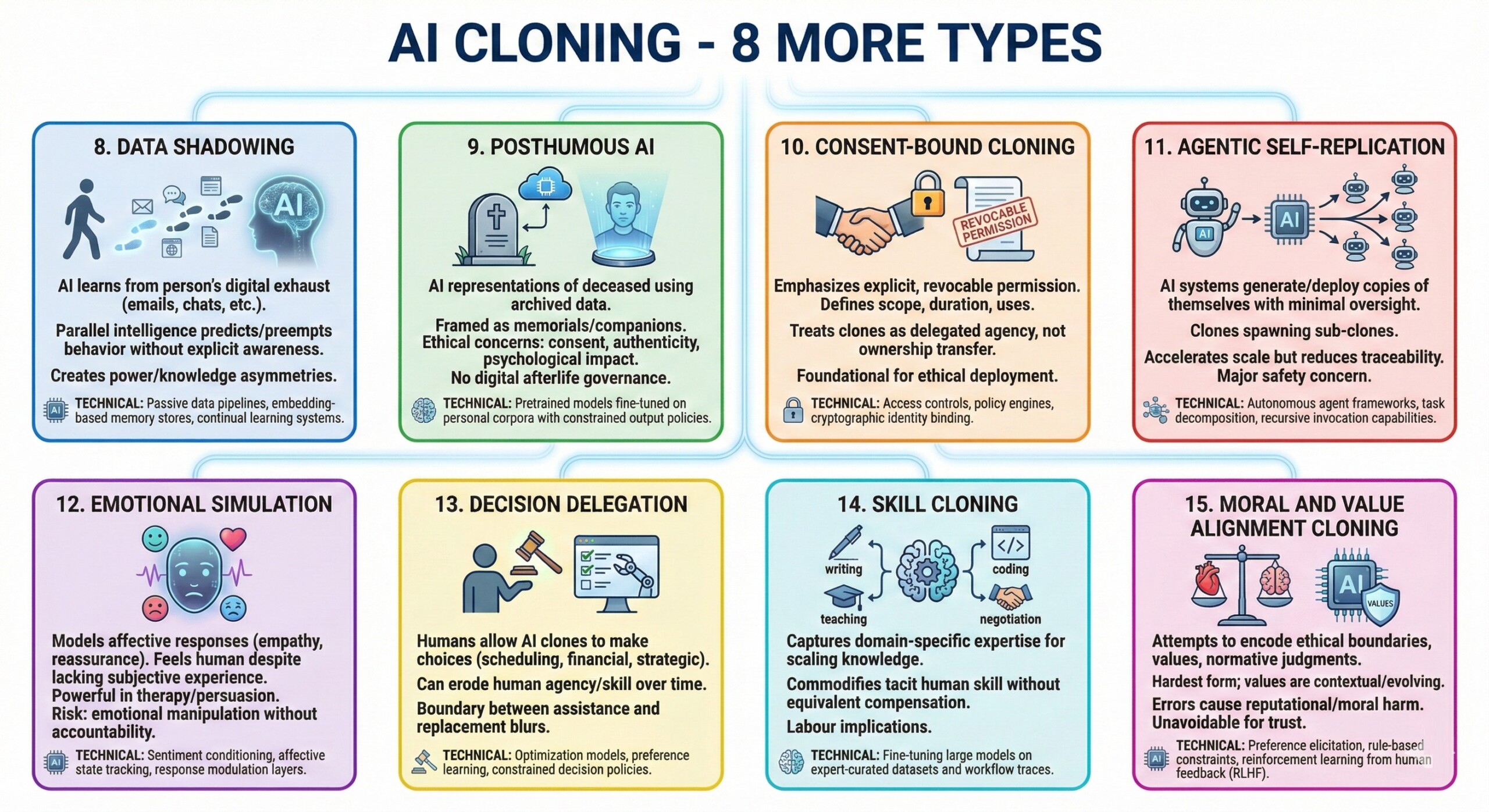

8. Data Shadowing

Data shadowing occurs when AI systems learn continuously from a person’s digital exhaust—emails, chats, documents, browsing, and actions. Over time, a parallel intelligence emerges that predicts and preempts human behavior. Often this happens without explicit user awareness. This creates asymmetries of power and knowledge.

Technically, data shadowing relies on passive data pipelines, embedding-based memory stores, and continual learning systems.
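
An embedding-based memory store can be sketched in a few lines, assuming a toy bag-of-words `embed` function in place of a learned embedding model. Items are ingested passively as they arrive from different sources and later retrieved by cosine similarity; the continual-learning part is not shown. `MemoryStore` and the sample data are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for a learned embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a, b):
    dot = sum(count * b[token] for token, count in a.items())  # missing tokens count as 0
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class MemoryStore:
    """Passively ingests a person's digital exhaust and retrieves by similarity."""

    def __init__(self):
        self.items = []  # (source, text, vector) tuples

    def ingest(self, source, text):
        # Meant to be called by background pipelines, not by the user.
        self.items.append((source, text, embed(text)))

    def search(self, query, k=2):
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[2]), reverse=True)
        return [(source, text) for source, text, _ in ranked[:k]]


store = MemoryStore()
store.ingest("email", "Flight to Berlin booked for the conference in May")
store.ingest("chat", "Can we move the budget review to Friday?")
store.ingest("browser", "Hotels near the Berlin conference centre")
print(store.search("berlin trip plans"))
```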

9. Posthumous AI

Posthumous AI involves creating AI representations of deceased individuals using archived data. These systems are framed as memorials, companions, or historical simulations. Ethical concerns include consent, authenticity, and psychological impact. Society lacks norms for digital afterlife governance.

Technically, posthumous AI systems reuse pretrained models fine-tuned on personal corpora with constrained output policies.
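
A constrained output policy can be as simple as a filter applied to every draft reply before it is shown. The rules below are hypothetical examples (always disclose that the speaker is an AI; refuse new commitments and financial or legal topics). A real deployment would likely use trained classifiers and policies agreed with the family or estate rather than keyword patterns.

```python
import re

# Hypothetical rules; real policies would be agreed with the family or estate.
BLOCKED_PATTERNS = [
    r"\bi promise\b",                                   # no new commitments
    r"\b(bank account|password|inheritance|estate)\b",  # no financial or legal matters
]
DISCLOSURE = "[AI-generated memorial response]"
REFUSAL = "That is not something this memorial can speak to."


def apply_output_policy(draft):
    """Check a model's draft reply against the policy before showing it."""
    lowered = draft.lower()
    if any(re.search(pattern, lowered) for pattern in BLOCKED_PATTERNS):
        return f"{DISCLOSURE} {REFUSAL}"
    return f"{DISCLOSURE} {draft}"  # allowed, but always labeled as AI output


print(apply_output_policy("I still remember our summers at the lake."))
print(apply_output_policy("The password is in my desk drawer."))
```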

10. Consent-Bound Cloning

Consent-bound cloning emphasizes explicit, revocable permission for AI cloning activities. It defines scope, duration, and allowed uses of a clone. This approach treats AI clones as delegated agency, not ownership transfer. It is foundational for ethical deployment.

Technically, consent-bound systems require access controls, policy engines, and cryptographic identity binding.
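
These three ingredients can be combined in a small sketch, assuming a consent grant encoded as a signed token that records scope, expiry, and an id that can be revoked. HMAC-SHA256 from the Python standard library stands in for the cryptographic binding; a production system would more likely use public-key signatures so that verifiers never hold the signing key. `issue_grant`, `is_permitted`, and the key are hypothetical.

```python
import base64
import hashlib
import hmac
import json
import time

SIGNING_KEY = b"demo-key-not-for-production"  # hypothetical; use a managed key in practice
REVOKED_GRANTS = set()  # grant ids the person has withdrawn


def issue_grant(grant_id, subject, scopes, ttl_seconds, now=None):
    """Create a signed consent grant binding a clone's permissions to a person."""
    now = time.time() if now is None else now
    claims = {"id": grant_id, "sub": subject, "scopes": sorted(scopes), "exp": now + ttl_seconds}
    body = json.dumps(claims, sort_keys=True).encode()
    signature = hmac.new(SIGNING_KEY, body, hashlib.sha256).hexdigest()
    return base64.urlsafe_b64encode(body).decode() + "." + signature


def revoke(grant_id):
    REVOKED_GRANTS.add(grant_id)


def is_permitted(token, action, now=None):
    """Policy check: authentic, unexpired, unrevoked, and the action is in scope."""
    now = time.time() if now is None else now
    try:
        encoded, signature = token.rsplit(".", 1)
        body = base64.urlsafe_b64decode(encoded.encode())
    except ValueError:  # malformed token (binascii.Error subclasses ValueError)
        return False
    expected = hmac.new(SIGNING_KEY, body, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature, expected):
        return False  # tampered or forged
    claims = json.loads(body)
    return (
        claims["id"] not in REVOKED_GRANTS
        and now < claims["exp"]
        and action in claims["scopes"]
    )


token = issue_grant("g-001", "alice", ["draft_email", "schedule_meeting"], 3600, now=1000.0)
print(is_permitted(token, "draft_email", now=1500.0))    # True: in scope, not expired
print(is_permitted(token, "sign_contract", now=1500.0))  # False: outside scope
revoke("g-001")
print(is_permitted(token, "draft_email", now=1500.0))    # False: consent withdrawn
```

Each check maps to a property described above: the scope list limits allowed uses, `exp` limits duration, and the revocation set makes consent withdrawable.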

11. Agentic Self-Replication

Agentic self-replication occurs when AI systems generate or deploy copies of themselves with minimal human oversight. In human cloning contexts, this means a clone spawning sub-clones for specific tasks or roles. This increases scale but reduces traceability. It is a major safety concern.

Technically, this depends on autonomous agent frameworks with task decomposition and recursive invocation capabilities.
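
Recursive invocation with task decomposition can be sketched with a ledger that records every spawned agent and its parent, plus a depth limit and a spawn budget as examples of guardrails that preserve traceability. The `decompose` planner here is hypothetical and simply splits a task on the word "and"; real agent frameworks typically use a model to plan.

```python
from dataclasses import dataclass, field


@dataclass
class SpawnLedger:
    """Records every spawned agent so each copy is traceable to its parent."""
    max_depth: int = 2
    max_agents: int = 10
    records: list = field(default_factory=list)  # (agent_id, parent_id, task)

    def register(self, parent_id, task):
        if len(self.records) >= self.max_agents:
            raise RuntimeError("spawn budget exhausted; escalate to a human")
        agent_id = len(self.records) + 1
        self.records.append((agent_id, parent_id, task))
        return agent_id


def decompose(task):
    """Hypothetical planner: split a compound task on ' and '."""
    parts = [part.strip() for part in task.split(" and ")]
    return parts if len(parts) > 1 else []


def run_agent(task, ledger, parent_id=None, depth=0):
    """Recursively spawn sub-agents until tasks are atomic or depth runs out."""
    agent_id = ledger.register(parent_id, task)
    subtasks = decompose(task) if depth < ledger.max_depth else []
    if not subtasks:
        return [f"agent {agent_id}: {task}"]  # leaf agent does the work itself
    results = []
    for sub in subtasks:
        results.extend(run_agent(sub, ledger, parent_id=agent_id, depth=depth + 1))
    return results


ledger = SpawnLedger()
print(run_agent("book flights and reserve a hotel and draft the agenda", ledger))
for record in ledger.records:
    print(record)
```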

12. Emotional Simulation

Emotional simulation models affective responses such as empathy, reassurance, or urgency. These systems can feel deeply human despite lacking subjective experience. They are powerful in therapy, education, and persuasion contexts. The risk is emotional manipulation without accountability.

Technically, emotional simulation uses sentiment conditioning, affective state tracking, and response modulation layers.
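
Affective state tracking and response modulation can be illustrated, assuming a toy word lexicon in place of a trained sentiment model: each turn is scored, the scores are smoothed with an exponential moving average, and the draft reply's tone is adjusted when the tracked state crosses a threshold. All names and values are hypothetical.

```python
import re

# Toy lexicon standing in for a trained sentiment model (hypothetical values).
LEXICON = {"great": 1.0, "thanks": 0.5, "worried": -0.7, "angry": -1.0, "lost": -0.5}


def sentiment(text):
    """Average lexicon score of the words in text, in [-1, 1]; 0.0 if none match."""
    scores = [LEXICON[w] for w in re.findall(r"[a-z]+", text.lower()) if w in LEXICON]
    return sum(scores) / len(scores) if scores else 0.0


class AffectTracker:
    """Tracks the user's affective state as an exponential moving average."""

    def __init__(self, alpha=0.5):
        self.alpha = alpha  # weight given to the newest turn
        self.state = 0.0    # neutral starting point

    def update(self, text):
        self.state = self.alpha * sentiment(text) + (1 - self.alpha) * self.state
        return self.state


def modulate(reply, state, threshold=0.3):
    """Response modulation: adjust a draft reply's tone to the tracked state."""
    if state < -threshold:
        return "I'm sorry this has been stressful. " + reply
    if state > threshold:
        return "Glad to hear it! " + reply
    return reply


tracker = AffectTracker()
for turn in ["I'm worried and a bit lost", "Still worried about the deadline"]:
    tracker.update(turn)
print(modulate("Here is a revised plan.", tracker.state))
```

The modulation step that makes a reply feel empathetic is the same lever the manipulation risk above refers to.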

13. Decision Delegation

Decision delegation occurs when humans allow AI clones to make choices on their behalf. This ranges from scheduling to financial and strategic decisions. Over time, delegation can erode human agency and skill. The boundary between assistance and replacement becomes unclear.

Technically, delegation systems integrate optimization models, preference learning, and constrained decision policies.
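
A constrained decision policy can be sketched as follows: filter options by hard constraints, rank the rest by a utility whose weights are assumed to come from preference learning, and escalate to the human when nothing is feasible or the top choices are too close to call. The linear utility, option fields, and thresholds are hypothetical.

```python
def utility(option, weights):
    """Linear utility; weights are assumed to come from preference learning."""
    return sum(weights.get(name, 0.0) * value for name, value in option["features"].items())


def delegate_decision(options, weights, max_cost, min_margin=0.1):
    """Choose within hard constraints, or hand the decision back to the human."""
    feasible = [o for o in options if o["cost"] <= max_cost]  # hard constraint
    if not feasible:
        return ("escalate", "no option satisfies the constraints")
    ranked = sorted(feasible, key=lambda o: utility(o, weights), reverse=True)
    if len(ranked) > 1:
        margin = utility(ranked[0], weights) - utility(ranked[1], weights)
        if margin < min_margin:
            return ("escalate", "top options are too close to call")
    return ("decide", ranked[0]["name"])


weights = {"comfort": 0.6, "speed": 0.4}  # hypothetical learned preferences
options = [
    {"name": "A", "cost": 300, "features": {"comfort": 0.9, "speed": 0.5}},
    {"name": "B", "cost": 150, "features": {"comfort": 0.4, "speed": 0.9}},
    {"name": "C", "cost": 900, "features": {"comfort": 1.0, "speed": 1.0}},
]
print(delegate_decision(options, weights, max_cost=500))
```

Escalation paths like these are one way to keep the boundary between assistance and replacement explicit.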

14. Skill Cloning

Skill cloning captures domain-specific expertise such as writing, coding, teaching, or negotiation style. It is often used to scale expert knowledge across organizations. However, it commodifies tacit human skill without equivalent compensation structures, which has significant labor implications.

Technically, skill cloning relies on fine-tuning large models on expert-curated datasets and workflow traces.
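
On the data side, fine-tuning usually starts by turning curated traces into training pairs. The sketch assumes a hypothetical trace format (task, tool steps, final output, expert rating) and emits prompt/completion records as JSON Lines; the exact schema a given fine-tuning service expects varies, so the field names are illustrative.

```python
import json


def traces_to_training_records(traces, min_rating=4):
    """Convert expert-approved workflow traces into prompt/completion pairs."""
    records = []
    for trace in traces:
        if trace.get("expert_rating", 0) < min_rating:
            continue  # curation step: drop traces the experts did not endorse
        steps = "\n".join(f"[{s['tool']}] {s['observation']}" for s in trace["steps"])
        records.append({
            "prompt": f"Task: {trace['task']}\nWorkflow so far:\n{steps}\nExpert response:",
            "completion": trace["final_output"],
        })
    return records


def to_jsonl(records):
    """One JSON object per line, a format many fine-tuning pipelines accept."""
    return "\n".join(json.dumps(record, ensure_ascii=False) for record in records)


traces = [
    {
        "task": "Review a pull request",
        "expert_rating": 5,
        "steps": [
            {"tool": "diff", "observation": "Adds retry loop without backoff"},
            {"tool": "tests", "observation": "No test covers the failure path"},
        ],
        "final_output": "Request changes: add exponential backoff and a failure-path test.",
    },
    {
        "task": "Review a pull request",
        "expert_rating": 2,
        "steps": [{"tool": "diff", "observation": "Renames a variable"}],
        "final_output": "LGTM",
    },
]
print(to_jsonl(traces_to_training_records(traces)))
```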

15. Moral and Value Alignment Cloning

This concept attempts to encode a person’s ethical boundaries, values, and normative judgments into AI behavior. It is the hardest form of cloning because values are contextual and evolving. Errors here can cause reputational or moral harm at scale. Yet it is unavoidable for trusted delegation.

Technically, value alignment cloning combines preference elicitation, rule-based constraints, and reinforcement learning from human feedback (RLHF).
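
Two of these ingredients can be sketched together, assuming responses are described by hand-picked feature vectors: a linear reward model fit to a person's pairwise judgments with the Bradley-Terry likelihood (the preference model commonly used to train RLHF reward models), and hard rule-based constraints applied before any ranking. The features, the rule, and the function names are hypothetical, and the policy-optimization stage of RLHF is not shown.

```python
import math


def reward(features, weights):
    return sum(w * f for w, f in zip(weights, features))


def fit_bradley_terry(comparisons, dim, lr=1.0, epochs=300):
    """Fit linear reward weights from (preferred, rejected) feature pairs.

    Gradient ascent on the mean of log sigmoid(r(preferred) - r(rejected)),
    the Bradley-Terry log-likelihood.
    """
    weights = [0.0] * dim
    for _ in range(epochs):
        grad = [0.0] * dim
        for preferred, rejected in comparisons:
            margin = reward(preferred, weights) - reward(rejected, weights)
            p = 1.0 / (1.0 + math.exp(-margin))  # model's P(preferred wins)
            for i in range(dim):
                grad[i] += (1.0 - p) * (preferred[i] - rejected[i])
        weights = [w + lr * g / len(comparisons) for w, g in zip(weights, grad)]
    return weights


FORBIDDEN_FLAGS = {"deceives_user"}  # hypothetical hard rule, never traded off


def choose_response(candidates, weights):
    """Apply rule-based constraints first, then rank what remains by reward."""
    allowed = [c for c in candidates if not (c["flags"] & FORBIDDEN_FLAGS)]
    if not allowed:
        return None  # nothing acceptable: defer to the human
    return max(allowed, key=lambda c: reward(c["features"], weights))["text"]


# Features: [honesty, agreeableness] (hypothetical). These elicited judgments
# favor honesty even at some cost to agreeableness.
comparisons = [
    ([1.0, 0.5], [0.0, 1.0]),
    ([0.8, 0.6], [0.2, 0.9]),
    ([0.9, 0.9], [0.9, 0.2]),
]
weights = fit_bradley_terry(comparisons, dim=2)
candidates = [
    {"text": "Candid feedback", "features": [1.0, 0.4], "flags": set()},
    {"text": "Flattering fiction", "features": [0.0, 1.0], "flags": {"deceives_user"}},
]
print(weights, choose_response(candidates, weights))
```

Keeping the hard rule outside the learned reward means no amount of preference data can trade it away, which matters when values are contextual and the learned model may be wrong.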

Summary

AI cloning forces humanity to confront a fundamental question: what aspects of ourselves are we willing to replicate, delegate, or surrender to machines? These technologies will not merely reshape jobs and productivity – they will reshape identity, agency, and trust. The challenge is not to stop AI cloning, but to govern it with clarity, consent, and humility. The future of human–AI coexistence depends on how responsibly we draw these boundaries.